How to do hotword detection with Universal-Streaming Speech-to-Text and Go

Learn to build hotword detection in Go using AssemblyAI's Universal-Streaming Speech-to-Text API.

In this tutorial, you'll learn how to respond to hotwords in voice data using AssemblyAI's Universal-Streaming Speech-to-Text API with direct WebSocket connections in Go.

If you've ever wanted to create a personal AI assistant like Siri or Alexa, the first step is to figure out how to trigger the AI using a specific word or phrase (also known as a hotword). All of the prevalent AI systems use a similar approach; for Alexa, the hotword is "Alexa," and for Siri, the hotword is "Hey Siri."

The example builds a small hotword detector on top of that streaming connection. In homage to Iron Man, the assistant in the example is called Jarvis, but you're welcome to name it whatever you want.

Before you start

To complete this tutorial, you'll need:

- Go 1.19 or later installed on your system

- An AssemblyAI account with a valid API key

- A microphone connected to your computer

Set up your environment

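You'll use the Go bindings for PortAudio to capture raw audio from your microphone and the Gorilla WebSocket library to connect directly to AssemblyAI's streaming API. The PortAudio bindings are built with cgo, so the native PortAudio library and a C compiler must also be installed on your system.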

Let's start by creating a new Go project and setting up all of the required dependencies:

mkdir jarvis
cd jarvis
go mod init jarvis
go get github.com/gordonklaus/portaudio
go get github.com/gorilla/websocket

If the execution is successful, you should end up with the following directory structure:

jarvis
├── go.mod
└── go.sum

Create an AssemblyAI account

To get an API key, you first need to create an AssemblyAI account. Go to the sign-up page and fill out the form to get started.

Next, go to the API Keys page of your Account Dashboard and take note of your API key, as you'll need it in the next step.

Record the raw audio data

With the dependencies set up and the API key in hand, you're ready to start implementing the core logic of your personal AI assistant. The first step is to figure out how to get raw audio data from the microphone.

Create a new recorder.go file in the jarvis directory, import the dependencies, and define a new recorder struct:

package main

import (
	"bytes"
	"encoding/binary"

	"github.com/gordonklaus/portaudio"
)

type recorder struct {
	stream *portaudio.Stream
	in     []int16
}

The recorder struct holds a reference to the input stream. Each time the stream is read, PortAudio fills the in buffer with the latest 16-bit samples.

Use the following code to configure a newRecorder function to create and initialize a new recorder struct:

func newRecorder(sampleRate int, framesPerBuffer int) (*recorder, error) {
	in := make([]int16, framesPerBuffer)

	stream, err := portaudio.OpenDefaultStream(1, 0, float64(sampleRate), framesPerBuffer, in)
	if err != nil {
		return nil, err
	}

	return &recorder{
		stream: stream,
		in:     in,
	}, nil
}

This function takes in the required sample rate and frames per buffer to configure PortAudio, opens the default audio input device attached to your computer, and returns a pointer to a new recorder struct.
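As a rough sketch of the arithmetic behind the framesPerBuffer argument: the number of frames in a chunk is the sample rate multiplied by the chunk duration, and each 16-bit mono frame occupies two bytes. The helper names framesFor and bytesFor are hypothetical and are not part of PortAudio; the example assumes one input channel, as in newRecorder.

```go
package main

import "fmt"

// framesFor returns how many frames cover millis milliseconds of audio
// at the given sample rate. Hypothetical helper for illustration only.
func framesFor(sampleRate, millis int) int {
	return sampleRate * millis / 1000
}

// bytesFor returns the size in bytes of that many 16-bit mono frames.
func bytesFor(frames int) int {
	return frames * 2 // 2 bytes per int16 sample, 1 channel
}

func main() {
	for _, rate := range []int{16000, 44100, 48000} {
		frames := framesFor(rate, 50)
		fmt.Printf("%d Hz: %d frames, %d bytes per 50 ms chunk\n",
			rate, frames, bytesFor(frames))
	}
}
```

At 16,000 Hz, for instance, a 50 ms chunk works out to 800 frames, or 1,600 bytes.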

Next, define a few methods on the recorder struct pointer that you'll use in the next step:

func (r *recorder) Read() ([]byte, error) {
	if err := r.stream.Read(); err != nil {
		return nil, err
	}

	buf := new(bytes.Buffer)
	if err := binary.Write(buf, binary.LittleEndian, r.in); err != nil {
		return nil, err
	}

	return buf.Bytes(), nil
}

func (r *recorder) Start() error {
	return r.stream.Start()
}

func (r *recorder) Stop() error {
	return r.stream.Stop()
}

func (r *recorder) Close() error {
	return r.stream.Close()
}

The Read method blocks until the input stream has filled the in buffer, encodes the samples as little-endian 16-bit PCM bytes, and returns them. The Start, Stop, and Close methods call the similarly named methods on the stream.
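To make the byte layout concrete, here is a minimal standalone sketch of the same int16-to-bytes conversion, written with binary.LittleEndian.PutUint16 instead of binary.Write. The function name pcm16LE is hypothetical; it is only meant to show what the bytes returned by Read look like.

```go
package main

import (
	"encoding/binary"
	"fmt"
)

// pcm16LE encodes samples as little-endian 16-bit PCM, two bytes per sample.
// Hypothetical helper; it mirrors the encoding step inside Read.
func pcm16LE(samples []int16) []byte {
	out := make([]byte, 2*len(samples))
	for i, s := range samples {
		binary.LittleEndian.PutUint16(out[2*i:], uint16(s))
	}
	return out
}

func main() {
	// The low byte of each sample comes first.
	fmt.Printf("% x\n", pcm16LE([]int16{1, -2, 256})) // prints: 01 00 fe ff 00 01
}
```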

Create a WebSocket client for AssemblyAI

Next, you'll connect directly to AssemblyAI's WebSocket endpoint. Create a new client.go file in the jarvis directory:

package main

import (
	"encoding/json"
	"fmt"
	"net/http"
	"net/url"
	"time"

	"github.com/gorilla/websocket"
)

type AssemblyAIClient struct {
	conn   *websocket.Conn
	apiKey string
}

// Message types for AssemblyAI v3 WebSocket API

type BeginMessage struct {
	Type      string  `json:"type"`
	ID        string  `json:"id"`
	ExpiresAt float64 `json:"expires_at"` // Unix timestamp as number
}

type TurnMessage struct {
	Type                string  `json:"type"`
	TurnOrder           int     `json:"turn_order"`
	TurnIsFormatted     bool    `json:"turn_is_formatted"`
	EndOfTurn           bool    `json:"end_of_turn"`
	Transcript          string  `json:"transcript"`
	EndOfTurnConfidence float64 `json:"end_of_turn_confidence"`
	Words               []Word  `json:"words"`
}

type Word struct {
	Text        string  `json:"text"`
	Start       int     `json:"start"`
	End         int     `json:"end"`
	Confidence  float64 `json:"confidence"`
	WordIsFinal bool    `json:"word_is_final"`
}

type TerminationMessage struct {
	Type                   string `json:"type"`
	AudioDurationSeconds   int    `json:"audio_duration_seconds"`
	SessionDurationSeconds int    `json:"session_duration_seconds"`
}

type TerminateMessage struct {
	Type string `json:"type"`
}

func NewAssemblyAIClient(apiKey string) *AssemblyAIClient {
	return &AssemblyAIClient{
		apiKey: apiKey,
	}
}

func (c *AssemblyAIClient) Connect(sampleRate int) error {
	// Build URL with query parameters
	params := url.Values{}
	params.Add("sample_rate", fmt.Sprintf("%d", sampleRate))
	params.Add("format_turns", "true")

	wsURL := fmt.Sprintf("wss://streaming.assemblyai.com/v3/ws?%s", params.Encode())

	header := http.Header{}
	header.Add("Authorization", c.apiKey)

	conn, _, err := websocket.DefaultDialer.Dial(wsURL, header)
	if err != nil {
		return fmt.Errorf("failed to connect to AssemblyAI: %w", err)
	}

	c.conn = conn
	return nil
}

func (c *AssemblyAIClient) SendAudio(audioData []byte) error {
	// For Universal-Streaming, send raw binary audio data directly
	return c.conn.WriteMessage(websocket.BinaryMessage, audioData)
}

func (c *AssemblyAIClient) ReadMessage() (interface{}, error) {
	_, message, err := c.conn.ReadMessage()
	if err != nil {
		return nil, err
	}

	// Parse the message type first
	var baseMsg map[string]interface{}
	if err := json.Unmarshal(message, &baseMsg); err != nil {
		return nil, err
	}

	msgType, ok := baseMsg["type"].(string)
	if !ok {
		return nil, fmt.Errorf("message type not found")
	}

	// Parse based on message type
	switch msgType {
	case "Begin":
		var msg BeginMessage
		if err := json.Unmarshal(message, &msg); err != nil {
			return nil, err
		}
		return &msg, nil
	case "Turn":
		var msg TurnMessage
		if err := json.Unmarshal(message, &msg); err != nil {
			return nil, err
		}
		return &msg, nil
	case "Termination":
		var msg TerminationMessage
		if err := json.Unmarshal(message, &msg); err != nil {
			return nil, err
		}
		return &msg, nil
	default:
		return nil, fmt.Errorf("unknown message type: %s", msgType)
	}
}

func (c *AssemblyAIClient) SendTerminate() error {
	msg := TerminateMessage{
		Type: "Terminate",
	}
	return c.conn.WriteJSON(msg)
}

func (c *AssemblyAIClient) Close() error {
	if c.conn != nil {
		// Send terminate message before closing
		c.SendTerminate()
		time.Sleep(100 * time.Millisecond) // Give time for message to send
		return c.conn.Close()
	}
	return nil
}
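ReadMessage decodes each payload twice: once to find the "type" field, then again into the matching struct. The first pass can also be done with a small envelope struct instead of a generic map, as in the sketch below. The envelope type and messageType function are hypothetical names, and the sample payload is made up using field names from the TurnMessage struct above.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// envelope captures only the "type" field shared by every message.
type envelope struct {
	Type string `json:"type"`
}

// messageType returns the value of the "type" field in raw.
// Hypothetical helper; not part of the tutorial's client.
func messageType(raw []byte) (string, error) {
	var env envelope
	if err := json.Unmarshal(raw, &env); err != nil {
		return "", err
	}
	if env.Type == "" {
		return "", fmt.Errorf("message type not found")
	}
	return env.Type, nil
}

func main() {
	// Made-up payload for illustration.
	raw := []byte(`{"type":"Turn","transcript":"hey jarvis","end_of_turn":true}`)
	t, err := messageType(raw)
	fmt.Println(t, err) // prints: Turn <nil>
}
```

Unknown fields in the payload are ignored by json.Unmarshal, so the envelope stays valid even if a message carries fields the client does not model.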

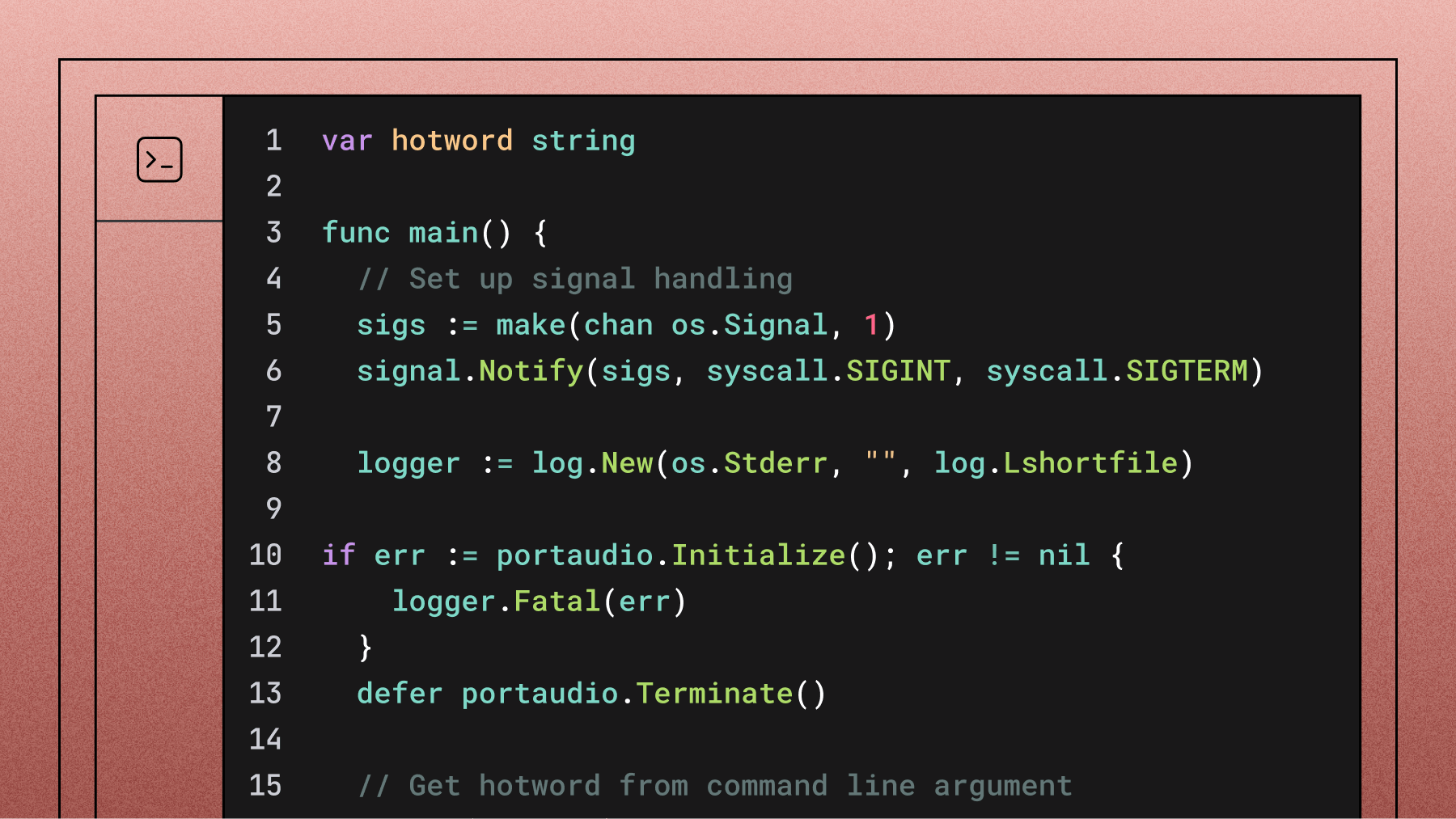

Implement the main application logic

Now create the main application file main.go:

package main

import (
	"context"
	"fmt"
	"log"
	"os"
	"os/signal"
	"strings"
	"syscall"
	"time"

	"github.com/gordonklaus/portaudio"
)

var hotword string

func main() {
	// Set up signal handling
	sigs := make(chan os.Signal, 1)
	signal.Notify(sigs, syscall.SIGINT, syscall.SIGTERM)

	logger := log.New(os.Stderr, "", log.Lshortfile)

	// Initialize PortAudio
	if err := portaudio.Initialize(); err != nil {
		logger.Fatal(err)
	}
	defer portaudio.Terminate()

	// Get hotword from command line argument
	if len(os.Args) < 2 {
		logger.Fatal("Usage: go run . <hotword>")
	}
	hotword = os.Args[1]
	fmt.Printf("Listening for hotword: %s\n", hotword)

	// Get default audio device configuration
	device, err := portaudio.DefaultInputDevice()
	if err != nil {
		logger.Fatal(err)
	}

	var (
		apiKey          = os.Getenv("ASSEMBLYAI_API_KEY")
		sampleRate      = int(device.DefaultSampleRate)
		framesPerBuffer = int(0.05 * float64(sampleRate)) // 50ms of audio (recommended for v3)
	)

	if apiKey == "" {
		logger.Fatal("ASSEMBLYAI_API_KEY environment variable is required")
	}

	// Create AssemblyAI client and connect with sample rate
	client := NewAssemblyAIClient(apiKey)
	if err := client.Connect(sampleRate); err != nil {
		logger.Fatalf("Failed to connect to AssemblyAI: %v", err)
	}
	defer client.Close()

	// Create audio recorder
	rec, err := newRecorder(sampleRate, framesPerBuffer)
	if err != nil {
		logger.Fatalf("Failed to create recorder: %v", err)
	}
	defer rec.Close()

	if err := rec.Start(); err != nil {
		logger.Fatalf("Failed to start recording: %v", err)
	}

	// Start goroutine to handle transcript responses
	ctx, cancel := context.WithCancel(context.Background())
	defer cancel()

	go handleTranscripts(ctx, client, logger)

	// Main audio processing loop
	go func() {
		ticker := time.NewTicker(50 * time.Millisecond) // 50ms intervals
		defer ticker.Stop()

		for {
			select {
			case <-ctx.Done():
				return
			case <-ticker.C:
				audioData, err := rec.Read()
				if err != nil {
					logger.Printf("Error reading audio: %v", err)
					continue
				}

				if err := client.SendAudio(audioData); err != nil {
					logger.Printf("Error sending audio: %v", err)
					continue
				}
			}
		}
	}()

	// Wait for termination signal
	<-sigs
	fmt.Println("\nStopping recording...")
	cancel()

	if err := rec.Stop(); err != nil {
		logger.Printf("Error stopping recorder: %v", err)
	}
}

func handleTranscripts(ctx context.Context, client *AssemblyAIClient, logger *log.Logger) {
	for {
		select {
		case <-ctx.Done():
			return
		default:
			msg, err := client.ReadMessage()
			if err != nil {
				// Read errors on a WebSocket connection are permanent,
				// so stop here instead of retrying in a tight loop.
				if ctx.Err() == nil {
					logger.Printf("Error reading message: %v", err)
				}
				return
			}

			switch m := msg.(type) {
			case *BeginMessage:
				expiresTime := time.Unix(int64(m.ExpiresAt), 0)
				fmt.Printf("✅ Session started - ID: %s, Expires: %s\n", m.ID, expiresTime.Format(time.RFC3339))
			case *TurnMessage:
				if m.TurnIsFormatted {
					// Clear line and print formatted transcript
					fmt.Printf("\r%s\r", strings.Repeat(" ", 80))
					fmt.Printf("%s\n", m.Transcript)

					// Check for hotword in formatted transcript
					if detectHotword(m.Transcript, hotword) {
						fmt.Println("🎯 Hotword detected! I am here!")
						// Add your custom logic here for when hotword is detected
					}
				} else {
					// Print partial transcript with carriage return
					fmt.Printf("\r%s", m.Transcript)
				}
			case *TerminationMessage:
				fmt.Printf("\n❌ Session terminated - Audio: %ds, Session: %ds\n", m.AudioDurationSeconds, m.SessionDurationSeconds)
				return
			}
		}
	}
}

func detectHotword(text, hotword string) bool {
	return strings.Contains(strings.ToLower(text), strings.ToLower(hotword))
}
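Because detectHotword uses a substring check, a short hotword such as "art" would also match inside "start". If that matters for your hotword, one possible variant is to split the transcript into words and compare each one. The sketch below is an assumption about how you might do this, not part of the tutorial's code; the name containsWord is hypothetical, and it only handles single-word hotwords.

```go
package main

import (
	"fmt"
	"strings"
	"unicode"
)

// containsWord reports whether hotword appears in text as a whole word,
// ignoring case and punctuation. It handles single-word hotwords only.
func containsWord(text, hotword string) bool {
	// Split on anything that is not a letter or digit.
	words := strings.FieldsFunc(text, func(r rune) bool {
		return !unicode.IsLetter(r) && !unicode.IsDigit(r)
	})
	for _, w := range words {
		if strings.EqualFold(w, hotword) {
			return true
		}
	}
	return false
}

func main() {
	fmt.Println(containsWord("Hey, Jarvis!", "jarvis")) // true
	fmt.Println(containsWord("Let's start", "art"))     // false
}
```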

Run the application

Let's see the results of your work in action by running the code. Open up the terminal, cd into the project directory, and set up your AssemblyAI API key as an environment variable:

export ASSEMBLYAI_API_KEY='your_api_key_here'

Note: Replace your_api_key_here with your actual AssemblyAI API key.

Finally, run this command:

go run . Jarvis

This will set Jarvis as the hotword, and the code will output "🎯 Hotword detected! I am here!" whenever it detects "Jarvis" in the transcribed speech.

Understanding the code structure

Your final project should have the following structure:

jarvis/
├── go.mod
├── go.sum
├── main.go
├── recorder.go
└── client.go

How it works:

- Audio Recording: The recorder.go file handles capturing audio from your microphone using PortAudio

- WebSocket Client: The client.go file manages the direct WebSocket connection to AssemblyAI's streaming API

- Main Logic: The main.go file coordinates everything, sending audio data and processing transcript responses

- Hotword Detection: A case-insensitive substring match checks for your chosen hotword in each formatted transcript

Key features:

- Real-time transcription: Audio is processed and transcribed in real-time

- Hotword detection: Automatically detects when your chosen word is spoken

- Graceful shutdown: Properly handles termination signals and cleans up resources

- Error handling: Errors from the audio device and the WebSocket connection are checked and logged

Important considerations

Pricing

- Universal-Streaming: $0.15 per hour (newer model optimized for voice agents)

- Billing is based on total session duration (connection time), not just audio processing time
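As a back-of-the-envelope sketch, the hourly rate above can be turned into a per-session estimate. The function name sessionCost is hypothetical, and the calculation assumes simple pro-rata billing on session duration; actual invoices may round differently, and the rate itself may change.

```go
package main

import "fmt"

// ratePerHour is the Universal-Streaming price quoted above, in USD.
const ratePerHour = 0.15

// sessionCost estimates the cost in USD of a session of the given length,
// assuming pro-rata billing on connection time.
func sessionCost(sessionSeconds int) float64 {
	return float64(sessionSeconds) / 3600 * ratePerHour
}

func main() {
	// A 30-minute connection, regardless of how much speech it contained.
	fmt.Printf("$%.4f\n", sessionCost(1800)) // prints: $0.0750
}
```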

Language Support

AssemblyAI's Universal-Streaming Speech-to-Text currently supports English only. If you need multilingual support, consider using the pre-recorded transcription API instead.

Performance Tips

- Use audio chunks of ~50ms for optimal latency. Larger chunk sizes are workable, but may result in latency fluctuations.

- Monitor your connection for stability in production environments

- Consider implementing reconnection logic for robust applications
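One common way to approach reconnection is to retry the connect step with exponential backoff. The sketch below is a generic illustration under that assumption: retryWithBackoff and errTemporary are hypothetical names, and the fake connect function stands in for a call such as client.Connect(sampleRate).

```go
package main

import (
	"errors"
	"fmt"
	"time"
)

// errTemporary is a placeholder error used by the demo below.
var errTemporary = errors.New("temporary failure")

// retryWithBackoff calls connect until it succeeds or attempts run out,
// doubling the wait after each failure. Hypothetical helper.
func retryWithBackoff(connect func() error, attempts int, initial time.Duration) error {
	if attempts < 1 {
		return errors.New("attempts must be at least 1")
	}
	var err error
	wait := initial
	for i := 0; i < attempts; i++ {
		if err = connect(); err == nil {
			return nil
		}
		if i < attempts-1 {
			time.Sleep(wait)
			wait *= 2
		}
	}
	return fmt.Errorf("giving up after %d attempts: %w", attempts, err)
}

func main() {
	calls := 0
	// Stand-in for a real connect call: fails twice, then succeeds.
	fakeConnect := func() error {
		calls++
		if calls < 3 {
			return errTemporary
		}
		return nil
	}
	err := retryWithBackoff(fakeConnect, 5, 10*time.Millisecond)
	fmt.Println(calls, err) // prints: 3 <nil>
}
```

In a real application you would also need to create a fresh connection for each attempt and restart the goroutine that reads transcripts, since a failed WebSocket connection cannot be reused.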

Next steps

Now that you have a working hotword detection system, you can extend it by:

- Adding custom responses: Implement different actions based on detected hotwords

- Adding wake word sensitivity: Implement confidence thresholds to reduce false positives

- Building voice commands: Extend beyond hotwords to full voice command recognition
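For the confidence-threshold idea, one possible starting point is the per-word confidence value that the Word struct already exposes. The sketch below redefines a trimmed-down Word type so it runs on its own; the function name hotwordAbove and the threshold values are assumptions chosen for illustration, so you would need to tune them against real transcripts.

```go
package main

import (
	"fmt"
	"strings"
)

// Word mirrors the two fields of the tutorial's Word struct used here.
type Word struct {
	Text       string
	Confidence float64
}

// hotwordAbove reports whether any word matches hotword (ignoring case and
// surrounding punctuation) with a confidence of at least minConf.
func hotwordAbove(words []Word, hotword string, minConf float64) bool {
	for _, w := range words {
		text := strings.Trim(w.Text, ".,!?;:\"'")
		if strings.EqualFold(text, hotword) && w.Confidence >= minConf {
			return true
		}
	}
	return false
}

func main() {
	words := []Word{
		{Text: "Hey", Confidence: 0.99},
		{Text: "Jarvis,", Confidence: 0.62},
	}
	fmt.Println(hotwordAbove(words, "jarvis", 0.8)) // false: below the threshold
	fmt.Println(hotwordAbove(words, "jarvis", 0.5)) // true
}
```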

Conclusion

In this tutorial, you learned how to create a hotword detection application using AssemblyAI's Universal-Streaming Speech-to-Text API with direct WebSocket connections. You saw how PortAudio makes it easy to capture audio from a microphone and how AssemblyAI's powerful speech recognition enables real-time voice interaction.

AssemblyAI's Universal-Streaming Speech-to-Text API provides the foundation for building sophisticated voice applications. Whether you're developing voice assistants, implementing voice commands, or creating accessibility solutions, AssemblyAI's speech recognition capabilities offer the accuracy and performance needed for production applications.

With its industry-leading accuracy and streaming capabilities, AssemblyAI empowers developers to create innovative voice-enabled experiences that feel natural and responsive.
