It's no secret that access to pre-trained Deep Learning models is crucial to contemporary Deep Learning applications. With state-of-the-art models growing into the trillions of parameters, it no longer makes sense to train advanced models from scratch in many areas, especially fields like Automatic Speech Recognition.

Given the importance of pre-trained Deep Learning models, it is worth asking which Deep Learning framework, PyTorch or TensorFlow, makes more of these models available to users.

In this article, we'll explore this topic quantitatively so you can stay informed about the current state of the Deep Learning landscape.

Why Do Pre-trained Deep Learning Models Matter?

Especially for intricate applications like Natural Language Processing, where the size and complexity of models hinder the engineering and optimization process, building state-of-the-art (SOTA) models from scratch is out of reach for all but the largest organizations with the largest budgets.

OpenAI's paradigm-shifting GPT-3 has 175 billion parameters; and, if that weren't enough, its successor is rumored to be orders of magnitude larger, with some reports claiming that GPT-4 will have over 100 trillion parameters.

Because of this growth in model size, smaller ventures rely on pre-trained Deep Learning models in their workflows, whether for out-of-the-box inference, fine-tuning, or transfer learning.

In the arena of model availability, PyTorch and TensorFlow diverge sharply. Both frameworks have their own official model repositories, which we explore below in the Official Resources sections, but practitioners may also want to use models from other sources. Let's take a quantitative look at model availability for both Deep Learning frameworks to see how they compare.

HuggingFace

The demand for access to pre-trained Deep Learning models is evident from the meteoric rise of HuggingFace, which recently raised $40 million in a Series B funding round. HuggingFace's popularity and rapid growth can be attributed to its ease of use: it provides access to SOTA models in just a few lines of code.

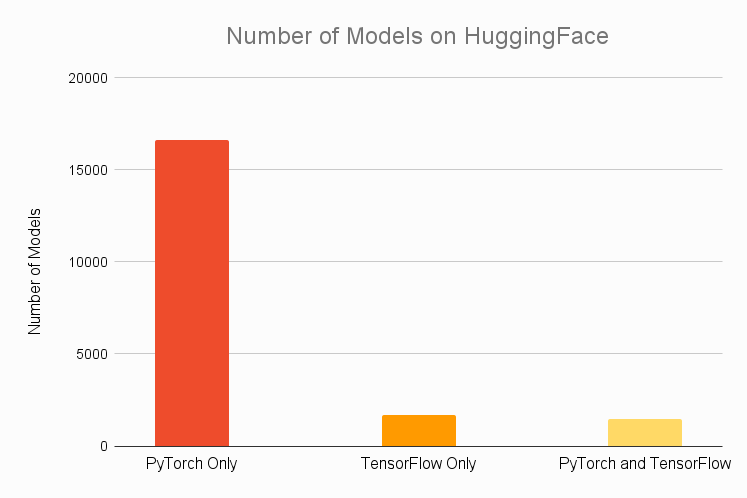

When we break down both the total number of models and the most popular models on HuggingFace by framework (PyTorch or TensorFlow), we begin to see a trend that will continue throughout our discussion.

Total Models

Below you can see a graph comparing the total number of models on HuggingFace by framework. Even a cursory look at this graph makes it clear which framework leads on this front.

Key takeaways:

1. Almost 85% of HuggingFace models are PyTorch exclusive

2. In contrast, only about 8% of models are TensorFlow exclusive

3. Only about 16% of all models are available in TensorFlow

4. Of the roughly 16% of models that are not PyTorch exclusive, about half are still available in PyTorch
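The four takeaways above are linked by simple set arithmetic. The sketch below assumes that every model is PyTorch-only, TensorFlow-only, or available in both, ignoring checkpoints in other frameworks such as JAX, and, purely for illustration, reads "almost 85%" as 84%. Neither assumption comes from the underlying HuggingFace data, and `framework_breakdown` is a hypothetical helper. Under these assumptions, the models that are not PyTorch exclusive are exactly the models available in TensorFlow, which is why takeaways 3 and 4 share the same ~16% figure.

```python
def framework_breakdown(pytorch_only_pct: float, tensorflow_only_pct: float) -> dict:
    """Derive the remaining availability shares (in percent) from the two exclusive shares.

    Assumption: every model is PyTorch-only, TensorFlow-only, or available in both;
    models in other frameworks (e.g. JAX-only checkpoints) are ignored.
    """
    both = 100.0 - pytorch_only_pct - tensorflow_only_pct
    if both < 0:
        raise ValueError("exclusive shares cannot add up to more than 100%")

    # Under the assumption above, "not PyTorch exclusive" and "available in
    # TensorFlow" describe the same set of models.
    not_pytorch_exclusive = tensorflow_only_pct + both
    return {
        "both": both,
        "available_in_tensorflow": tensorflow_only_pct + both,
        "not_pytorch_exclusive": not_pytorch_exclusive,
        # Fraction of the non-exclusive models that still ship PyTorch weights.
        "pytorch_share_of_rest": (
            both / not_pytorch_exclusive if not_pytorch_exclusive else 0.0
        ),
    }


# Illustrative reading of the takeaways: "almost 85%" as 84%, "about 8%" as 8%.
breakdown = framework_breakdown(84, 8)
print(breakdown)
# → both = 8.0, available_in_tensorflow = 16.0, pytorch_share_of_rest = 0.5
```

With these inputs, the sketch reproduces takeaways 3 and 4: about 16% of models are available in TensorFlow, and half of those also ship PyTorch weights.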

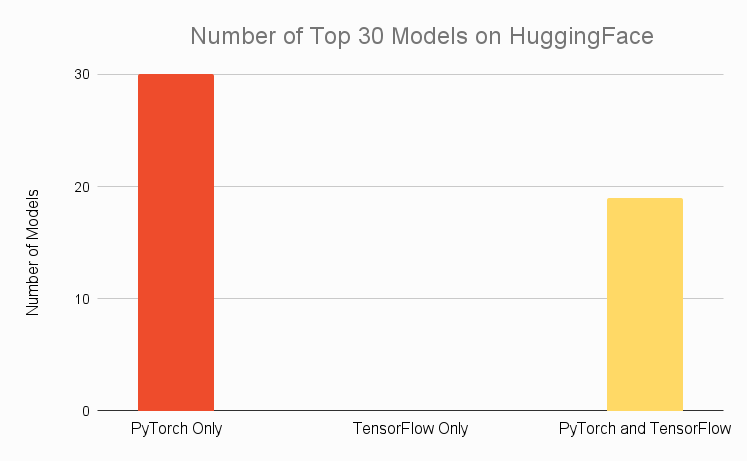

Top Models

To play devil's advocate, one might argue that, although far more models are available in PyTorch, the best models are surely still available in both frameworks, and that PyTorch's numbers are padded by thousands of low-quality uploads because so many beginners choose PyTorch.

It's a fair point, so let's test this argument against the numbers by considering only the 30 most popular models on HuggingFace.

We find that the above argument does not hold up.

The key takeaways from the graph are that:

- All of the top 30 models are available in PyTorch

- Not even two-thirds of the top 30 models are available in TensorFlow

- None of the top 30 models are TensorFlow exclusive
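To check the devil's-advocate argument yourself, you would tag each of the top models with the frameworks it ships weights for and then count. The sketch below is a hypothetical helper, not part of any HuggingFace API: the input mapping is something you would assemble yourself, for example from each model's listed framework tags. It also makes the "not even two-thirds" threshold concrete: two-thirds of 30 is 20, so the claim means that at most 19 of the top 30 models are available in TensorFlow.

```python
def summarize_top_models(models: dict[str, set[str]]) -> dict[str, int]:
    """Count framework availability across a list of top models.

    `models` maps a model name to the set of frameworks it has weights for,
    e.g. {"pytorch"} or {"pytorch", "tensorflow"} (hypothetical input format).
    """
    tags = list(models.values())
    return {
        "total": len(tags),
        "in_pytorch": sum("pytorch" in fw for fw in tags),
        "in_tensorflow": sum("tensorflow" in fw for fw in tags),
        # "TensorFlow exclusive" here means: TensorFlow weights but no PyTorch weights.
        "tensorflow_exclusive": sum(
            "tensorflow" in fw and "pytorch" not in fw for fw in tags
        ),
    }


def below_two_thirds(count: int, total: int) -> bool:
    """True if count / total < 2/3, compared in integers to avoid float rounding."""
    return 3 * count < 2 * total


# Two-thirds of 30 is 20, so "not even two-thirds" means 19 or fewer.
print(below_two_thirds(19, 30), below_two_thirds(20, 30))  # → True False
```

Given the framework tags of the actual top 30 models, the three bullets above correspond to `in_pytorch == 30`, `below_two_thirds(in_tensorflow, 30)`, and `tensorflow_exclusive == 0`.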

Conclusion

PyTorch dominates the pre-trained Deep Learning model landscape on HuggingFace.

Papers with Code

Another popular source for Deep Learning models is Papers with Code, a website whose mission is to create a free and open resource of Machine Learning papers, code, datasets, and more.

Papers over Time

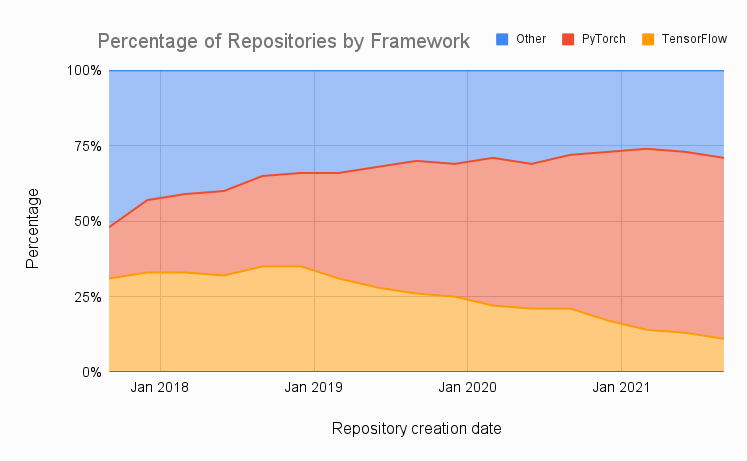

If we look at a plot of the percentage of papers with associated repositories that use PyTorch, TensorFlow, or another framework over time, we observe a steadily growing fraction of papers that use PyTorch.

Conversely, we see a continuing, near-monotonic decline in TensorFlow's share, even after the release of TensorFlow 2 in 2019.
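The over-time plot is essentially a per-quarter share calculation. The sketch below assumes you already have one (quarter, framework) record per repository, with quarters written as strings like "2021-Q4" so that they sort chronologically. The record format and the `framework_shares_by_quarter` helper are hypothetical and are not the Papers with Code API. Anything other than PyTorch or TensorFlow is bucketed as "other", mirroring the three categories in the plot.

```python
from collections import Counter, defaultdict
from collections.abc import Iterable

# Frameworks that get their own line in the plot; everything else is "other".
TRACKED = ("pytorch", "tensorflow")


def framework_shares_by_quarter(
    records: Iterable[tuple[str, str]],
) -> dict[str, dict[str, float]]:
    """Return {quarter: {framework: share}} from (quarter, framework) records."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for quarter, framework in records:
        label = framework.lower()
        # Collapse every untracked framework into a single "other" bucket.
        counts[quarter][label if label in TRACKED else "other"] += 1

    shares = {}
    for quarter in sorted(counts):  # "YYYY-Qn" strings sort chronologically
        total = sum(counts[quarter].values())
        shares[quarter] = {fw: n / total for fw, n in counts[quarter].items()}
    return shares
```

For example, two PyTorch records and one TensorFlow record for "2021-Q4" would give that quarter shares of about 0.67 and 0.33. Plotting each framework's share across the sorted quarters yields a chart of the kind described above.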

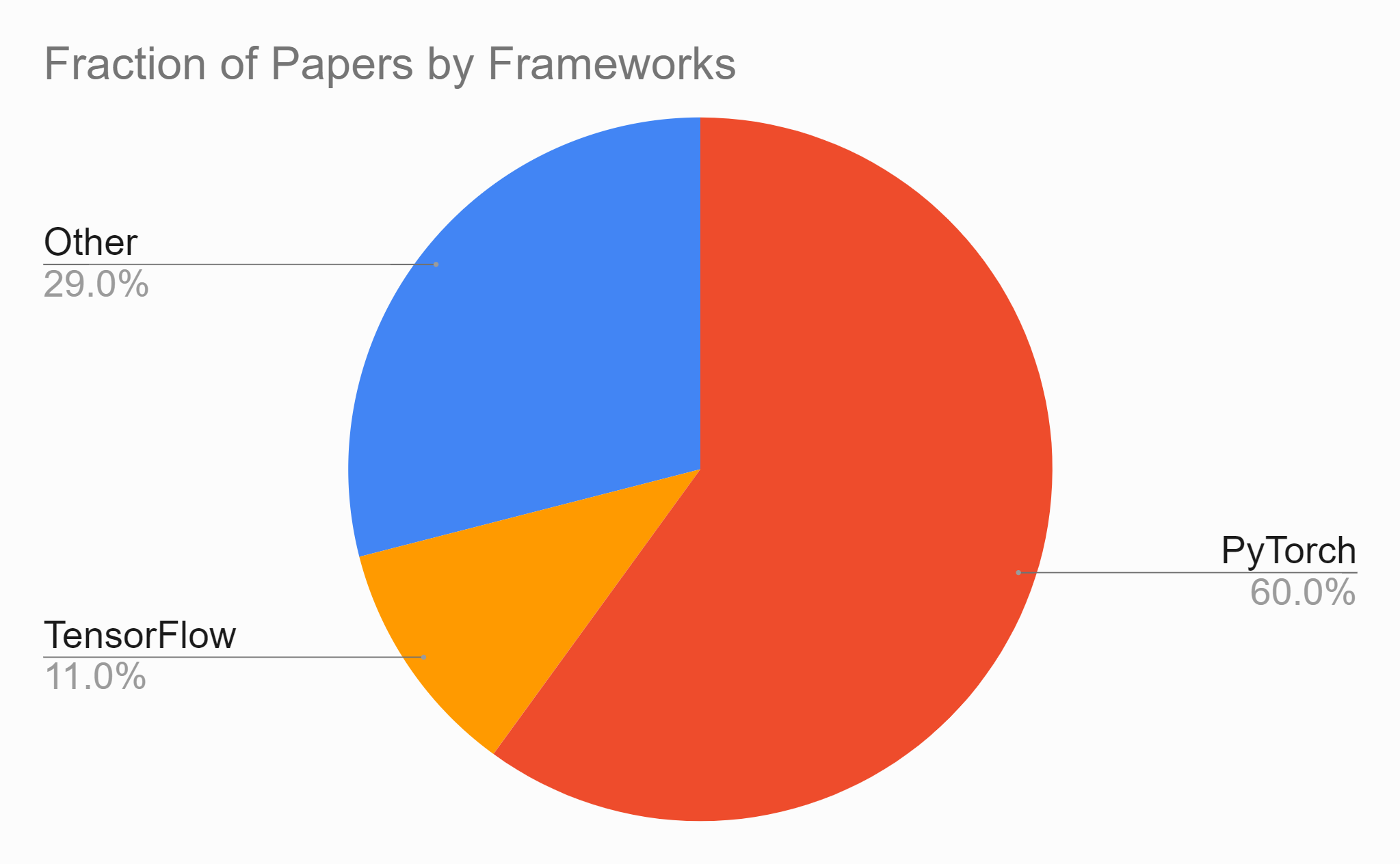

Fraction of Papers Last Quarter

If we consider just the most recent quarter of data, Q4 2021, in which 4,500 repositories were created, we find that 60% of them are implemented in PyTorch, with just 11% implemented in TensorFlow.
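Turning the shares back into approximate counts makes the gap easier to picture. This is only back-of-the-envelope arithmetic on the figures quoted above, and `approximate_counts` is a hypothetical helper. Because 60% and 11% are rounded percentages, the resulting counts are approximate.

```python
def approximate_counts(total: int, percentages: dict[str, int]) -> dict[str, int]:
    """Convert whole-number percentage shares of `total` into approximate counts."""
    # Integer arithmetic keeps the results exact, with no floating-point rounding.
    return {name: total * pct // 100 for name, pct in percentages.items()}


q4_2021 = approximate_counts(4500, {"pytorch": 60, "tensorflow": 11})
print(q4_2021)  # → {'pytorch': 2700, 'tensorflow': 495}
print(round(q4_2021["pytorch"] / q4_2021["tensorflow"], 2))  # → 5.45
```

In other words, roughly 2,700 new PyTorch repositories versus roughly 495 TensorFlow ones in that quarter, a ratio of about 5.5 to 1.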

Conclusion

When considering the availability of Deep Learning models on Papers with Code, we see another clear victory for PyTorch. Note that not all of these papers offer pre-trained models; we simply use the aggregate to illustrate the general trend.

PyTorch Official Resources

Beyond HuggingFace and Papers with Code, PyTorch has several official sources for pre-trained Deep Learning models.

Hub

PyTorch Hub is a research-oriented platform for sharing repositories with pre-trained models that cover a wide range of applications.

TorchVision

TorchVision is PyTorch's official Computer Vision library and includes a selection of pre-trained Deep Learning models. It also provides other building blocks for Computer Vision projects, such as model architectures and popular datasets.

For more pre-trained vision models, you can check out TIMM (pyTorch IMage Models).

TorchText

If your area of expertise is Natural Language Processing rather than Computer Vision, then you might want to check out TorchText. It contains a few pre-trained Deep Learning models, including an instance of RoBERTa. It also contains datasets frequently seen in the NLP domain, as well as data processing utilities to operate on these and other datasets.

SpeechBrain

SpeechBrain is an open-source speech toolkit for PyTorch. It supports Automatic Speech Recognition (ASR), speaker diarization, and more, and comes with a number of pre-trained Deep Learning models.

TensorFlow Official Resources

TensorFlow, just like PyTorch, has several official sources for pre-trained Deep Learning models.

Hub

TensorFlow Hub is a repository of trained Machine Learning models ready for fine-tuning. This resource lets you use a large Deep Learning model like BERT with just a few lines of code.

The models in Hub cover a wide range of use cases, spanning the image, video, audio, and text problem domains. Models are available in TensorFlow, TensorFlow Lite, and TensorFlow.js formats. You can see a list of the available models here.

MediaPipe

MediaPipe is a framework for building multimodal, cross-platform applied Machine Learning pipelines. MediaPipe offers pre-trained models that can be used for face detection, multi-hand tracking, object detection, and more.

Final Words

While both frameworks have their share of official tools for distributing pre-trained Deep Learning models, our analysis above shows that PyTorch dominates most, if not all, of the third-party model sources we examined. Given PyTorch's ever-growing popularity and its Pythonic, easy-to-use approach to Deep Learning, we expect this trend to continue, at least in the near future.

PyTorch beats out TensorFlow on this front, but the question of which framework is better overall is quite nuanced, and most of the information on the subject is outdated. You can check out this analysis for an up-to-date, in-depth look at when each framework should be used.