Model Context Protocol (MCP) - What it is, how it works, and why it matters

Learn what the Model Context Protocol (MCP) is and why it matters to AI agents

The Model Context Protocol is a new standard introduced by Anthropic to let AI agents interact with tools and services in a standardized way. In this post, I'll explain what the Model Context Protocol is, how it works, and why it matters to AI agents.

I'll provide illustrated, functional code examples to show how we have evolved from bare LLMs to AI agents, and why the Model Context Protocol is a necessary step in this evolution towards an agentic future. This post is suitable for both technical and non-technical audiences, so read on for a complete overview of MCP.

What is the Model Context Protocol?

First, what actually is Anthropic's MCP? We'll start with a brief explainer now for overall context, but hold off on a more detailed look until later when we have a better understanding of why MCP was even developed in the first place, and how it relates to the progression towards an agentic economy.

The Model Context Protocol is exactly that - a protocol. Protocols standardize the way things are done and allow disparate groups of people to build systems in an interoperable way. Of course, standards are not unique to technology and have existed for a very long time in engineering. Screw sizes and threadings are standardized so that if your desk loses a screw you can just go to the hardware store and buy a new one - you don't have to call a machinist to make you a custom screw. This concept of interchangeable parts is crucial for efficiency and consistency, and is a large part of the reason for the compounding returns on production we've seen since the industrial era.

MCP is a standard for how LLM-based AI systems interact with external resources and tools. AI agents can already interact with a variety of systems, including local storage, remote databases and knowledge bases, remote tools like AssemblyAI's API, and other remote resources. Today, this means that for each service we have to build a custom "bridge" that lets the AI agent interact with it, represented by the different shapes in the figure below.

This approach means that every time we want to give an agent the ability to reliably perform some task or access some external resource, we have to build such an adapter. MCP eliminates this issue by standardizing the interface between AI agents and the tools and resources they need to be useful:

So MCP is like USB-C for AI agents. USB-C, which you've surely seen in a growing number of places in recent years, is a standard interface for both data and power transfer. Its flexibility and generality have allowed it to become ubiquitous in modern devices. This is why MCP is a big deal. We want AI agents that can perform complicated, useful tasks, and it has become apparent in recent years that these will not be monolithic models: they will be networks of interconnected models, resources, and tools. Standardizing the interface between these components means that developers, researchers, and builders can focus on designing the functional pieces of these networks, rather than the nitty-gritty "wiring" of the networks themselves.

Perhaps more important than its design, USB-C is valuable because it's actually being adopted. Standards are only useful if they are widely adopted, which is another reason MCP is exciting: developers and companies are adopting it rapidly. Anthropic introduced MCP relatively recently, just six months ago in November 2024, and OpenAI's recent adoption of MCP has contributed significantly to its overall growth. We can see this in the sharp growth in attention the MCP servers repository has received recently:

So, ultimately, all of this means that we can use the growing MCP standard to build agentic AI systems that are interoperable and composable, allowing us to rapidly build agents that leverage the ecosystem of interconnected digital resources and services we have been building for decades.

Below we'll see what this means in practice and how to actually build using MCP. But first, to truly understand the value of MCP, let's take a look at how we got to MCP from a meta-perspective.

Level 0: Bare LLMs

LLMs have been in the public eye for barely two years, but have already had a substantial impact on the way we work and live. For a thorough history of how LLMs developed, see our overview and history of LLMs. Below I'll quickly recap only the important points which are relevant to the discussion of MCP.

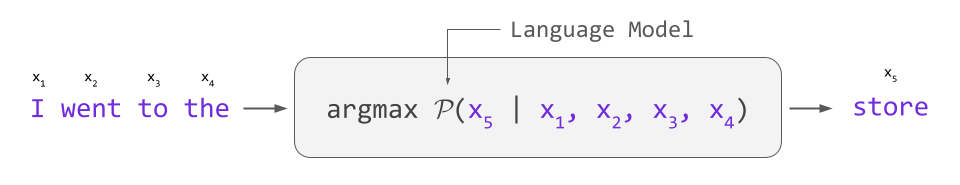

LLMs are fundamentally probabilistic systems. They are trained on massive amounts of text and, through this, learn common patterns in language. This means that they can generate text that is coherent and relevant to the input they receive, for example by finishing a sentence:

This makes LLMs really good at certain things like drafting emails or creative writing, where the goal is to produce coherent, relevant text. However, people quickly learned that we can get LLMs to "do" other tasks simply by asking. That is, just by phrasing a task in terms of natural language, we can get LLMs to do other things, like translate between languages:

These abilities, the so-called "Emergent Abilities of LLMs", came as a surprise. Very quickly, people started attempting to use LLMs for a variety of different tasks, benchmarking their performance, and debating what metrics should be used to measure this sort of general competence.

However, it quickly became apparent that performance varied drastically across tasks. For tasks that require high precision and reliable data processing, LLMs were not suitable. Even for many tasks that did not require high precision but required several steps, LLMs were not a reliable choice. For example, below I've asked Claude to subtract 201122 from 316043, and then calculate the square root of the result. You can find the script in this article's GitHub repo here. The answer to this problem is 339.

```python
import os

import anthropic

client = anthropic.Anthropic(
    api_key=os.environ.get("ANTHROPIC_API_KEY"),
)

# We give this calculation to the LLM, where the true answer is 339
PROMPT = (
    "find 201122 removed from 316043 and then find the square root of the result. "
    "Return this value **only** with no other words."
)

response = client.messages.create(
    model="claude-3-7-sonnet-20250219",
    max_tokens=1024,
    messages=[{"role": "user", "content": PROMPT}],
)

# response.content is a list of content blocks; the answer is the first block's text
print(response.content[0].text)
```

Here's Claude's response:

```
114.9195780204327
```

The answer is incorrect and, worse yet, if you repeat the question, you can get a different answer each time. This is completely unsurprising given the probabilistic nature of LLMs, but frustrating to developers and researchers who thought that "smart" enough LLMs could effectively be a panacea for a huge range of problems.

LLMs may be good at simple, "targeted" tasks, but they break down once the complexity reaches a certain threshold. On their own, this unreliability rules them out for serious applications. We use computers for their precision and reliability, and LLMs offer neither (at least for many problems of a complexity that is relevant to humans).
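For contrast, here is the same two-step calculation done directly in Python. It is trivial, but it makes the point concrete: ordinary code returns the same, correct result every time it runs. The helper name reference_answer is just for illustration.

```python
import math


def reference_answer() -> int:
    """Compute sqrt(316043 - 201122) deterministically."""
    difference = 316043 - 201122   # 114921
    root = math.isqrt(difference)  # exact integer square root
    # The difference is a perfect square, so the integer root is exact
    assert root * root == difference
    return root


# Running it any number of times yields one and only one answer
results = {reference_answer() for _ in range(1000)}
print(results)  # {339}
```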

So where did we go from there?

Level 1: LLMs + Tools

At first researchers tried to "brute force" the problem by simply continuing the trend that made LLMs successful in the first place - scaling. LLMs are generally performant because they are huge - they have billions of parameters and are trained on enormous datasets. It is precisely through this scaling process from (small) Language Models to Large Language Models that LLMs were observed to gain many useful skills, so it seemed reasonable that scaling the models to be even larger would yield even better results.

However, it soon became clear that this scaling trend could not continue indefinitely and was not the way forward towards more competent and useful general AI. The inherently probabilistic nature of LLMs was a fundamental limitation, not something that could be "scaled away", as argued by AI leaders such as Yann LeCun.

So, LLMs cannot do everything we need on their own, but why should they have to? Over decades we have built up a massive ecosystem of digital technology (standards, libraries, frameworks, and infrastructure) that already allows computers to accomplish much of what we need and has transformed the way we live. Why not let LLMs leverage this preexisting work?

The idea is to give LLMs access to this functionality so that, by composing the available "tools", an LLM can solve complicated problems it cannot solve on its own. In this way, all the LLM needs to do is determine what needs to be done, not how to actually do it.

Here is an example that shows how we can get the LLM to answer the question from the last section reliably by giving it access to simple arithmetic "tools". These tools are thin wrappers around the arithmetic operations of the programming language from which we call the LLM (Python in this case).

First we define the tools (functions), and then create a mapping from the tools' names to the actual functions themselves. This will make it easier to call them later on. Again, don't be worried if you don't understand the syntax - just understand the overall concept.

```python
def subtract(a: int, b: int) -> int:
    return a - b

def sqrt(num: int) -> float:
    return num**0.5

# Map each tool's name (as the LLM will refer to it) to the Python function
TOOL_MAPPING = {
    "subtract": subtract,
    "sqrt": sqrt,
}
```

Now we cast this information as a list of tools in the format the LLM expects, specifying the name of each tool, what it does, and the inputs it requires.

```python
TOOLS = [
    {
        "name": "subtract",
        "description": "Calculate the difference between `a` and `b`",
        "input_schema": {
            "type": "object",
            "properties": {
                "a": {
                    "type": "number",
                    "description": "The first operand",
                },
                "b": {
                    "type": "number",
                    "description": "The second operand; the number to be subtracted",
                },
            },
            "required": ["a", "b"],
        },
    },
    {
        "name": "sqrt",
        "description": "Find the square root of an integer",
        "input_schema": {
            "type": "object",
            "properties": {
                "num": {
                    "type": "number",
                    "description": "The operand to find the square root of",
                },
            },
            "required": ["num"],
        },
    },
]
```

We ask Claude the same question as above, this time passing tools=TOOLS in the request, which tells Claude that it can use these tools when generating its response.

```python
import os

import anthropic

client = anthropic.Anthropic(
    api_key=os.environ.get("ANTHROPIC_API_KEY"),
)

PROMPT = (
    "find 201122 removed from 316043 and then find the square root of the result. "
    "Return this value only (nothing else)."
)

initial_response = client.messages.create(
    model="claude-3-7-sonnet-20250219",
    max_tokens=1024,
    tools=TOOLS,  # the tool definitions from above
    messages=[{"role": "user", "content": PROMPT}],
)

print(initial_response.content)
```

Now Claude doesn't just return text; it returns text and a request to use a tool. We can see in the text that Claude now plans out how to solve the problem, knowing it first needs to subtract. It then delegates this task to our program, providing the name of the tool to call and the inputs to pass into it.

```
[TextBlock(citations=None, text="I need to find the difference between 316043 and 201122, and then calculate the square root of that result. I'll use the appropriate tools to do this.", type='text'),
 ToolUseBlock(id='toolu_01Vq1MnteDMJVEcJoLpTqupe', input={'a': 316043, 'b': 201122}, name='subtract', type='tool_use')]
```

So we isolate the tool request and formulate the reply. The tool mapping lets us select the appropriate function by its name (provided by Claude), and we can pass in the inputs as keyword arguments with **subtract_tool_request.input. The tool use ID allows Claude to connect the tool request and its result, which is important when there are several tool calls.

```python
# Pick out the tool_use block from Claude's response
subtract_tool_request = [block for block in initial_response.content if block.type == "tool_use"][0]

# Run the requested function and wrap its output in a tool_result message
subtract_tool_reply = {
    "role": "user",
    "content": [
        {
            "type": "tool_result",
            "tool_use_id": subtract_tool_request.id,
            "content": str(TOOL_MAPPING[subtract_tool_request.name](**subtract_tool_request.input)),
        }
    ],
}
```

Now we add Claude's previous message to the chat history, followed by the appropriately formatted tool reply. Apart from these two additions, we call Claude in exactly the same way:

```python
intermediate_response = client.messages.create(
    model="claude-3-7-sonnet-20250219",
    max_tokens=1024,
    tools=TOOLS,
    messages=[
        {"role": "user", "content": PROMPT},
        {"role": "assistant", "content": initial_response.content},
        subtract_tool_reply,
    ],
)

print(intermediate_response.content)
```

Claude knows it needs to use another tool, this time the square root tool sqrt:

```
[TextBlock(citations=None, text="Now I'll find the square root of this result:", type='text'),
 ToolUseBlock(id='toolu_01PtfMubJWYJtZ9KNDqQ7Zn1', input={'num': 114921}, name='sqrt', type='tool_use')]
```

So we repeat the above tool-calling and reply-formulation process once more (omitted for brevity here, but you can find the full script on GitHub), and finally Claude returns the correct answer to the question:
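The repetition described above can be folded into a loop. The sketch below is not the script from the GitHub repo; it assumes only that each response exposes content (a list of blocks with a type, plus id, name and input for tool calls) and stop_reason, which is how the Anthropic Messages API responses used above are shaped. The model call is passed in as a function (call_model, a hypothetical name) so the loop can be exercised without a network connection.

```python
from typing import Any, Callable


def run_tool_loop(
    call_model: Callable[[list], Any],
    prompt: str,
    tool_mapping: dict[str, Callable[..., Any]],
    max_turns: int = 10,
) -> Any:
    """Keep calling the model, running any tools it requests, until it answers."""
    messages: list = [{"role": "user", "content": prompt}]
    for _ in range(max_turns):
        response = call_model(messages)
        if response.stop_reason != "tool_use":
            return response  # no more tool requests: this is the final answer
        # Keep Claude's turn (text + tool_use blocks) in the history
        messages.append({"role": "assistant", "content": response.content})
        # Run every requested tool and answer each request by its tool_use id
        results = [
            {
                "type": "tool_result",
                "tool_use_id": block.id,
                "content": str(tool_mapping[block.name](**block.input)),
            }
            for block in response.content
            if block.type == "tool_use"
        ]
        messages.append({"role": "user", "content": results})
    raise RuntimeError(f"no final answer after {max_turns} model calls")
```

With the real client, call_model could be a lambda that forwards messages to client.messages.create(model=..., max_tokens=1024, tools=TOOLS, messages=msgs), and tool_mapping would be the TOOL_MAPPING dictionary from above. Answering every tool_use block in one user message matters when Claude requests several tools in a single turn.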

```
[TextBlock(citations=None, text='339.0', type='text')]
```

Importantly, with the tools provided to it, Claude now finds the correct answer reliably: if we ask the same question again, we get the same answer. Claude (and indeed any LLM) can still make mistakes, but in practice it is reliable with this sort of tool use.

So, with tool use we begin to see the power of LLM agents. But we want AI agents to be able to do more than just math - we want an AI assistant that can draft a document for us, share it with colleagues, and send them emails asking for input. How do we get there from an AI agent that can just perform arithmetic reliably?

Level 2: LLMs + Tools + APIs

A big part of the reason computers are so valuable to us is that they can communicate with each other. There is a massive ecosystem of technologies - cloud storage, microservices, virtualization, dynamic scaling, etc. - that we rely on every day for banking, communication, shopping, and more. This ecosystem is an inextricable part of modern life, and many of us rely on it more than we may realize. For AI agents to be truly valuable in the general sense, they need access to this digital infrastructure.

For example, Google runs Google Docs on its servers and exposes this service to you through a web UI, where you can log in, create documents, share them, and so on. Google maintains and manages this whole suite of functionality, but the web UI is not the only way to interact with Google Docs.

Google has chosen to expose its Google Docs service through an API as well. The API allows developers to interact with Google Docs programmatically, so they can build programs around workflows involving Google Docs. For example, if you have a stack of handwritten documents you want to digitize, you could write a program that monitors the target folder of a scanner, uses OCR to convert the images to text, and then uploads them to Google Docs. This way you could quickly and easily digitize a large number of documents without having to manually upload each one.

What if we give LLMs access to these APIs? Then our LLM can understand our problem and plan how to solve it, and then use tools for both local processing and interacting with remote resources to achieve the goal. This is the promise of AI agents - systems that can work autonomously or semi-autonomously to flexibly compose tools in a semantically-driven way.

Let's look at an example of how this works. I'll ask Claude to write a spooky story and then upload it to Google Drive for us. First, as before, we define our prompt, tools, and tool mapping:

```python
import os

import anthropic

from doc_tools import create_document

client = anthropic.Anthropic(
    api_key=os.environ.get("ANTHROPIC_API_KEY"),
)

PROMPT = "Generate a spooky story that is 1 paragraph long, and then upload it to Google docs."

TOOL_MAPPING = {
    "create_document": create_document,
}

TOOLS = [
    {
        "name": "create_document",
        "description": "Create a new Google Document with the given title and text",
        "input_schema": {
            "type": "object",
            "properties": {
                "title": {
                    "type": "string",
                    "description": "The title of the document",
                },
                "text": {
                    "type": "string",
                    "description": "The text to insert into the document body",
                },
            },
            "required": ["title", "text"],
        },
    },
]
```

In this case, we have only one tool, create_document. As before, we submit our request to Claude:

```python
initial_response = client.messages.create(
    model="claude-3-7-sonnet-20250219",
    max_tokens=1024,
    tools=TOOLS,
    messages=[{"role": "user", "content": PROMPT}],
)

print(initial_response.content)
```

Claude responds with a text block and a tool request for the create_document function.

```
[TextBlock(citations=None, text="I'd be happy to create a spooky story for you and upload it to Google Docs. Let me write a one-paragraph spooky story and then create the document for you.", type='text'),
 ToolUseBlock(id='toolu_013aFg9xXVp4Z6m7htfEJwXJ', input={'title': 'Spooky Short Story',
 'text': 'The old mansion at the end of Willow Street ... and escape.'}, name='create_document', type='tool_use')]
```

As in the example in the previous section, we execute the tool with Claude's inputs, and then return the result to Claude to continue the conversation:

```python
create_doc_tool_request = [block for block in initial_response.content if block.type == "tool_use"][0]

create_doc_tool_reply = {
    "role": "user",
    "content": [
        {
            "type": "tool_result",
            "tool_use_id": create_doc_tool_request.id,
            "content": str(TOOL_MAPPING[create_doc_tool_request.name](**create_doc_tool_request.input)),
        }
    ],
}

created_doc_response = client.messages.create(
    model="claude-3-7-sonnet-20250219",
    max_tokens=1024,
    tools=TOOLS,
    messages=[
        {"role": "user", "content": PROMPT},
        {"role": "assistant", "content": initial_response.content},
        create_doc_tool_reply,
    ],
)

# content is a list of blocks; the final reply is the first block's text
print(created_doc_response.content[0].text)
```

And, finally, the LLM lets us know that it succeeded:

```
I've created a spooky story and uploaded it to Google Docs with the title "Spooky Short Story." The document has been successfully created and contains a one-paragraph spooky tale about Sarah's unsettling experience at an abandoned mansion on Willow Street.
```

If I check my Google Drive, I can see that the document has indeed been created!

The only fundamental difference between this example and the one in the previous section is that we've changed our toolset. The "heavy lifting" is done by the create_document function/tool that we've imported from the doc_tools.py file:

```python
from doc_tools import create_document
```

You can find the full doc_tools.py file on GitHub here; it uses Google's SDK to interact with the Google Docs API in order to upload the file.
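The real doc_tools.py may differ, but as a rough sketch, such a function could follow the Google Docs API v1 pattern used by google-api-python-client: documents().create(...) to make an empty document with a title, then documents().batchUpdate(...) with an insertText request to fill in the body. The function name create_document_sketch is hypothetical, and building the authenticated service object (OAuth credentials and so on) is assumed and omitted.

```python
from typing import Any


def create_document_sketch(service: Any, title: str, text: str) -> str:
    """Create a Google Doc titled `title` containing `text`; return its documentId.

    `service` is assumed to be an authenticated Docs API client, for example the
    object returned by googleapiclient.discovery.build("docs", "v1", credentials=creds).
    """
    # Step 1: create an empty document with the requested title
    doc = service.documents().create(body={"title": title}).execute()
    document_id = doc["documentId"]
    # Step 2: insert the text at index 1, the start of a new document's body
    requests = [{"insertText": {"location": {"index": 1}, "text": text}}]
    service.documents().batchUpdate(
        documentId=document_id, body={"requests": requests}
    ).execute()
    return document_id
```

Taking service as a parameter keeps the authentication details out of the tool itself and makes the function easy to test with a mock.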

The ability for AI agents to leverage external APIs in this way is amazing, and there is a lot of promise here, but there are also a few problems.

First, it is a lot of work. Every time we want to use a service in this way, we have to write a tool. Here the tool is essentially a wrapper around the underlying API, but that will not always be the case. And even a simple wrapper is one more thing to maintain, increasing the overall "surface area" and leaving more room for software entropy to sneak in. This is especially true given that a generally competent AI agent will likely need access to a lot of tools.

Second, rolling your own tools for AI agents raises serious security concerns. After all, an AI assistant may have access to your bank accounts, health records, and personal information. If everyone builds their own way for agents to access this information, it is only a matter of time before sensitive information is sent to the wrong place, credentials are leaked, or something similar happens.

Third, we have to make sure our tools are constantly up to date with service providers! Any time they make a change, we have to make a change to the corresponding tool in our application.

What if there were a way around these problems?

This is the goal of MCP.

Level 3: LLMs + MCP

We noted in the first section that protocols are a way to standardize how we build interoperable systems. There are many protocols in software.

For example, look in the URL bar of the browser you're reading this article in and you will see https. HyperText Transfer Protocol Secure (HTTPS), the encrypted form of HTTP, is a standard that serves as the foundation for web communication, establishing clear rules for how browsers and servers exchange information. By providing a standardized communication framework, HTTPS enables the seamless web experience we rely on daily. Without this critical protocol, the web as we know it couldn't function, because browsers and web servers would lack a "common language".

The Model Context Protocol is the HTTPS of AI agents. It is a standard for how AI systems can communicate with data sources, tools, and services. MCP allows us to build AI systems that are interoperable and composable, and it allows us to leverage the existing infrastructure that we have built up over decades in an intelligent way.

Let's see how it works in an example, and then we'll discuss why it matters.

MCP adopts a client-server architecture. A service exposes tools and other capabilities via an MCP server, which an AI agent can leverage through an MCP client. An MCP server doesn't just expose a REST API like the Google Docs API we used in the last section; it describes its tools in a form the AI agent can natively understand, so we don't need to build the "translation layer" we wrote in the previous section (the doc_tools.py file). Let's see how we'd recast the previous example of uploading a spooky story to Google Docs using MCP.

Note: to use most MCP servers today, you actually have to run them locally. A local client connects to a local server, which makes requests to external services. Cloudflare has a good write-up on this and on the current efforts to move this functionality into the cloud, making it easier for AI agents to leverage MCP.

Building the MCP server

The MCP server itself is actually relatively short and simple. Here it is in its entirety:

```python
from mcp.server.fastmcp import FastMCP

from doc_tools import create_document

# Create an MCP server named "google_docs"
mcp = FastMCP("google_docs")

def format_response(response: str) -> str:
    return f"Response: {response}"

# Register the function as a tool; its signature defines the tool's inputs
@mcp.tool()
async def create_google_doc(title: str, text: str) -> str:
    """Create a new Google Doc with the given title and text."""
    result = create_document(title, text)
    return format_response(result)

if __name__ == "__main__":
    # Initialize and run the server, communicating over stdin/stdout
    mcp.run(transport='stdio')
```

The mcp package is provided by Anthropic's MCP Python SDK repository and allows us to build MCP servers in Python (there is also a TypeScript SDK).

We use the package to create an MCP server called google_docs. In the last section we imported the create_document function, described it to the LLM via the tools parameter, and dispatched Claude's tool requests to it ourselves through TOOL_MAPPING. In an MCP server, we instead write an asynchronous function (create_google_doc) and apply the mcp.tool decorator to register it as a tool with the server. The tool's input schema is inferred automatically from the function's signature and type hints, so we don't need to write it ourselves.
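FastMCP's real implementation is more complete, but the core idea of inferring a tool's input schema from its signature can be sketched with the standard library. The helper infer_input_schema below is hypothetical and handles only a few basic types; it produces the same kind of JSON Schema we wrote by hand for TOOLS in the earlier sections.

```python
import inspect
from typing import Any, Callable, get_type_hints

# A few Python types and their JSON Schema equivalents (deliberately incomplete)
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def infer_input_schema(func: Callable[..., Any]) -> dict:
    """Build a JSON Schema object describing func's parameters from its type hints."""
    hints = get_type_hints(func)
    properties: dict = {}
    required: list = []
    for name, param in inspect.signature(func).parameters.items():
        hint = hints.get(name)
        # Unknown or missing annotations get an empty (accept-anything) schema
        properties[name] = {"type": _JSON_TYPES[hint]} if hint in _JSON_TYPES else {}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # no default value, so the caller must supply it
    return {"type": "object", "properties": properties, "required": required}


# A stub with the same signature as the server's tool (the body is irrelevant here)
async def create_google_doc(title: str, text: str) -> str:
    ...


print(infer_input_schema(create_google_doc))
```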

Finally, we run the server with mcp.run(). This will start the server and listen for incoming requests. The transport argument specifies how the server will communicate with clients. In this case, we use stdio, which means it will read from standard input and write to standard output.
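To make the stdio transport less abstract: in the MCP specification, messages over stdio are individual JSON-RPC 2.0 messages separated by newlines, and tools are listed and invoked with the tools/list and tools/call methods. The toy dispatcher below is not how the SDK is implemented; it skips the initialization handshake, notifications and error replies, and its TOY_TOOLS registry is hypothetical. It only shows the shape of a server that reads requests line by line and writes responses.

```python
import json
from typing import Any, Callable, TextIO

# Hypothetical registry standing in for the tools that @mcp.tool() registers
TOY_TOOLS: dict[str, Callable[..., str]] = {
    "create_google_doc": lambda title, text: f"Response: created '{title}'",
}


def serve(stdin: TextIO, stdout: TextIO) -> None:
    """Read one JSON-RPC request per line and write one JSON response per line."""
    for line in stdin:
        if not line.strip():
            continue
        request = json.loads(line)
        method = request.get("method")
        if method == "tools/list":
            # A real server also sends each tool's description and inputSchema
            result: Any = {"tools": [{"name": name} for name in TOY_TOOLS]}
        elif method == "tools/call":
            params = request["params"]
            output = TOY_TOOLS[params["name"]](**params.get("arguments", {}))
            # Tool results come back as a list of content items
            result = {"content": [{"type": "text", "text": output}]}
        else:
            # Initialization, notifications and error replies are out of scope here
            continue
        response = {"jsonrpc": "2.0", "id": request["id"], "result": result}
        stdout.write(json.dumps(response) + "\n")  # newline-delimited framing
        stdout.flush()
```

In a real process this would be called as serve(sys.stdin, sys.stdout), which is why anything the server logs must go to stderr rather than stdout.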

It's that easy to build an MCP server, but the crucial point is that in practice we wouldn't be the ones doing this (more on this later). Now we need to connect to this server with an MCP client.

Building the MCP client

The great thing here is that there's not much work to do. Since MCP is a standard protocol, the way clients interact with servers is standardized. In the same way that you don't need custom work to request a file from a remote web server (any tool that can make a GET request will do), we don't need custom work here to accommodate our particular MCP server.
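Most of what a client does is generic. The step that replaces our hand-written TOOLS list is converting the server's tool descriptions into the format Claude expects; the MCP quickstart client does this with the name, description and inputSchema of each tool in the result of session.list_tools(). Below is a minimal sketch of that conversion; the function name to_anthropic_tools is hypothetical, and the MCP tool object is faked with SimpleNamespace so the snippet runs without a server.

```python
from types import SimpleNamespace
from typing import Any, Iterable


def to_anthropic_tools(mcp_tools: Iterable[Any]) -> list[dict]:
    """Convert MCP tool descriptions (name, description, inputSchema) into
    the dict format the Anthropic Messages API expects in `tools=`."""
    return [
        {
            "name": tool.name,
            "description": tool.description or "",
            "input_schema": tool.inputSchema,
        }
        for tool in mcp_tools
    ]


# Stand-in for one entry of (await session.list_tools()).tools for our server
example = SimpleNamespace(
    name="create_google_doc",
    description="Create a new Google Doc with the given title and text.",
    inputSchema={
        "type": "object",
        "properties": {"title": {"type": "string"}, "text": {"type": "string"}},
        "required": ["title", "text"],
    },
)
print(to_anthropic_tools([example]))
```

Because the server already publishes a JSON Schema for each tool, the client only renames a field; nothing service-specific has to be written by hand.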

In fact, we can just take the code directly from the MCP docs and run the chat loop with:

```shell
python client.py ./path/to/server.py
```

The program requests the available tools, prints them to the terminal, and then asks for a command:

```
[04/11/25 14:00:11] INFO Processing request of type ListToolsRequest server.py:534
Connected to server with tools: ['create_google_doc']

MCP Client Started!
Type your queries or 'quit' to exit.

Query:
```

We give it a command similar to the one in the last section, but this time ask for a specific title for the piece:

```
Query: Write me a 1 paragraph spooky story and then upload it to google drive. Name the file "MCP Spooky Story!"
[04/11/25 14:02:27] INFO Processing request of type ListToolsRequest server.py:534
[04/11/25 14:02:32] INFO Processing request of type CallToolRequest server.py:534
INFO file_cache is only supported with oauth2client<4.0.0 __init__.py:49

I'll help you write a spooky story and upload it to Google Drive. Let me create the document with a short scary paragraph.

[Calling tool create_google_doc with args {'title': 'MCP Spooky Story!', 'text': "The old grandfather clock struck midnight, its deep chimes echoing through the empty hallway of Sarah's Victorian home. She had always dismissed her grandmother's warnings about the thirteenth chime - the one that supposedly came after midnight on particularly dark nights. But tonight, as she sat alone in her study, the clock struck that impossible thirteenth time, and in the mirror across the room, Sarah caught a glimpse of something standing behind her chair - something that smiled with too many teeth and reached toward her with long, spindly fingers that shouldn't have been there at all."}]

I've created a Google Doc with your spooky story! The document has been titled "MCP Spooky Story!" as requested and contains a short, creepy paragraph about a thirteenth chime and an unsettling presence. The document has been successfully uploaded to your Google Drive.

Query:
```

As before, the program creates the story and uploads it to my Google Drive:

Why does MCP matter?

You now have a solid understanding of what MCP is, how it works, and a few reasons it's important. Let's take a closer look at why MCP really matters. There are a few levels to this consideration; we'll start with the practical level of why it matters to AI agents.

The aspect of MCP that many seem to focus on is that it standardizes the way AI agents interact with tools and services. As we've seen above, this is indeed a big deal because it means we can build AI systems that are interoperable and composable, but there is another aspect of MCP that is perhaps even more valuable: MCP shifts the onus of making services accessible to AI agents onto the service providers. When we built the MCP server above, it may have seemed pointless, as if we had just added another file for no reason, but in practice it would be Google, not us, that builds that MCP server. All we have to do is connect to the server and use the tools it exposes.

Let's consider an analogy to see why this matters. We discussed above how many providers offer APIs for interacting with their services. Development teams that want to use these APIs come up with their own ways to do so in their applications. Below we see an example of how two teams might each create a function to call AssemblyAI's API to create a transcript:
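As an illustrative sketch of that situation, two independently written functions might each hard-code the same request. The endpoint and authorization header follow AssemblyAI's v2 transcript API as we understand it (check AssemblyAI's documentation before relying on them); the function names are hypothetical, and the functions only build the request rather than send it, so the snippet runs offline.

```python
import json
import urllib.request


# Team A's application
def team_a_submit_transcript(api_key: str, audio_url: str) -> urllib.request.Request:
    return urllib.request.Request(
        "https://api.assemblyai.com/v2/transcript",  # endpoint hard-coded here...
        data=json.dumps({"audio_url": audio_url}).encode(),
        headers={"authorization": api_key, "content-type": "application/json"},
        method="POST",
    )


# Team B's application, written independently
def team_b_start_job(key: str, url: str) -> urllib.request.Request:
    payload = json.dumps({"audio_url": url}).encode()
    return urllib.request.Request(
        "https://api.assemblyai.com/v2/transcript",  # ...and hard-coded again here
        data=payload,
        headers={"authorization": key, "content-type": "application/json"},
        method="POST",
    )
```

Either request would be sent with urllib.request.urlopen(request). The important detail is that the URL and payload format are duplicated in both codebases.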

This certainly works, but consider what happens if AssemblyAI needs to update its API, say by changing the relevant endpoint. The change cascades into a multiplicative maintenance burden - every application that makes requests to this endpoint needs to be updated or it will stop functioning properly.

To make matters worse, if a development team does not follow good software engineering practices, for example by not keeping its code DRY, the change has to be made in many places across the team's codebase rather than in just one.

Of course, we hope development teams design their software to avoid this, but if they don't, a single API change could ripple across the entire codebase. To circumvent this issue, some service providers offer SDKs, which expose the service's functionality natively in the language and environment the developer is working in. That way, the developer can just use the SDK and not worry about the underlying API. In other words, the SDK provides a layer of abstraction that keeps applications' codebases decoupled from the API's implementation details.
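A minimal sketch of that decoupling, using a hypothetical TranscriptClient as a stand-in for an SDK (it is not AssemblyAI's actual SDK): the endpoint lives in exactly one place, and applications only call submit(audio_url). The HTTP call is injected as a function so the transport can be swapped out, for example in tests.

```python
import json
from typing import Callable

# (url, body, headers) -> parsed JSON response; injected so the transport is swappable
PostFn = Callable[[str, bytes, dict], dict]


class TranscriptClient:
    """Hypothetical SDK-style wrapper: the only code that knows the endpoint."""

    BASE_URL = "https://api.assemblyai.com/v2"

    def __init__(self, api_key: str, post: PostFn):
        self._api_key = api_key
        self._post = post

    def submit(self, audio_url: str) -> dict:
        # If the provider changes its endpoint, only this method needs updating
        return self._post(
            f"{self.BASE_URL}/transcript",
            json.dumps({"audio_url": audio_url}).encode(),
            {"authorization": self._api_key, "content-type": "application/json"},
        )
```

Every application then depends on submit() rather than on a URL, so an endpoint change becomes a single update to the wrapper (or, with a real SDK, a version bump).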

Decoupling - both in this way and others - is a common practice in software engineering, and it allows developers to focus on building their applications rather than worrying about the details of how to interact with a service.

This is exactly what MCP does for AI agents! For providers who offer an MCP server, developers can hook AI agents into the corresponding service using a unified "language" for LLMs. This allows the AI agents to immediately access functionality in a robust way that is resilient against change. Additionally, it means that many different development teams don't have to spend time building custom tools for common tasks that an AI agent would need to do with a given service.

Rather than having to build the "bridge" to a service and then do something with the results - i.e. implement the business logic of their program - developers can just focus on the latter. This separation of concerns is especially important for AI agents given that so much of their utility comes in the form of composing tools in flexible and semantically-driven ways. In other words, the power of AI agents comes disproportionately from interacting with external services, the implementation details of which MCP abstracts away.

What does MCP mean for the future?

The Model Context Protocol is a seminal step in the development of modern agentic AI systems. By standardizing how AI systems interact with external tools and services, MCP cuts the time and energy wasted in building intelligent applications and lowers the barrier to entry for building them, which means faster development cycles and more reliable applications. Ultimately, in the coming years we'll see the rapid proliferation of agentic systems, and hopefully a tight feedback loop between the user experience and the design and topology of these agentic networks.

We'll have more MCP content coming in the next few weeks, so follow our newsletter if you want to stay up to date with our content. You can also check out our YouTube channel if you want to learn more about AI agents, LLMs, and building with AI, like this video on building an AI agent for Speech-to-Text with LiveKit:
