Introducing the AssemblyAI integration for LangChain Go

Learn how to use audio transcripts in LangChain Go using the AssemblyAI document loader.

LangChain is a framework for developing applications using Large Language Models (LLMs). It provides common building blocks for integrating LLMs into your application.

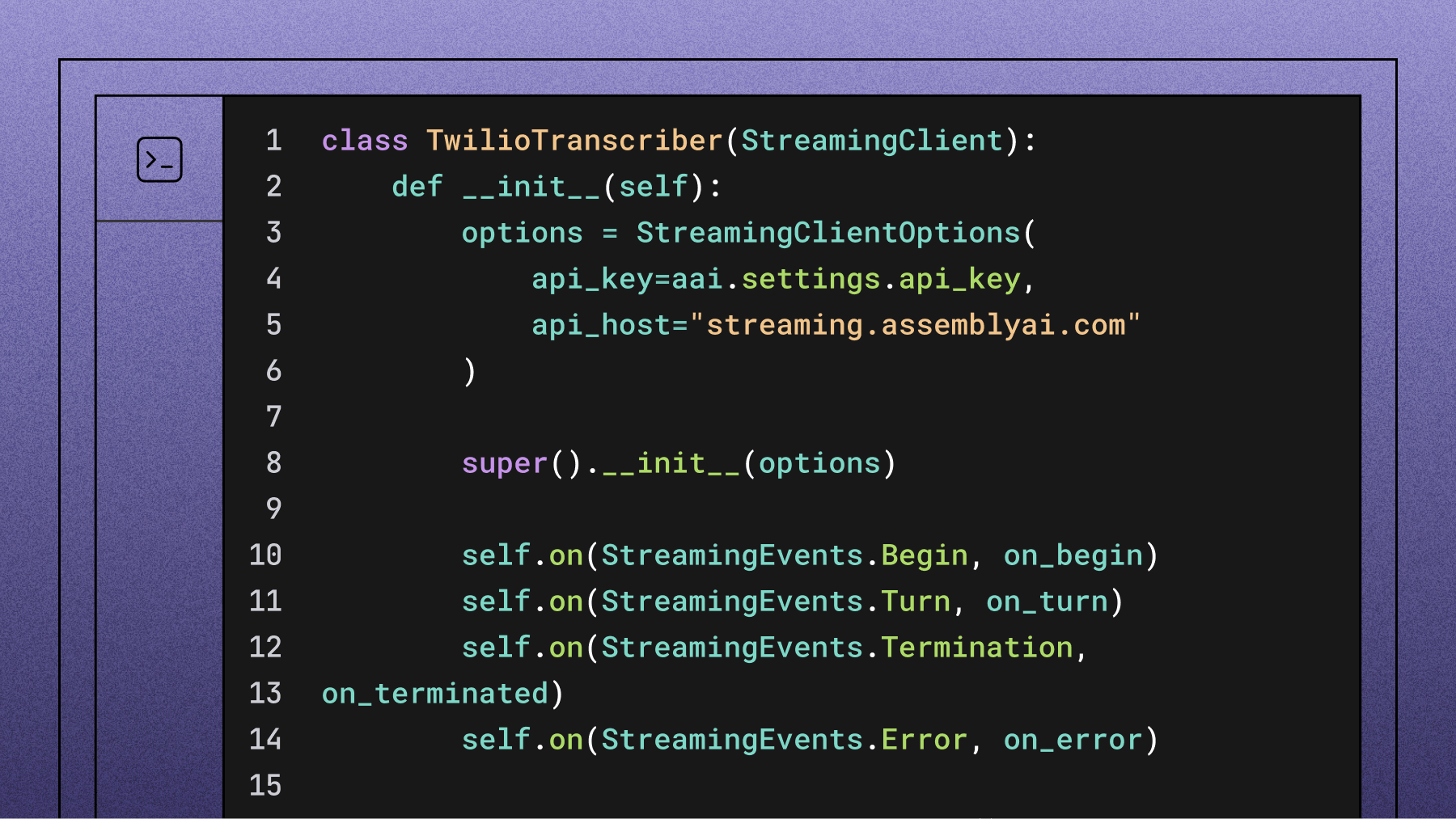

However, LLMs only operate on textual data and don't understand audio data. With our recent contribution to LangChain Go, you can now integrate AssemblyAI's industry-leading speech-to-text models using the new document loader.

💡 The AssemblyAI document loader is also available for both LangChain (Python) and LangChain.js (JavaScript).

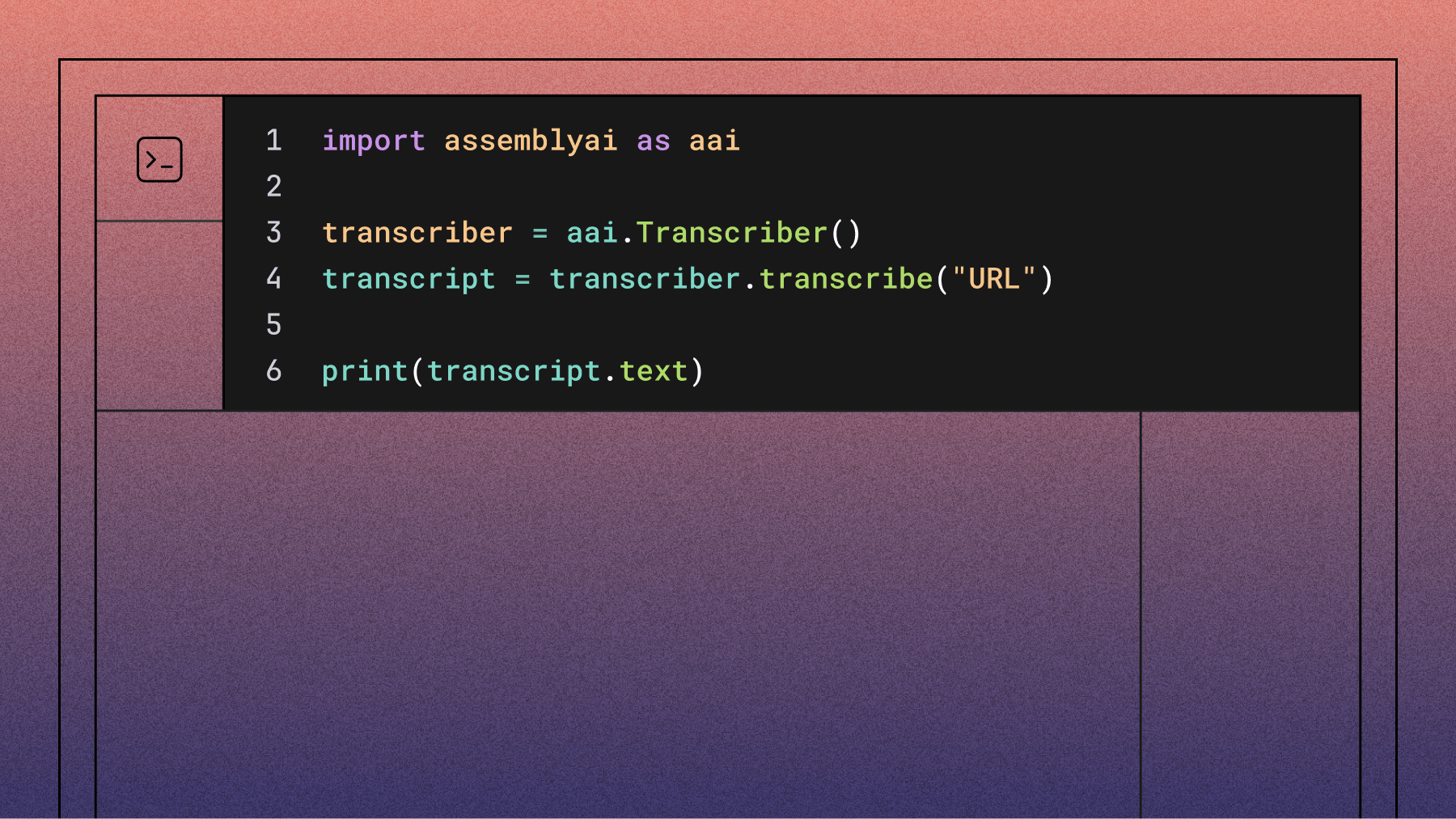

The following example answers a question about an audio file. The example uses AssemblyAI to transcribe the audio and OpenAI to generate a response to the question.

    package main

    import (
    	"context"
    	"fmt"
    	"os"

    	"github.com/AssemblyAI/assemblyai-go-sdk"
    	"github.com/tmc/langchaingo/chains"
    	"github.com/tmc/langchaingo/documentloaders"
    	"github.com/tmc/langchaingo/llms/openai"
    )

    func main() {
    	apiKey := os.Getenv("ASSEMBLYAI_API_KEY")

    	// Errors are discarded with _ to keep the example short;
    	// check them in real code.
    	llm, _ := openai.New()

    	// Question-answering chain that passes the loaded documents to the LLM.
    	chain := chains.LoadStuffQA(llm)

    	// Document loader that transcribes the audio file with AssemblyAI.
    	loader := documentloaders.NewAssemblyAIAudioTranscript(
    		apiKey,
    		documentloaders.WithAudioURL("https://storage.googleapis.com/aai-docs-samples/sports_injuries.mp3"),
    		documentloaders.WithTranscriptParams(&assemblyai.TranscriptOptionalParams{
    			LanguageCode: "en_us",
    		}),
    	)

    	ctx := context.Background()

    	// Transcribe the audio and load the transcript as documents.
    	docs, _ := loader.Load(ctx)

    	// Ask the question against the transcript.
    	answer, _ := chains.Call(ctx, chain, map[string]any{
    		"input_documents": docs,
    		"question":        "What is a runner's knee?",
    	})

    	fmt.Println(answer["text"])
    }

When you run the example, you'll get an output similar to the following:

Runner's knee is a condition characterized by pain behind or around the kneecap, caused by overuse, muscle imbalance, and inadequate stretching. Symptoms include pain under or around the kneecap and pain when walking.
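To make the flow above more concrete: a "stuff" question-answering chain places the text of every loaded document into a single prompt, together with the question, and sends that prompt to the LLM. The sketch below illustrates the idea with the standard library only. It is not LangChain Go's implementation; the `Document` type, the `buildStuffPrompt` function, and the prompt wording are all hypothetical stand-ins.

```go
package main

import (
	"fmt"
	"strings"
)

// Document is a simplified, hypothetical stand-in for a loaded document.
// Only the text content matters for this illustration.
type Document struct {
	PageContent string
}

// buildStuffPrompt joins ("stuffs") the text of every document into one
// context block and appends the question. The prompt wording is made up
// for illustration.
func buildStuffPrompt(docs []Document, question string) string {
	parts := make([]string, 0, len(docs))
	for _, d := range docs {
		parts = append(parts, d.PageContent)
	}
	joined := strings.Join(parts, "\n\n")
	return fmt.Sprintf(
		"Use the following context to answer the question.\n\n%s\n\nQuestion: %s\nAnswer:",
		joined, question,
	)
}

func main() {
	// In the real example, the document text would be the audio transcript.
	docs := []Document{{PageContent: "Transcript text of the audio file."}}
	fmt.Println(buildStuffPrompt(docs, "What is a runner's knee?"))
}
```

Because the whole transcript goes into one prompt, this approach suits recordings whose transcript fits within the model's context window.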

Leverage LLMs for audio data using LeMUR

To learn about other ways to use the audio transcript loader in a chain, see the LangChain Go documentation.

If you're not already using LangChain Go, or if you're applying LLMs primarily to audio data, we encourage you to try LeMUR, a framework for leveraging LLMs to understand speech.
