What is speech recognition? A comprehensive guide

The use of speech recognition technology is growing rapidly and is expected to expand by more than 14% year over year for the foreseeable future.

Recent advances in AI research have made speech recognition models more accurate and accessible than ever before. These advances, combined with consumers’ growing consumption of digital audio and video, are driving this growth and transforming the way we interact with the technology in both our personal and professional lives.

In this article, we’ll provide a comprehensive overview of speech recognition, including its benefits, applications, and how to get started using the technology.

What is speech recognition?

Let’s start with a definition.

Speech recognition, also referred to as speech-to-text or Automatic Speech Recognition (ASR), is the use of Artificial Intelligence (AI) or Machine Learning to convert spoken words into readable text.

Speech recognition technology has existed since 1952, when the famed Bell Labs created “Audrey,” a digit recognizer. Initially, Audrey could only transcribe spoken numbers, but a decade later, researchers were able to make Audrey transcribe rudimentary spoken words like “hello.”

Later, researchers used classical Machine Learning technologies like Hidden Markov Models to power speech recognition models, though the accuracy of these classical models eventually plateaued.

Today, deep learning technology, heavily influenced by Baidu’s seminal paper “Deep Speech: Scaling up end-to-end speech recognition,” dominates the field.

In the next section, we’ll discuss how these deep learning approaches work in more detail.

How does speech recognition work?

In its simplest form, a speech recognition model takes audio input, breaks it into its individual parts, and outputs written text.

Speech recognition models today typically use an end-to-end deep learning approach. This is because end-to-end deep learning models require less human effort to train and are more accurate than previous approaches.

Speech recognition happens in three main steps: audio preprocessing, recognition by the deep learning model, and text formatting.

Audio preprocessing converts the audio input into a usable format through transcoding, normalization, and segmentation.
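As a rough illustration, the sketch below shows simplified versions of these three preprocessing steps in plain Python. It assumes the audio has already been decoded to 16-bit PCM samples (real pipelines usually transcode compressed formats such as MP3 first, with a tool like FFmpeg), so “transcoding” here is reduced to converting integer samples to floats. The chunk length and overlap are arbitrary values chosen for the example, not settings from any particular system.

```python
def pcm16_to_float(samples: list[int]) -> list[float]:
    """Convert signed 16-bit PCM integers to floats in the range [-1.0, 1.0)."""
    return [s / 32768.0 for s in samples]


def peak_normalize(samples: list[float], target_peak: float = 0.9) -> list[float]:
    """Scale the signal so that its loudest sample reaches target_peak."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return list(samples)  # silence (or empty input): nothing to scale
    gain = target_peak / peak
    return [s * gain for s in samples]


def segment(samples: list[float], sample_rate: int,
            chunk_s: float = 30.0, overlap_s: float = 1.0) -> list[list[float]]:
    """Split audio into fixed-length chunks that overlap slightly,
    so words near a chunk boundary are less likely to be cut off."""
    chunk_len = int(chunk_s * sample_rate)
    hop = chunk_len - int(overlap_s * sample_rate)
    if hop <= 0:
        raise ValueError("overlap must be shorter than the chunk length")
    chunks = []
    for start in range(0, len(samples), hop):
        chunks.append(samples[start:start + chunk_len])
        if start + chunk_len >= len(samples):
            break  # this chunk already reaches the end of the audio
    return chunks


# Usage: one second of 16 kHz audio, already decoded to 16-bit integer samples.
raw = [0, 1200, -3400, 16000] * 4000  # 16,000 samples
audio = peak_normalize(pcm16_to_float(raw))
pieces = segment(audio, sample_rate=16_000, chunk_s=0.5, overlap_s=0.1)
print(len(pieces))  # 3
```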

The deep learning speech recognition model then maps the preprocessed audio to a sequence of words. Many modern systems use Transformer or Conformer architectures for this step.

These models estimate the likelihood of each word, or other linguistic unit such as a character or subword, being spoken in each short time frame. A decoder then uses these per-linguistic-unit likelihoods to generate the most probable word sequence.
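Which decoder a given model uses varies. One common approach for end-to-end models is Connectionist Temporal Classification (CTC), and its simplest decoder is greedy: take the most likely unit in each frame, merge consecutive repeats, and drop the special “blank” symbol. The sketch below assumes character-level units and uses made-up frame probabilities; production decoders more often use beam search, sometimes combined with a language model.

```python
BLANK = "_"  # CTC "blank" symbol: the model emits no new unit in this frame


def ctc_greedy_decode(frame_probs: list[dict[str, float]]) -> str:
    """Greedy CTC decoding: best unit per frame, collapse repeats, remove blanks."""
    best = [max(probs, key=probs.get) for probs in frame_probs]
    output = []
    previous = None
    for unit in best:
        # A repeated unit in consecutive frames is one emission; blanks are dropped.
        if unit != previous and unit != BLANK:
            output.append(unit)
        previous = unit
    return "".join(output)


# Hypothetical per-frame probabilities over a tiny vocabulary {h, i, blank}.
frames = [
    {"h": 0.7, "i": 0.1, "_": 0.2},
    {"h": 0.6, "i": 0.1, "_": 0.3},
    {"h": 0.1, "i": 0.2, "_": 0.7},
    {"h": 0.1, "i": 0.8, "_": 0.1},
    {"h": 0.0, "i": 0.1, "_": 0.9},
]
print(ctc_greedy_decode(frames))  # hi
```

The blank symbol is what lets CTC spell doubled letters: the frame sequence “l, blank, l” decodes to “ll”, while “l, l” decodes to a single “l”.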

Finally, text formatting makes the model’s output readable. Raw outputs typically lack punctuation and casing, and numbers and email addresses are spelled out word by word (for example, “forty two” instead of “42”). Text formatting converts these outputs into the familiar written form we’re used to seeing.
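A toy version of text formatting might look like the following. It is a rule-based sketch under simplifying assumptions (only numbers up to 99, sentence-initial capitalization, and a trailing period); production systems typically use trained models for punctuation, casing, and number conversion.

```python
UNITS = {w: i for i, w in enumerate(
    "zero one two three four five six seven eight nine ten eleven twelve thirteen "
    "fourteen fifteen sixteen seventeen eighteen nineteen".split())}
TENS = {w: 10 * i for i, w in enumerate(
    "twenty thirty forty fifty sixty seventy eighty ninety".split(), start=2)}


def words_to_digits(tokens: list[str]) -> list[str]:
    """Replace spelled-out numbers from 0 to 99 with digits."""
    out, i = [], 0
    while i < len(tokens):
        word = tokens[i]
        if word in TENS:
            value = TENS[word]
            # "forty two" -> 42: absorb a following single digit 1-9
            if i + 1 < len(tokens) and 0 < UNITS.get(tokens[i + 1], 0) < 10:
                value += UNITS[tokens[i + 1]]
                i += 1
            out.append(str(value))
        elif word in UNITS:
            out.append(str(UNITS[word]))
        else:
            out.append(word)
        i += 1
    return out


def format_transcript(raw: str) -> str:
    """Convert number words, capitalize the first letter, and end with a period."""
    text = " ".join(words_to_digits(raw.lower().split()))
    if not text:
        return text
    text = text[0].upper() + text[1:]
    if text[-1] not in ".?!":
        text += "."
    return text


print(format_transcript("my order number is forty two and it arrived three days late"))
# My order number is 42 and it arrived 3 days late.
```

Rules like these break down quickly: “one of them” would become “1 of them,” which is one reason learned formatting models are preferred in practice.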

Not all speech recognition models are created equal: their accuracy can be limited by factors such as accents, background noise, language, and the quality of the audio input. Evaluating models carefully against explicit criteria will help you determine the best fit for your needs.

Today’s top speech recognition models, like Universal-1, are trained on millions of hours of multilingual audio data to help overcome these challenges. Universal-1, for example, delivers near-human speech-to-text accuracy in almost all conditions, including accented speech, heavy background noise, and switches between spoken languages, and it returns results quickly.

Applications of speech recognition: More than just dictation

Speech recognition applications today reach far beyond just dictation software. In fact, AI speech recognition technology is powering a wide range of versatile Speech AI use cases across numerous industries.

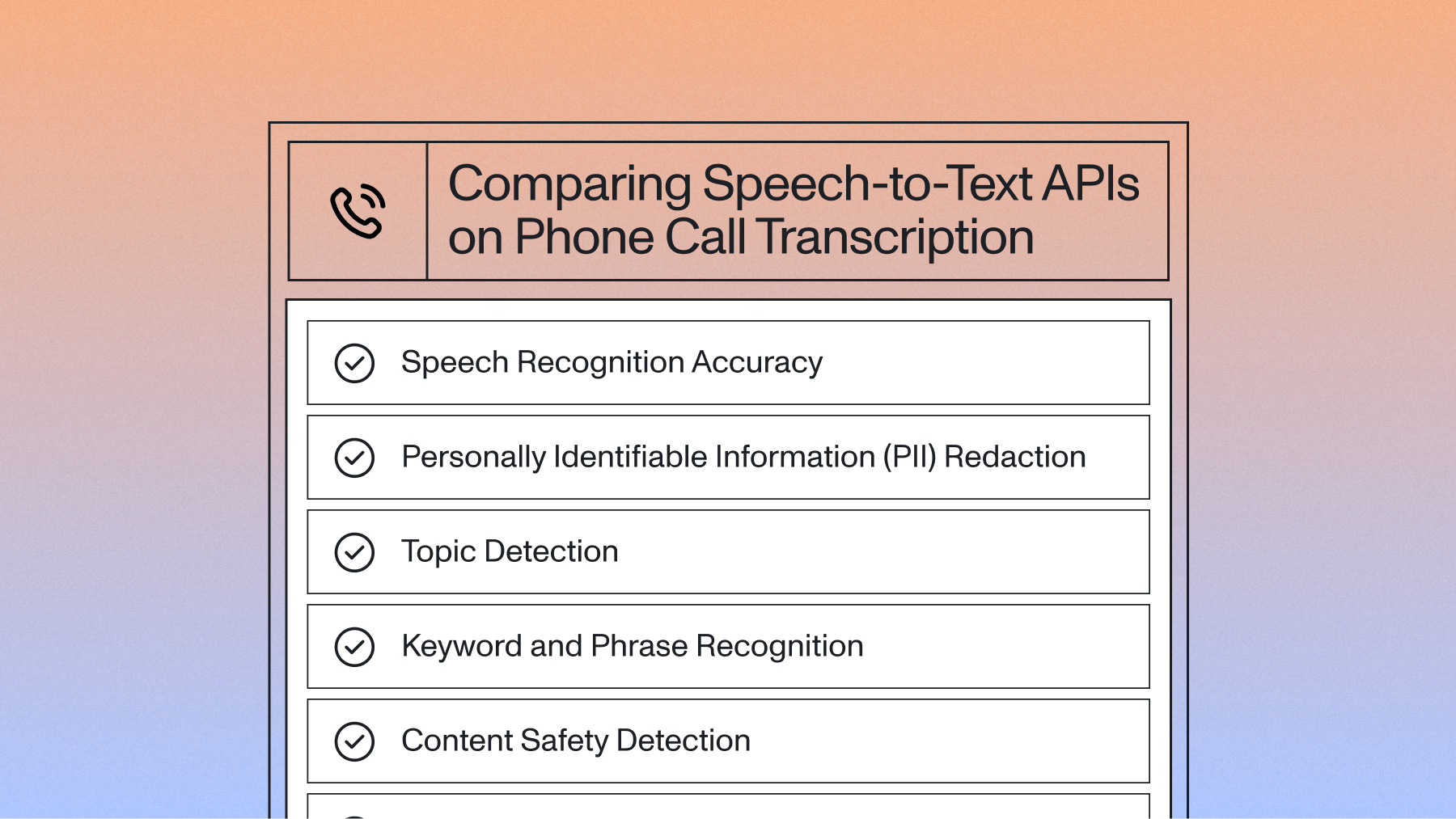

Streaming Speech-to-Text, for example, is being used to build apps that create on-screen subtitles during live broadcasts and virtual meetings, to support customer service agents during live calls, and to generate live notes during online educational courses.

Here are a few other industry examples of speech recognition applications:

Customer service

Speech recognition is being used as the foundation for powerful Conversation Intelligence platforms and to augment call centers, voice assistants, chatbots, and more. Conversation Intelligence platforms, for example, transcribe calls using speech recognition models and then apply additional Speech AI models to this data to analyze calls at scale, automate personalized responses, coach customer service representatives, identify industry trends, and more. Combined, these Speech AI tools create a better overall user experience.

Healthcare

The healthcare industry uses speech recognition technology to transcribe both in-office and virtual patient-doctor interactions. Additional Speech AI models are then used to perform actions such as redacting sensitive information from medical transcriptions and auto-populating appointment notes to reduce doctor burden.

Accessibility

Speech recognition models are also being used to increase accessibility across industries: ensuring people with hearing impairments can access needed information, supporting diverse learning styles with subtitles, providing captions that improve media consumption, and improving the overall user experience.

Education

K-12 school systems and universities are implementing speech recognition tools to make online learning more accessible and user-friendly. Learning management systems, or LMSs, are adding speech-to-text transcription to increase the accessibility of course materials, as well as building with additional Speech AI models that can catalog course content, help educators evaluate reading comprehension, augment feedback loops, and more.

Content creation

Not surprisingly, speech recognition models are also being used by the content creation community. Tools like AI subtitle generators help creators more easily add AI-generated subtitles to their videos, as well as allow them to modify how the subtitles are displayed (color, font, size, etc.) on the video itself. The addition of subtitles makes the videos more accessible and increases their searchability to generate more traffic.
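Under the hood, a subtitle generator needs word-level timestamps from the speech recognition model, grouped into caption blocks. The sketch below writes the common SubRip (.srt) caption format. The `(text, start_ms, end_ms)` tuple shape for the input words is an assumption, since each API returns timestamps in its own structure, and grouping by a fixed `max_words` is an arbitrary rule chosen for simplicity.

```python
def srt_time(ms: int) -> str:
    """Format milliseconds as an SRT timestamp: HH:MM:SS,mmm."""
    hours, rem = divmod(ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    seconds, millis = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d},{millis:03d}"


def to_srt(words: list[tuple[str, int, int]], max_words: int = 7) -> str:
    """Group timestamped words into numbered SRT caption blocks."""
    blocks = []
    for i in range(0, len(words), max_words):
        group = words[i:i + max_words]
        start, end = group[0][1], group[-1][2]  # first word's start, last word's end
        text = " ".join(word for word, _, _ in group)
        blocks.append(f"{len(blocks) + 1}\n{srt_time(start)} --> {srt_time(end)}\n{text}\n")
    return "\n".join(blocks)  # a blank line separates consecutive blocks


words = [("Welcome", 0, 400), ("back", 400, 700), ("to", 700, 800),
         ("the", 800, 900), ("channel", 900, 1500)]
print(to_srt(words, max_words=3))
```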

Smart homes and IoT

Smart home devices, like Google Home and Nest, also integrate speech recognition technology for a more seamless user experience. Accuracy is especially important for these and other IoT devices, since users interact with them through voice commands and expect timely responses.

Automotive

Speech recognition technology is also being integrated directly into vehicles to power navigational voice commands and in-vehicle entertainment systems.

Benefits of speech recognition: A game-changer for productivity and accessibility

Speech recognition technology offers a multitude of benefits across industries: increased productivity, improved operational efficiency, better accessibility, enhanced user experience, and more.

Jiminny, a leading conversation intelligence, sales coaching, and call recording platform, uses speech recognition to help customer success teams more efficiently manage and analyze conversational data. The insights teams extract from this data help them fine-tune sales techniques and build better customer relationships, helping them achieve a 15% higher win rate on average.

Qualitative data analysis platform Marvin built tools on top of speech recognition and Speech AI to help its users spend 60% less time analyzing data, significantly boosting productivity.

Screenloop, a hiring intelligence platform, integrated AI speech recognition to transcribe and analyze interview data. In addition to reduced time-to-hire and fewer rejected offers, Screenloop users spend 90% less time on manual hiring and interview tasks.

Lead intelligence company CallRail was an early adopter of speech recognition and Speech AI. Since its integration, its AI-powered conversation intelligence tools have increased call transcription accuracy by up to 23%. The company also doubled the number of customers using its conversation intelligence product.

Choosing the right speech recognition API: A buyer's guide

Choosing the best Speech-to-Text API or AI model for your project can seem daunting, but here are a few considerations to keep in mind.

1. Accuracy

Accuracy is one of the most important criteria for comparing speech recognition APIs. Word Error Rate, or WER, is a good baseline metric, but keep in mind that the type of audio (noisy environments versus quiet academic settings, for example) will affect WER. In addition, look for results on publicly available datasets so that the provider’s claims are transparent and replicable; the absence of such results is a red flag.
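WER counts the word-level substitutions (S), deletions (D), and insertions (I) needed to turn a model’s transcript into a reference transcript, divided by the number of words in the reference (N): WER = (S + D + I) / N. A minimal sketch using word-level edit distance follows. It only lowercases and splits on whitespace, so for a fair comparison, apply the same normalization (punctuation, numbers, casing) to every provider’s output.

```python
def wer(reference: str, hypothesis: str) -> float:
    """Word Error Rate = (substitutions + deletions + insertions) / reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")
    # d[i][j] = minimum edits to turn the first i reference words
    # into the first j hypothesis words
    d = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        d[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        d[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            substitution = 0 if ref[i - 1] == hyp[j - 1] else 1
            d[i][j] = min(d[i - 1][j] + 1,                  # deletion
                          d[i][j - 1] + 1,                  # insertion
                          d[i - 1][j - 1] + substitution)   # match or substitution
    return d[len(ref)][len(hyp)] / len(ref)


# One substitution (sat -> sit) and one deletion (the): 2 errors / 6 words
print(wer("the cat sat on the mat", "the cat sit on mat"))
```

Because insertions count as errors, WER can exceed 1.0 when a hypothesis is much longer than its reference.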

WER does have limitations, however: it says little about how readable a transcript is. Diff-checker tools, which let you compare two blocks of text and spot the differences at a glance, can help here.
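If you’d rather script the comparison than paste text into a web tool, Python’s standard `difflib` module can produce a word-level diff. This sketch lists only the spans that differ between a reference and a model transcript; the output format is our own choice for readability.

```python
import difflib


def word_diff(reference: str, hypothesis: str) -> list[str]:
    """List the word spans that differ between two transcripts."""
    ref, hyp = reference.split(), hypothesis.split()
    matcher = difflib.SequenceMatcher(a=ref, b=hyp, autojunk=False)
    changes = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            continue  # skip spans where both transcripts agree
        changes.append(f"{tag}: {' '.join(ref[i1:i2])!r} -> {' '.join(hyp[j1:j2])!r}")
    return changes


for change in word_diff("the cat sat on the mat", "the cat sit on mat"):
    print(change)
```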

2. Additional features and models

In addition to speech recognition, it can be helpful when a provider offers additional Natural Language Processing and Speech Understanding models and features, such as LLMs, Speaker Diarization, Summarization, and more. This will enable you to move beyond basic transcription and into AI analysis with greater ease.

3. Support

Building with AI can be tricky. A direct line of communication with customer success and support teams while you build makes for a smoother, faster path to deployment. Also review a company’s uptime reports, customer reviews, and changelogs for a more complete picture of the support you can expect.

4. Documentation

API documentation should be readily accessible and easy to follow, helping you get started with speech recognition faster. Quickstart guides, code examples, and integrations like SDKs will all be helpful resources, so ensure their availability prior to starting a project.

5. Pricing

Transparent pricing is also a necessity so that you can estimate your costs accurately before you build. Watch out for hidden costs, and check for volume discounts that can save money in the long term.
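To compare quotes, it can help to model the bill you expect. The sketch below assumes graduated volume tiers, where each band of hours is billed at its own rate; the tier boundaries and per-hour prices are invented for illustration, so substitute the figures from each provider’s published pricing.

```python
# Hypothetical graduated pricing: (upper bound of the band in hours, USD per hour).
TIERS = [(1_000, 0.40), (10_000, 0.30), (float("inf"), 0.20)]


def monthly_cost(hours: float, tiers: list[tuple[float, float]] = TIERS) -> float:
    """Bill each band of usage at that band's rate and sum the results."""
    cost, band_start = 0.0, 0.0
    for band_end, rate in tiers:
        if hours <= band_start:
            break  # usage doesn't reach this band
        cost += (min(hours, band_end) - band_start) * rate
        band_start = band_end
    return round(cost, 2)


print(monthly_cost(500))    # 200.0
print(monthly_cost(2_500))  # 850.0
```

For 2,500 hours, the first 1,000 hours are billed at $0.40 and the next 1,500 at $0.30, for a total of $850.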

6. Language support

If you need multilingual support, check that the provider supports the languages you need. Automatic Language Detection (ALD) is another useful feature: it detects the dominant language in an audio or video file and transcribes the file in that language.

7. Privacy and security

When dealing with large amounts of sensitive data, solid privacy and security practices are a must. Make sure your speech recognition provider can answer questions such as:

- Does the provider practice defense in depth?

- Does the API provider adhere to strict, industry-standard security frameworks?

- How much transparency does the provider offer into code-level controls?

- What technical controls support the security of my data?

Additionally, privacy measures like Personally Identifiable Information (PII) redaction help keep data private in particularly sensitive fields such as healthcare and customer service.
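As a simplified illustration of PII redaction, the sketch below replaces a few common patterns in a transcript with placeholder labels. The regular expressions are assumptions that cover only simple email addresses, US-style Social Security numbers, and US-style phone numbers; production redaction usually relies on trained entity-recognition models, and it works best after text formatting has turned spelled-out numbers into digits.

```python
import re

# Illustrative patterns only. SSN runs before PHONE so the more specific
# pattern is applied first (dicts preserve insertion order).
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]?\d{3}[-.\s]?\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace each matched PII span with a bracketed label such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


print(redact("Call me at 555-123-4567 or email jane.doe@example.com, my SSN is 123-45-6789."))
# Call me at [PHONE] or email [EMAIL], my SSN is [SSN].
```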

8. Innovation

The fields of speech recognition and Speech AI are evolving rapidly. When choosing an API, make sure the provider has a strong focus on AI research and a history of frequent model updates and optimizations, so that your speech recognition tool stays state-of-the-art.

The future of speech recognition: A glimpse into the voice-enabled world

Advancements in speech recognition and Speech AI continue to accelerate. Expect accuracy to keep improving, along with broader multilingual support and faster streaming (real-time) speech recognition.

We’ll also see speech recognition expand into new applications. Voice biometrics, for example, uses a person’s voice “print” to identify and authenticate them, and it is already being integrated into services like phone banking. Emotion recognition uses AI to detect human emotions in spoken audio, sometimes combined with facial analysis in video.
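Voice biometric systems commonly compare a fixed-length “voiceprint” embedding of a new recording against an embedding stored at enrollment. The sketch below shows that comparison with cosine similarity. The three-dimensional vectors and the 0.8 threshold are hypothetical stand-ins: real embeddings come from a trained speaker-encoder network and typically have hundreds of dimensions, and thresholds are tuned on evaluation data.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0.0:
        raise ValueError("embeddings must be non-zero vectors")
    return sum(x * y for x, y in zip(a, b)) / norm


def verify_speaker(enrolled: list[float], candidate: list[float],
                   threshold: float = 0.8) -> bool:
    """Accept the candidate if its voiceprint is close enough to the enrolled one."""
    return cosine_similarity(enrolled, candidate) >= threshold


# Hypothetical embeddings for illustration only.
enrolled = [0.9, 0.1, 0.3]
same_speaker = [0.85, 0.15, 0.28]
other_speaker = [0.1, 0.9, 0.2]
print(verify_speaker(enrolled, same_speaker))   # True
print(verify_speaker(enrolled, other_speaker))  # False
```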

In general, we can expect speech recognition technology to be integrated into nearly every aspect of daily life — from grocery checkouts to self-driving cars to home applications.

Still, concerns remain over the responsible use of speech recognition technology, especially around data privacy, data security, and biases in AI algorithms. Open conversations with AI providers can help address some of these concerns and help you assess their commitment to moving the field forward responsibly.

Unlock the power of speech with AssemblyAI

Speech recognition is a transformative technology that will change the way consumers and businesses interact with audio and video on a daily basis.

For example, AssemblyAI’s Universal-1 speech recognition model, launched in early 2024, is raising the industry bar for Speech AI. Universal-1 was trained on over 12.5 million hours of multilingual audio data, helping it achieve:

- more than 92.5% accuracy in almost all conditions

- significantly reduced latency

- strong multilingual transcription

Not only does a model like Universal-1 provide highly accurate speech-to-text transcription, but it will also help power the next generation of AI products and tools built on top of this voice data at greater accuracy and speed.

If you would like to test Universal-1 yourself, you can play around with speech transcription and speech understanding in the AssemblyAI playground, or sign up for a user account to get $50 in credits.
