Biggest challenges in building AI voice agents (and how AssemblyAI & Vapi are solving them)

Building AI Voice Agents comes with challenges like handling interruptions, background noise, and latency. Learn how AssemblyAI & Vapi are solving them for faster, smarter voice AI.

AI Voice Agents are becoming a key part of how businesses handle customer interactions, scheduling, real-time transcription, and automation. But while AI-powered voice interactions have come a long way, building an AI Voice Agent that feels human-like, adapts in real-time, and integrates seamlessly into business workflows is still a complex challenge.

During our YouTube livestream with Jordan Dearsley, CEO of Vapi, we discussed some of the biggest technical challenges developers face when building AI Voice Agents and how modern AI solutions—like Vapi’s Workflows and AssemblyAI’s Streaming Speech-to-Text API—are solving them.

Whether you’re a developer, AI researcher, or business leader, understanding these challenges is crucial to building better AI-powered voice interactions.

Interruptions and overlapping speech: Making AI conversations feel natural

In human conversations, interruptions happen all the time. People talk over each other, change topics mid-sentence, and pause before finishing a thought. A well-designed AI Voice Agent needs to handle interruptions in a way that feels natural—but that’s much harder than it sounds.

Many AI systems struggle with rigid, turn-based conversation models, meaning they either talk over the user or wait too long to respond, making interactions feel robotic.

How modern AI voice agents handle interruptions

Vapi solves this by orchestrating real-time speech processing with low-latency models. This means the AI Voice Agent can:

✔ Detect when a user starts speaking and immediately stop talking.

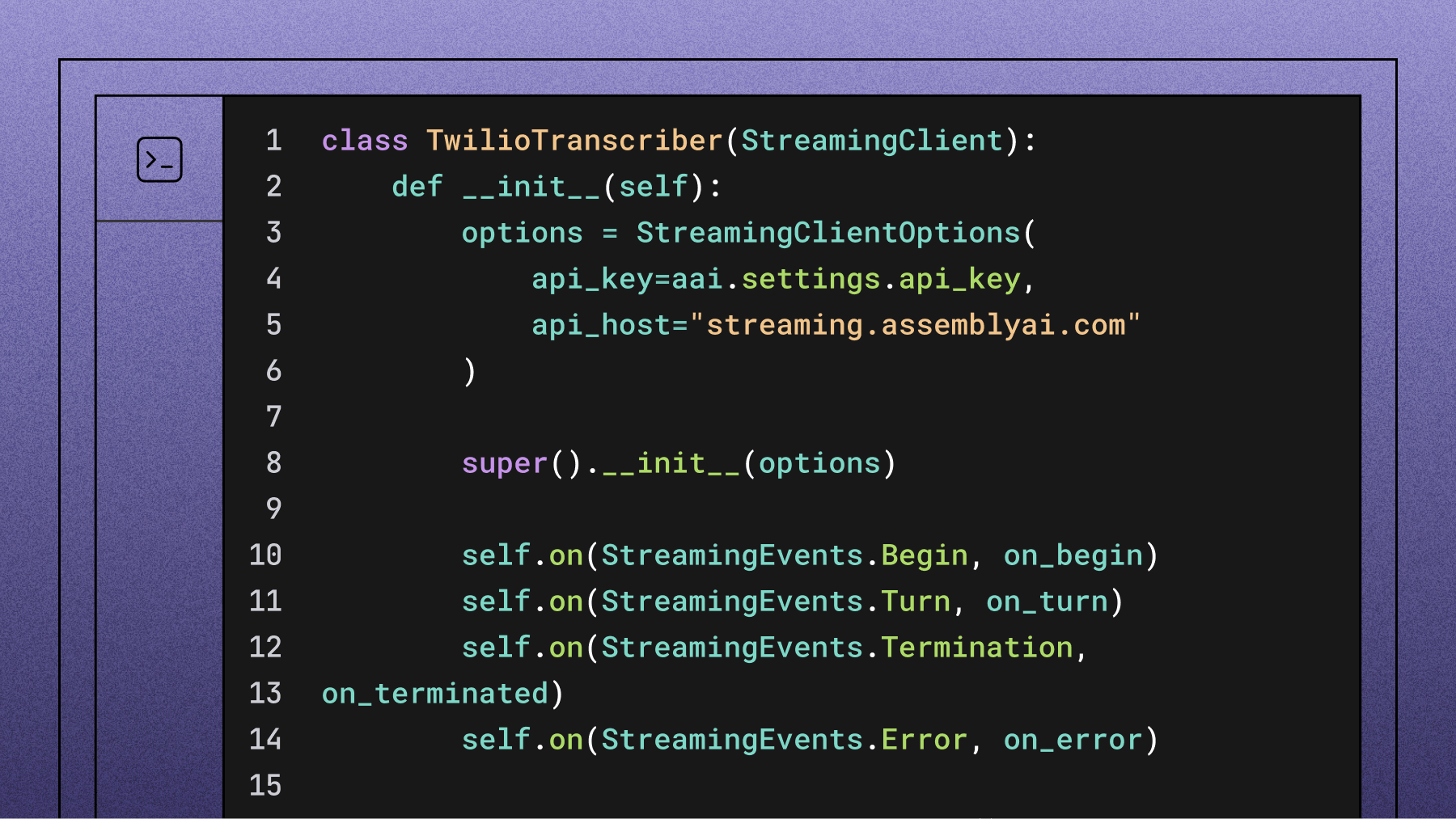

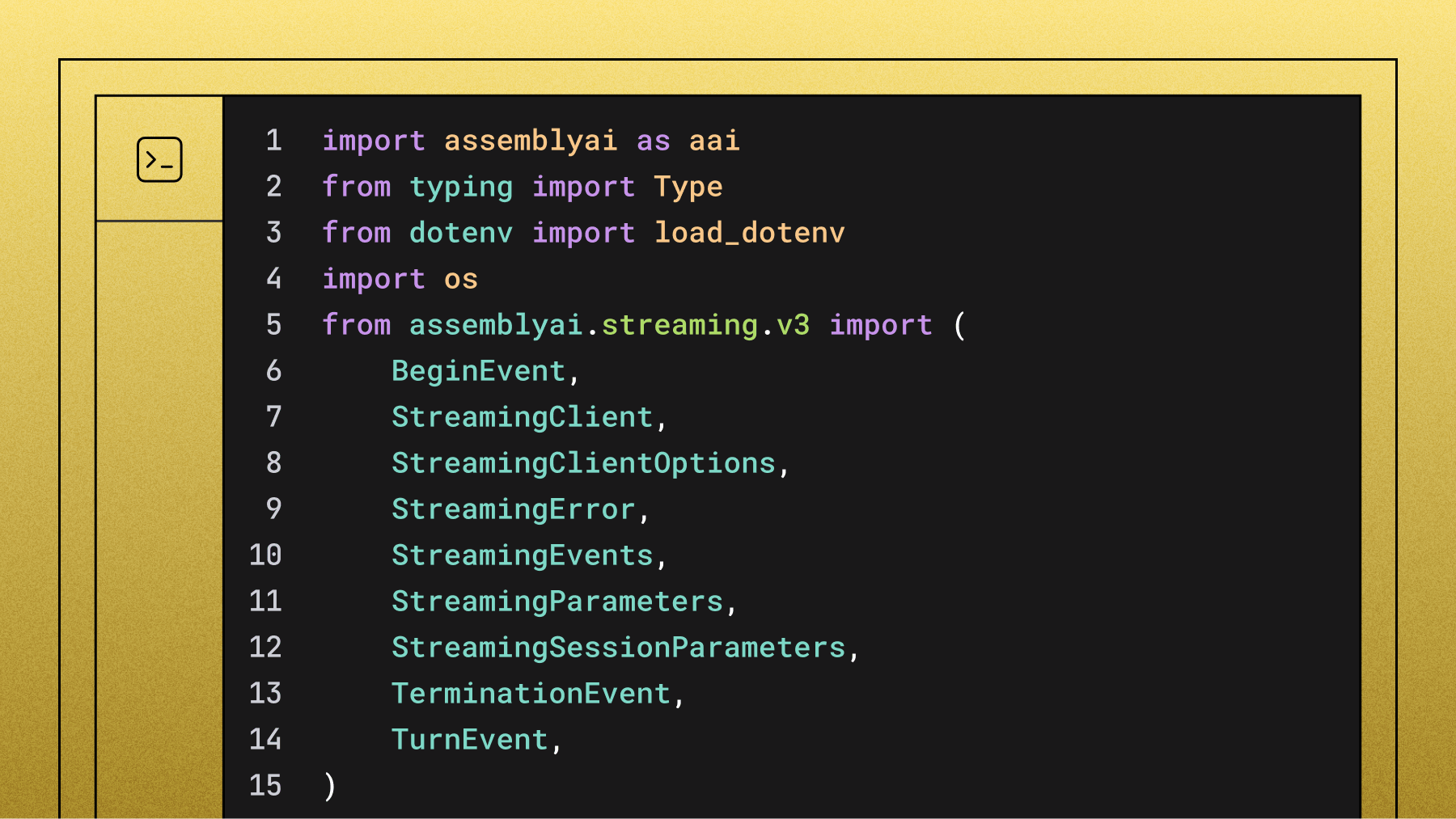

✔ Use AssemblyAI’s Streaming Speech-to-Text API, which transcribes speech within a few hundred milliseconds, so the AI can react about as quickly as a human would.

✔ Adjust its conversation flow based on intent—if a user says “Wait, I need to change that,” the AI can pause and respond accordingly.

The result? Conversations that feel fluid and responsive, rather than frustrating and rigid.
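To make the "stop talking when the user starts speaking" behavior concrete, here is a minimal sketch of barge-in handling using Python's `asyncio`. It is not Vapi's implementation: the `speak` and `run_turn` functions, the chunked playback, and the `user_started_speaking` event are all hypothetical stand-ins for a real TTS player and a real speech-detection signal.

```python
import asyncio


async def speak(chunks, spoken):
    """Simulate TTS playback one chunk at a time (hypothetical stand-in)."""
    for chunk in chunks:
        spoken.append(chunk)
        await asyncio.sleep(0.05)  # stand-in for the time it takes to play audio


async def run_turn(chunks, user_started_speaking):
    """Play the agent's reply, but stop as soon as the user starts talking."""
    spoken = []
    playback = asyncio.create_task(speak(chunks, spoken))
    barge_in = asyncio.create_task(user_started_speaking.wait())

    # Wait for whichever happens first: playback finishes or the user speaks.
    done, _ = await asyncio.wait(
        {playback, barge_in}, return_when=asyncio.FIRST_COMPLETED
    )
    interrupted = barge_in in done and playback not in done

    # Cancel whichever task is still pending, then let both settle.
    (playback if interrupted else barge_in).cancel()
    await asyncio.gather(playback, barge_in, return_exceptions=True)
    return spoken, interrupted


async def demo():
    event = asyncio.Event()
    # Pretend the user starts speaking 120 ms into the agent's reply.
    asyncio.get_running_loop().call_later(0.12, event.set)
    return await run_turn(["Your", "appointment", "is", "at", "noon"], event)


if __name__ == "__main__":
    spoken, interrupted = asyncio.run(demo())
    print(f"interrupted={interrupted}, spoken so far={spoken}")
```

In a real agent, the event would be set by the streaming transcription or voice-activity signal, and cancelling playback would also flush any audio already buffered for the caller.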

Maintaining context in conversations

One of the biggest frustrations with AI Voice Agents is when they forget what was said earlier in the conversation. Imagine booking an appointment, providing your name at the start, and then being asked for it again later.

This happens because many AI models process conversations in short bursts, meaning they don’t inherently remember past interactions unless they have a structured way to store and recall information.

The power of AI conversation workflows

Vapi solves this by introducing structured workflows that let developers define how an AI Voice Agent should:

✔ Store important details (like a user’s name or appointment time) throughout a session.

✔ Retrieve context when needed, rather than relying on an LLM to "remember."

✔ Reduce hallucinations: LLMs sometimes make up details when trying to recall past information, so reading those details from structured memory helps prevent such errors.

With these improvements, AI Voice Agents can handle long, multi-turn conversations more intelligently—whether it’s helping a customer book a service or answering follow-up questions about a previous request.
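The idea of storing and retrieving details explicitly, rather than hoping the LLM remembers them, can be sketched roughly as follows. This is an illustration only, not Vapi's Workflows API: `SessionState`, its methods, `next_prompt`, and the slot names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class SessionState:
    """Hypothetical per-call memory: named slots captured during the conversation."""
    required: tuple
    slots: dict = field(default_factory=dict)

    def store(self, name, value):
        self.slots[name] = value

    def recall(self, name):
        # Returns None when the detail was never captured, instead of guessing.
        return self.slots.get(name)

    def missing(self):
        return [name for name in self.required if name not in self.slots]


def next_prompt(state):
    """Ask only for details that have not been captured yet."""
    missing = state.missing()
    if missing:
        return f"Could you tell me your {missing[0].replace('_', ' ')}?"
    return "Thanks, I have everything I need."


if __name__ == "__main__":
    state = SessionState(required=("name", "appointment_time"))
    state.store("name", "Dana")
    print(next_prompt(state))  # asks for the appointment time, not the name again
```

Because `recall` returns `None` for anything that was never stored, the agent can ask the caller instead of letting the model invent a value.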

Background noise: Filtering out distractions

AI Voice Agents often operate in noisy environments—call centers, busy offices, or homes with background conversations. Unlike humans, who can instinctively focus on one speaker, AI models tend to transcribe everything they hear, leading to messy and inaccurate results.

To improve accuracy in real-world conditions, Vapi relies on high-quality speech-to-text models, such as AssemblyAI’s, to handle noisy audio. AssemblyAI’s Streaming Speech-to-Text API is designed to differentiate speech from background noise, so AI Voice Agents can focus on the primary speaker even in challenging environments. Combining accurate transcription with noise-adaptive speech recognition lets Vapi process conversations more reliably in less-than-ideal audio conditions.

These improvements allow AI Voice Agents to work in dynamic, real-world settings without getting confused by background chatter.

Latency: The key to human-like conversations

One of the biggest factors that make an AI Voice Agent feel natural or frustrating is latency. Delays of even a second can break the flow of conversation, making interactions feel robotic.

This is particularly challenging because AI Voice Agents combine multiple steps in real time:

1️⃣ Speech-to-Text (STT) – Converting speech into text.

2️⃣ Processing & AI Logic (LLM or other models) – Understanding intent and generating a response.

3️⃣ Text-to-Speech (TTS) – Converting the AI-generated response back into speech.

If any step introduces too much delay, the entire experience suffers.
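Because the three steps run one after another, their delays add up. A quick back-of-the-envelope sketch shows how a single slow stage can push a whole turn past the one-second mark. The function names are hypothetical, and only the STT figure echoes the "few hundred milliseconds" range mentioned in this post; the LLM and TTS numbers are made-up assumptions for illustration.

```python
# Illustrative per-stage latencies in milliseconds (LLM and TTS values are assumptions).
STAGE_LATENCY_MS = {"stt": 300, "llm": 500, "tts": 200}


def turn_latency_ms(stages):
    """Total response delay when the stages run sequentially."""
    return sum(stages.values())


def slowest_stage(stages):
    """Name of the stage contributing the most delay."""
    return max(stages, key=stages.get)


def within_budget(stages, budget_ms=1000):
    """True when the whole turn completes in under the budget."""
    return turn_latency_ms(stages) < budget_ms


if __name__ == "__main__":
    print(turn_latency_ms(STAGE_LATENCY_MS), slowest_stage(STAGE_LATENCY_MS))
    # Swapping in a faster (hypothetical) LLM brings the turn back under budget.
    faster = {**STAGE_LATENCY_MS, "llm": 250}
    print(turn_latency_ms(faster), within_budget(faster))
```

Measuring each stage separately like this makes it clear where optimization effort pays off most.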

How Vapi optimizes AI voice agent latency

✔ Low-latency STT: AssemblyAI’s Streaming Speech-to-Text API transcribes speech in just a few hundred milliseconds so AI Voice Agents can react quickly.

✔ Optimized Orchestration: Vapi allows developers to choose their own models (STT, LLM, TTS) based on performance needs, ensuring minimal processing delays.

✔ Efficient AI Processing: By structuring conversations into step-by-step workflows, Vapi reduces the need for repeated API calls and unnecessary processing time.

The end result? A faster, more responsive AI Voice Agent that keeps the conversation fluid and natural.

The future of AI voice agents: More flexibility & smarter workflows

AI Voice Agents are evolving, and while technology is improving, flexibility remains key for companies that want to build custom AI solutions.

Vapi’s approach is to orchestrate different AI components—not replace them. Companies can choose their own STT, LLM, and TTS providers, allowing for granular control over performance, cost, and accuracy.

Rather than pushing toward a single speech-to-speech model, this modularity ensures that businesses can fine-tune AI Voice Agents to their exact needs.
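One way to picture this modularity is a pipeline that depends only on small interfaces, so any provider that satisfies them can be swapped in. The sketch below is an assumption-laden illustration, not Vapi's API: the `SpeechToText`, `LanguageModel`, and `TextToSpeech` protocols, the `VoicePipeline` class, and the toy providers are all hypothetical.

```python
from dataclasses import dataclass
from typing import Protocol


class SpeechToText(Protocol):
    def transcribe(self, audio: bytes) -> str: ...


class LanguageModel(Protocol):
    def reply(self, text: str) -> str: ...


class TextToSpeech(Protocol):
    def synthesize(self, text: str) -> bytes: ...


@dataclass
class VoicePipeline:
    """Orchestrates one turn: audio in, audio out, with swappable components."""
    stt: SpeechToText
    llm: LanguageModel
    tts: TextToSpeech

    def handle_turn(self, audio: bytes) -> bytes:
        text = self.stt.transcribe(audio)
        response = self.llm.reply(text)
        return self.tts.synthesize(response)


# Toy providers so the sketch runs without any external service.
class FakeSTT:
    def transcribe(self, audio: bytes) -> str:
        return audio.decode("utf-8")


class EchoLLM:
    def reply(self, text: str) -> str:
        return f"You said: {text}"


class FakeTTS:
    def synthesize(self, text: str) -> bytes:
        return text.encode("utf-8")


if __name__ == "__main__":
    pipeline = VoicePipeline(stt=FakeSTT(), llm=EchoLLM(), tts=FakeTTS())
    print(pipeline.handle_turn(b"hello"))
```

Replacing any one component means passing a different object to `VoicePipeline`; the orchestration code itself does not change.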

Get started: Build your AI voice agent today

Want to start building AI Voice Agents that handle interruptions, maintain context, and work in noisy environments? Here’s where to start:

✅ Try AssemblyAI’s Streaming Speech-to-Text API → https://www.assemblyai.com/docs/speech-to-text/streaming

✅ Explore Vapi’s AI Voice Agent platform → https://vapi.ai/

✅ Watch the full livestream here → https://youtu.be/8bfX79VC4GU

The next generation of AI Voice Agents is here—faster, more flexible, and more human-like than ever.
