Voice is rapidly becoming the primary way we interact with AI, and market projections show the speech recognition technology market is expected to reach $29.28 billion by 2026. By 2025, the shift from typing commands to simply speaking them feels less like science fiction and more like inevitable reality.

The numbers tell a compelling story. Recent industry research shows that enterprises are rapidly adopting Voice AI technology, with a recent report finding that 62% of organizations are either experimenting with or scaling AI agents, considering them foundational to their operations. Yet there's a massive gap between what's possible and what's being delivered—organizations report significant dissatisfaction with their current voice systems.

This disconnect represents an enormous opportunity. While legacy IVR systems frustrate customers with endless menu trees, modern voice AI agents understand natural language, maintain context, and solve problems in real-time—and one survey shows that around 90% of companies using them report faster complaint resolution. Organizations recognize this potential—nearly all plan Voice AI deployments as early innovators move from experimentation to production.

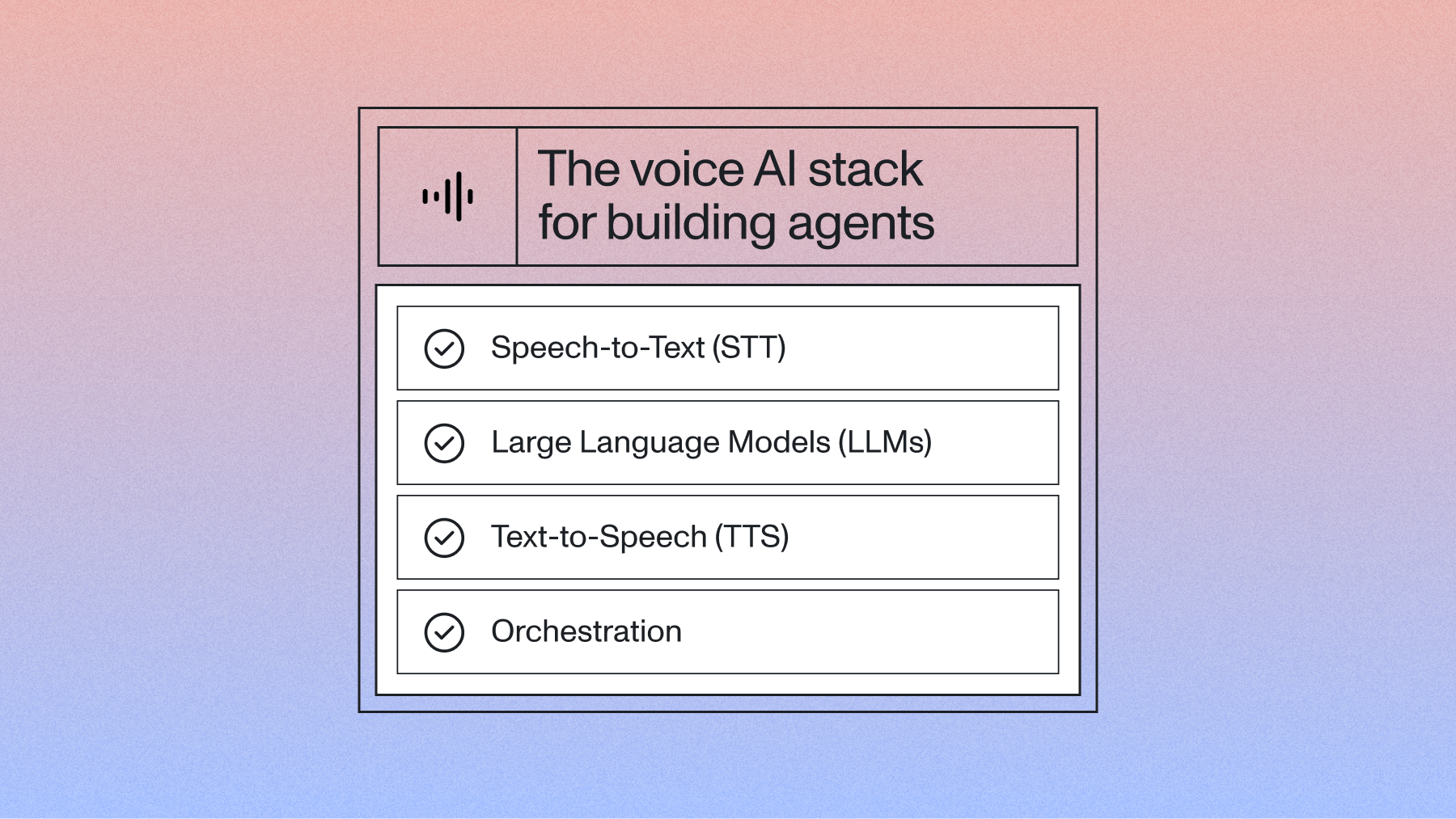

Building effective voice agents requires understanding the underlying technology stack. This isn't just about connecting an API—it's about orchestrating multiple AI systems to create seamless, human-like interactions. We'll explore the four essential components of the Voice AI stack, examine different architectural approaches, and provide guidance for making informed implementation decisions.

Deconstructing the modern AI voice agent stack

A Voice AI stack is the integrated set of AI technologies that enables computers to have natural conversations with humans. Every voice agent relies on four essential pillars working in harmony: Speech-to-Text (STT) as the "ears," Large Language Models (LLMs) as the "brain," Text-to-Speech (TTS) as the "voice," and orchestration as the "conductor" managing the real-time flow between components.

Here's how a typical interaction unfolds:

- User speaks: "Can you help me reschedule my appointment for tomorrow?"

- STT processes: Converts audio to text with proper formatting and punctuation

- LLM understands: Interprets intent, accesses calendar data, generates response

- TTS synthesizes: Converts response text to natural-sounding speech

- User hears: "I can help you reschedule. What time would work better for you?"

This modular approach persists even as end-to-end models emerge. Different components have specialized optimization requirements:

- STT: Accuracy and low latency for real-time processing

- LLMs: Advanced reasoning and context management

- TTS: Natural prosody and emotional expression

The core challenge is latency. Sequential processing creates cumulative delays that turn natural conversation into awkward exchanges where users wonder if their agent is still listening.

Voice AI agent architecture

┌─────────────────────────────────────────────────────────────┐

│ Voice AI Agent Stack │

├─────────────────────────────────────────────────────────────┤

│ User Speech Input │

│ ↓ │

│ ┌─────────────────┐ │

│ │ STT/ASR │ ← Converts speech to text │

│ │ "The Ears" │ (accuracy, latency, endpointing) │

│ └─────────────────┘ │

│ ↓ │

│ ┌─────────────────┐ │

│ │ LLM │ ← Understands intent & generates │

│ │ "The Brain" │ responses (reasoning, context) │

│ └─────────────────┘ │

│ ↓ │

│ ┌─────────────────┐ │

│ │ TTS │ ← Converts text to speech │

│ │ "The Voice" │ (naturalness, latency, emotion) │

│ └─────────────────┘ │

│ ↓ │

│ Audio Response Output │

│ │

│ ┌─────────────────────────────────────────────────────────┤

│ │ Orchestration Layer │

│ │ "The Conductor" │

│ │ • Real-time streaming management │

│ │ • Turn-taking and interruption handling │

│ │ • Conversation state tracking │

│ │ • External API integration │

│ └─────────────────────────────────────────────────────────┘

Explore real-time transcription quality

Upload audio to see punctuation and formatting in action. Evaluate accuracy on names, numbers, and domain terms for voice agents.

Open playground

Voice AI architecture patterns

Before diving into individual components, it's crucial to understand the architectural patterns that govern how they interact. The right architecture is the difference between a clunky, delayed agent and one that feels responsive and natural.

Cascading pipelines

This is the most straightforward approach. Each step in the process happens sequentially: audio is sent to the speech-to-text model, the full transcript is sent to the LLM, the LLM's full response is sent to the text-to-speech model, and finally, the audio is played back. While simple to build and debug, this pattern introduces significant cumulative latency, making it unsuitable for real-time conversations.

Streaming architectures

To solve the latency problem, modern voice agents use streaming architectures. Here, data flows continuously between components. The STT model transcribes audio in chunks, the LLM starts generating a response as soon as it receives the first few words, and the TTS model begins synthesizing audio before the LLM has finished its thought. This parallelism dramatically reduces perceived latency and is essential for building agents that can be interrupted and hold a natural conversation.

End-to-end models

A newer approach involves using a single, large AI model that handles the entire process from speech-to-speech. While promising, these models are still maturing. They often lack the flexibility and specialized performance of a modular, streaming architecture. For most production use cases today, a well-orchestrated streaming stack offers the best balance of performance, control, and quality.

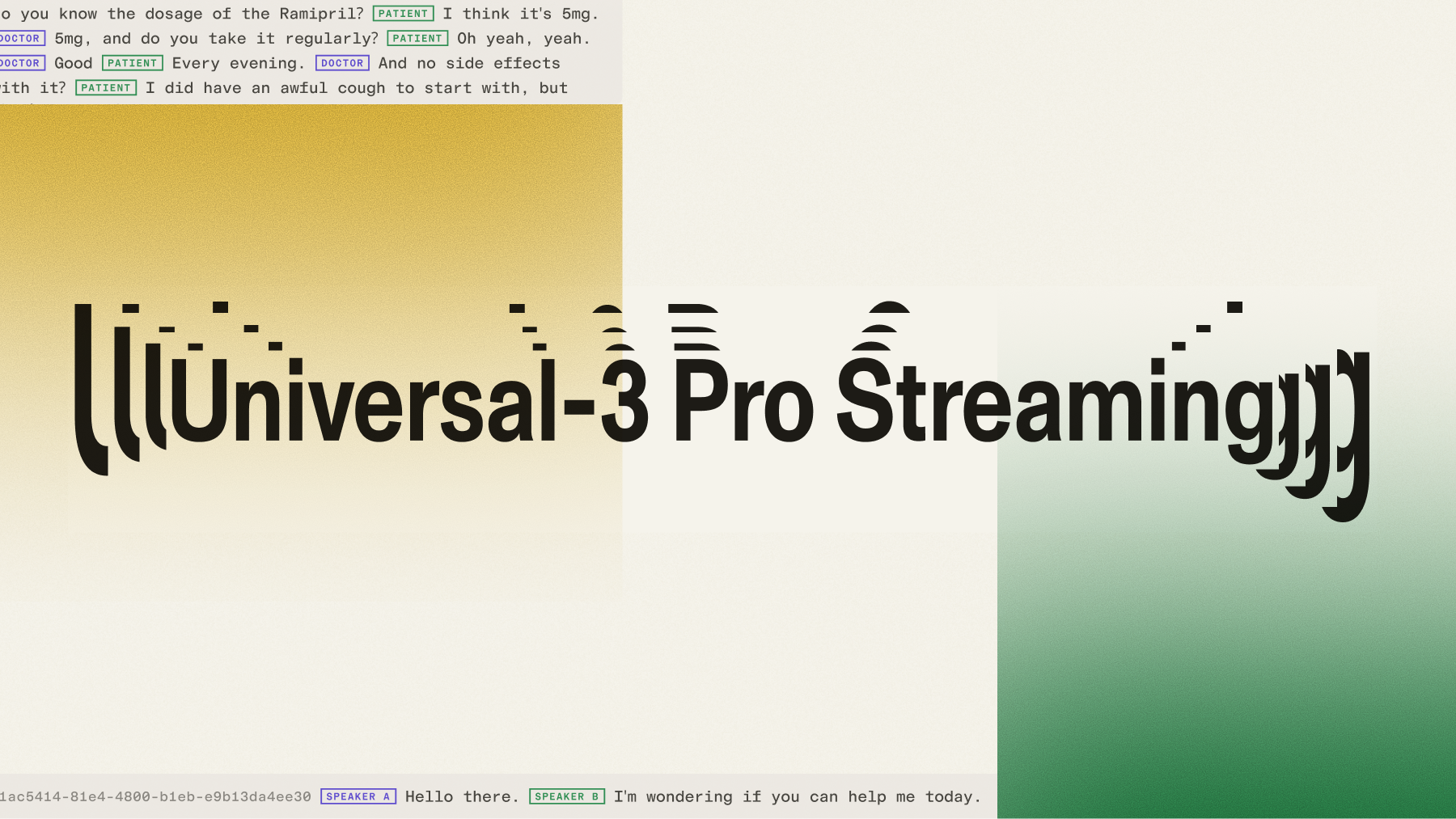

Speech-to-text: The foundation

STT serves as the critical entry point for voice agents. The "garbage in, garbage out" principle applies heavily here—poor transcription quality cascades through the entire system, leading to misunderstood requests and frustrated users.

Beyond basic accuracy, voice agents need STT systems optimized for conversation. Standard Word Error Rate (WER) metrics don't capture what matters most: proper formatting, correct punctuation, and accurate handling of domain-specific terminology. A system with excellent WER might still perform poorly on phone numbers, addresses, or technical terms that are crucial for business applications.

As implementation guides confirm, voice agents demand STT response times under 500ms to maintain a natural, truly conversational flow. This means streaming architectures process audio incrementally rather than waiting for complete utterances.

Intelligent endpointing represents another crucial capability. Voice agents need to detect natural speech boundaries—knowing when users have finished speaking versus when they're simply pausing to think. Poor endpointing leads to agents interrupting users or waiting awkwardly long for responses that aren't coming.

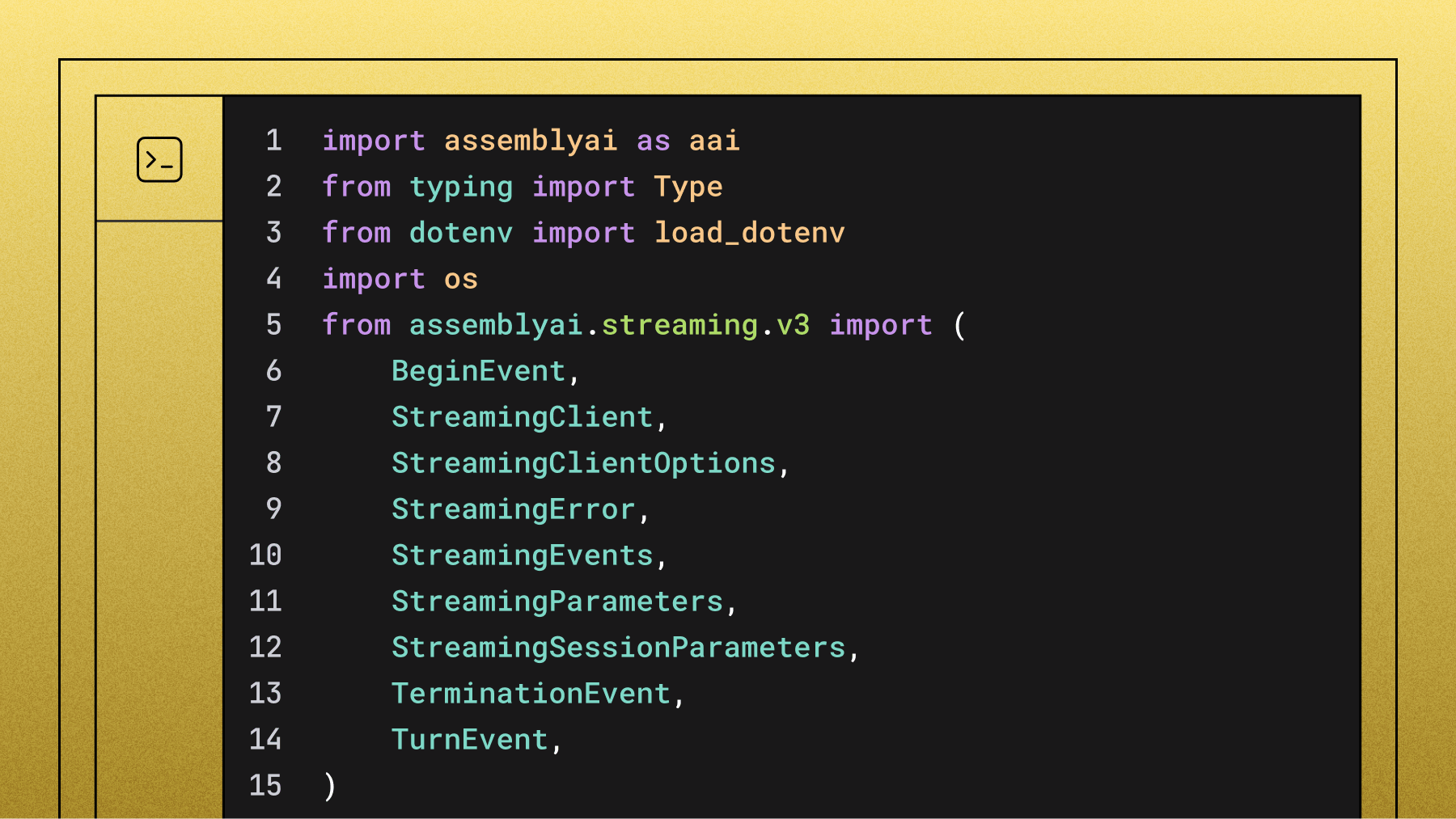

AssemblyAI's Universal-Streaming model, for example, offers real-time transcription with ~300ms immutable transcripts at $0.15 per hour, featuring intelligent endpointing optimized for voice agent applications.

The performance demonstrates consistent advantages across the metrics that matter most for voice agents: proper noun recognition, alphanumeric accuracy, and real-world conversation handling.

Build with Universal-Streaming STT

Get ~300ms real-time transcripts with intelligent endpointing. Optimized for responsive, interruptible voice agents.

Sign up free

Text-to-speech: Giving voice to AI agents

TTS quality directly impacts user perception and trust, as consumer research shows that 47% of people are concerned about AI handling customer service calls. Robotic or unnatural voices immediately signal "artificial" to users, creating psychological barriers that affect engagement and task completion rates.

Modern TTS systems are measured on several key metrics:

Time to First Byte (TTFB) should ideally stay under 200ms for responsive interaction. Streaming TTS architectures can begin audio playback while still generating the complete response. This dramatically improves perceived latency.

Mean Opinion Score (MOS) ratings above 4.0 indicate human-like quality. Recent advances in neural synthesis have pushed commercial systems well into this range. This makes artificial voices nearly indistinguishable from human speech in many contexts.

Emotional expression and prosody add naturalness to responses. Advanced TTS can convey appropriate emotions—empathy when users are frustrated, enthusiasm when sharing positive news, or urgency during time-sensitive situations.

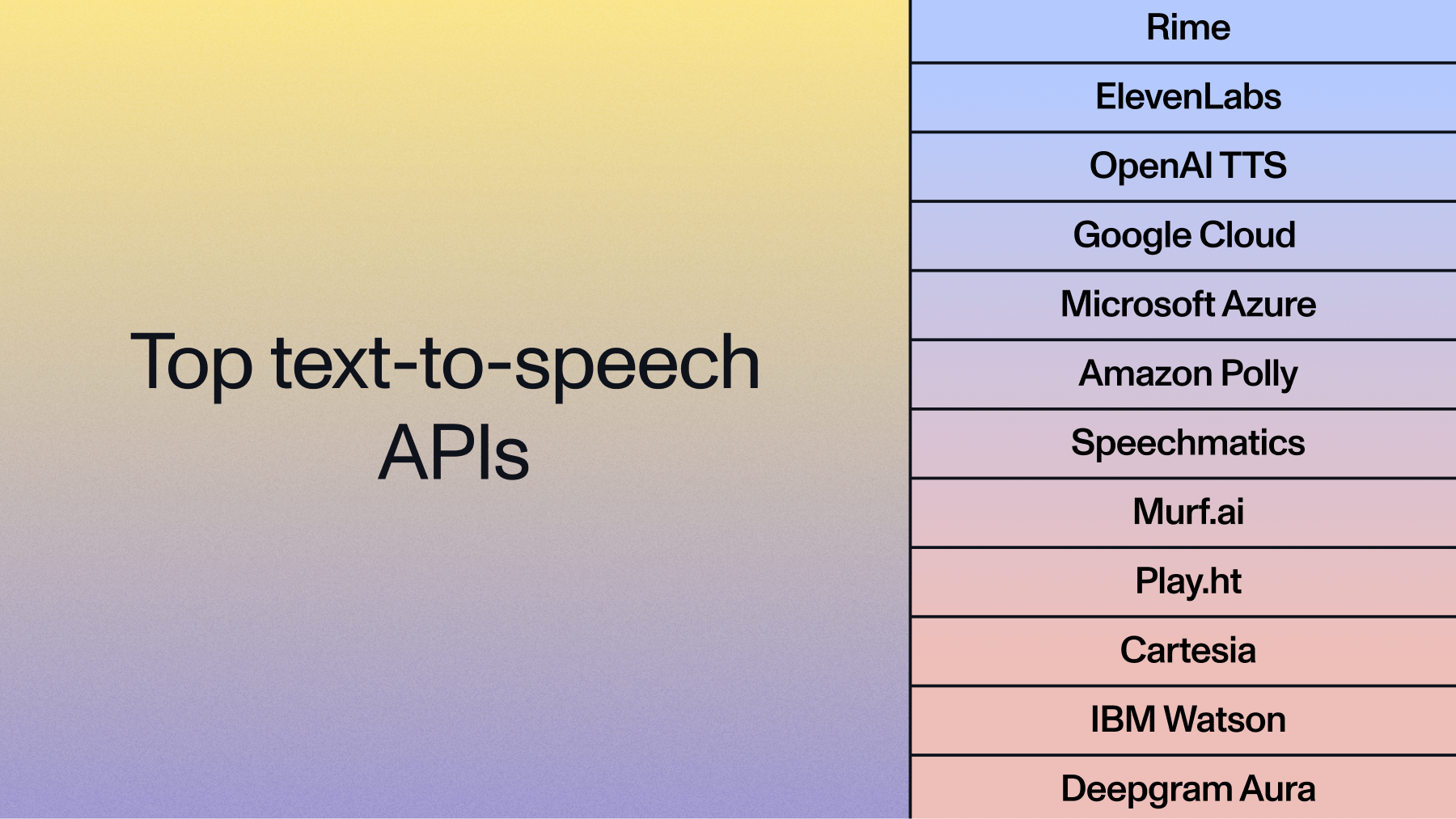

Leading TTS providers offer different latency-quality tradeoffs:

- Cartesia: Ultra-low latency applications

- ElevenLabs: Extensive voice customization options

- Rime: Realistic voices with emotion and quality focus

Voice customization allows organizations to maintain brand identity through AI interactions. Custom voices can reflect company personality while still delivering the naturalness users expect from modern voice interfaces.

LLMs: The brain of Voice AI agents

Large Language Models serve as the reasoning engine for voice agents, but their requirements differ significantly from text-based applications. Voice interactions demand faster response times, conversational ability, and seamless integration with external systems.

Latency optimization becomes paramount. While a chatbot user might accept 2-3 second response times, voice conversation feels broken with similar delays. Time to First Token (TTFT) and Time to First Byte (TTFB) metrics matter more than throughput for voice applications.

Conversational ability extends beyond simple question-answering. Voice agents need to maintain context across multiple turns. They must handle interruptions gracefully and adapt their communication style to match user preferences and emotional states.

Function calling capabilities enable agents to interact with external systems—checking calendars, placing orders, or retrieving customer data. The LLM needs to understand when to call functions, format parameters correctly, and incorporate results into natural responses.

Model selection involves balancing capability with latency. Key considerations include:

Model sizing:

- Smaller, faster models (like Gemini 2.5 Flash-Lite or Claude 4.5 Haiku) for straightforward interactions where low latency is critical, especially as, according to recent analysis, the inference cost for smaller, capable models has dropped dramatically.

- Larger, more powerful models (like Gemini 3 Pro or GPT-5.2) for complex reasoning, even if it introduces a slight latency penalty.

Optimization strategies:

- Prompt engineering for conciseness

- Response streaming to reduce perceived latency

- Intelligent caching for frequent requests

- Multiple LLM tiers with strategic handoffs

Orchestration

Orchestration manages the real-time complexity that makes voice agents work seamlessly. This goes far beyond simple data passing between components—it creates natural conversation flow in a fundamentally asynchronous system.

Leading orchestration approaches fall into two categories:

Frameworks like Vapi, LiveKit Agents, and Daily/Pipecat provide building blocks for custom voice agent development. These offer maximum flexibility but require more development expertise.

All-in-One Platforms like Bland, Retell, and Synthflow provide complete voice agent solutions with simplified deployment. These reduce development time but limit customization options for specialized use cases.

Essential orchestration capabilities include:

- Real-time streaming management coordinates audio flow between components while handling network issues, buffer management, and quality adaptation based on connection conditions.

- Turn-taking and interruption handling detect when users want to interrupt the agent. Systems gracefully stop speech synthesis and immediately process new input without losing context.

- Conversation state tracking maintains dialogue history, user preferences, and session context across potentially long interactions spanning multiple topics and function calls.

- External API integration connects voice agents to business systems, databases, and third-party services with proper error handling and fallback strategies.

The choice between frameworks and platforms depends on customization needs versus development speed. Startups often prefer all-in-one solutions for rapid prototyping. As one founder advised in a recent survey, it's best to "Use an AI provider for as long as possible. The technology is evolving quickly—you won't be able to keep pace with your own tech." Enterprises typically require the flexibility that frameworks provide.

For a voice agent, performance isn't just a technical detail—it's the core of the user experience. A slow or inaccurate agent is a failed agent.

Key performance concepts:

- Latency: Time delay between user input and agent response

- Accuracy: Correct transcription and understanding of user requests

- Concurrency: Number of simultaneous conversations the system can handle

When evaluating your stack, focus on what truly matters in live conversation.

Metric | Target Range | Impact on User Experience |

|---|

Time to First Byte (TTFB) | < 200ms | Critical for perceived responsiveness |

Total Response Time | < 1500ms | Maintains natural conversation flow |

Word Error Rate (WER) | < 5% | Ensures accurate understanding |

Concurrent Sessions | Varies by scale | Determines system scalability |

Latency: This is the most critical factor. You should measure Time to First Byte (TTFB) for both the LLM and TTS components. The goal is to get the agent speaking as quickly as possible to signal that it's responsive.

Accuracy: Word Error Rate (WER) is a starting point, but as academic analysis shows, it doesn't tell the whole story, since performance is significantly influenced by the dataset and real-world noise. You need to test for accuracy on domain-specific terms, names, and numbers that are critical to your use case. A single misrecognized digit in a phone number can break the entire interaction.

Concurrency: How many simultaneous conversations can your stack handle without degradation? This is vital for scaling your application. Test your infrastructure under load to find bottlenecks before your users do.

Optimization strategies

Achieving low latency requires a holistic approach. Start by streaming every component in your stack. Use an STT model with intelligent endpointing to know precisely when the user has finished speaking.

For your LLM, prompt engineering for concise responses can significantly reduce inference time. Consider using a faster, specialized LLM for initial responses and a more powerful one for complex reasoning to balance speed and capability.

Optimization Technique | Component | Potential Latency Reduction |

|---|

Streaming Processing | All | Major improvement |

Intelligent Endpointing | STT | Moderate improvement |

Response Caching | LLM | Significant for common queries |

Edge Deployment | Infrastructure | Reduces network overhead |

Prompt Optimization | LLM | Moderate to major improvement |

Conversational agent frameworks and end-to-end solutions

Specialized voice agent frameworks abstract away much of the technical complexity while providing voice-specific optimizations. These platforms handle the intricate details of audio processing, real-time coordination, and conversation management.

Development platforms offer comprehensive toolkits for building sophisticated voice agents. They typically include pre-built integrations with major STT, LLM, and TTS providers, conversation design tools, and deployment infrastructure. The focus remains on business logic rather than technical implementation.

Low-code/no-code options enable faster deployment for standard use cases. These platforms provide visual workflow builders, template libraries, and drag-and-drop integration tools. While less flexible than code-based approaches, they significantly reduce time-to-market for common voice agent applications.

Enterprise-focused solutions add advanced capabilities like multi-tenant management, detailed analytics, compliance features, and integration with existing business systems. These platforms often include professional services for custom development and optimization.

End-to-end platforms provide complete voice agent solutions with unified APIs. These simplify integration by handling all stack components internally, though with less flexibility for customization.

Key selection criteria include:

- Integration ecosystem: Native support for preferred STT, LLM, and TTS providers

- Scalability requirements: Concurrent user limits and geographic deployment options

- Customization needs: Ability to modify conversation flow, integrate custom logic, and access low-level controls

- Deployment flexibility: Cloud, on-premise, or hybrid hosting options

- Development team expertise: Technical capability and preferred development approaches

Choosing the right architecture for your Voice AI agent

Two primary architectural patterns emerge for Voice AI agent implementations. Each offers distinct tradeoffs between complexity and performance.

Architecture Patterns Comparison

Pattern | Latency | Complexity | Flexibility | Best For |

|---|

Cascading Pipeline | High (800-2000ms) | Low | High | Prototyping, asynchronous use cases |

Streaming Pipeline | Low (<500ms) | Medium | High | Real-time conversation, production agents |

All-in-One APIs | Medium (500-1200ms) | Low | Low | Quick deployment, standard use cases |

Cascading Pipeline architecture processes each component sequentially. User speaks → STT completes → LLM processes → TTS generates → response plays. This approach is simple to implement and debug but creates cumulative latency that makes conversation feel unnatural.

All-in-One APIs provide complete voice agent functionality through single endpoints. These platforms handle internal optimization and coordination, offering medium latency with simple integration. However, they limit flexibility for custom requirements or specialized component selection.

Latency contributions vary significantly by component and implementation:

- STT: 100-500ms depending on streaming vs. batch processing

- LLM: 200-2000ms based on model size and prompt complexity

- TTS: 200-800ms influenced by streaming architecture and synthesis quality

- Network overhead: 50-200ms for API calls and audio transmission

Optimization strategies for achieving low-latency performance include:

- Streaming STT with intelligent endpointing reduces transcription latency

- Response streaming from LLMs enables TTS to begin before complete text generation

- Predictive caching pre-computes common responses for instant delivery

- Edge deployment minimizes network latency through geographic proximity

Universal-Streaming provides the foundation for modern streaming architectures with ~300ms transcription latency, intelligent endpointing, and seamless integration with real-time orchestration platforms. This enables voice agents to achieve the natural conversation flow users expect from production systems.

The architectural choice ultimately depends on your specific requirements:

- Choose a Streaming architecture for production systems, customer-facing applications, or anywhere natural conversation is essential. This pattern minimizes latency and enables responsive, interruptible agents.

- Choose an All-in-One API for rapid deployment, standard use cases, or teams with limited Voice AI expertise who need a managed solution.

- Choose a Cascading pipeline only for asynchronous tasks or early prototypes where real-time interaction is not a requirement.

Final words

Voice AI represents a fundamental shift in how humans interact with technology. The stack we've explored—STT, LLMs, TTS, and orchestration—forms the foundation for this transformation, but success lies in the thoughtful integration of these components.

The opportunity is massive, as industry forecasts predict the conversational AI market will reach nearly $14 billion by 2025. As enterprises recognize voice as the primary interface for AI interaction, those who master the underlying technology stack will build the applications that define the next decade of human-computer interaction.

AssemblyAI's Speech AI models provide the reliable foundation voice agents require. Voice AI agents represent how we'll interact with AI systems moving forward.

The future is conversational. Make sure you're ready to be part of it. Try our API for free to start building your Voice AI agent today.

Frequently asked questions about the Voice AI stack

A Voice AI stack is the modular set of technologies you assemble to build voice applications, while conversational AI platforms are all-in-one solutions that bundle these components for faster deployment.

How do I measure the latency of my voice AI agent?

Log timestamps at each stage: when the user stops speaking, when the STT transcript is final, when the LLM returns its first token, and when the TTS returns its first audio byte. This identifies which part of your stack creates the biggest bottleneck.

Can I mix and match providers for each component of the stack?

Yes, a modular approach lets you select the optimal provider for each component, giving you competitive advantages in quality and performance.

When should I consider building a component in-house vs. using an API?

Building foundational AI models requires dedicated research teams, so most companies benefit from leveraging production-ready APIs to focus resources on their core product.

Title goes here

Lorem ipsum dolor sit amet, consectetur adipiscing elit, sed do eiusmod tempor incididunt ut labore et dolore magna aliqua. Ut enim ad minim veniam, quis nostrud exercitation ullamco laboris nisi ut aliquip ex ea commodo consequat. Duis aute irure dolor in reprehenderit in voluptate velit esse cillum dolore eu fugiat nulla pariatur.

.png)