Real-time transcription in Python with Universal-Streaming

Learn how to build real-time voice applications with AssemblyAI's Universal-Streaming model.

Real-time transcription in Python converts speech to text as it happens, which opens up possibilities for voice agents, live captioning, conversational analytics, and voice-controlled systems. This tutorial shows you how to implement real-time transcription using AssemblyAI's Universal-Streaming model, which delivers immutable transcripts with ~300ms latency.

We'll walk through the complete implementation process—from setting up your development environment to handling advanced configurations and production deployment. You'll learn how to transcribe audio from your microphone in real-time, optimize for low latency, and build resilient applications that can handle network interruptions and scale to production workloads.

What is real-time transcription and when to use it

Real-time transcription converts live audio streams to text with sub-second latency using WebSocket connections and streaming APIs. Unlike batch processing that requires complete audio files, real-time systems process audio chunks as they're captured.

Choose real-time transcription when your application depends on low-latency feedback. For example, voice agents need to understand and respond to a user immediately to maintain a natural conversational flow. Other common applications include live captioning for events, real-time meeting notes, and interactive voice response (IVR) systems.

Getting started

For this tutorial, we'll be using AssemblyAI's Universal-Streaming model, which delivers immutable transcripts with ~300ms latency and intelligent endpointing designed specifically for voice agents.

You'll need an AssemblyAI API key. If you don't already have one, you can get one for free.

Universal-Streaming uses session-based pricing at $0.15/hour. Free accounts are limited to 5 new sessions per minute. Paid accounts start at a default of 100 new sessions per minute and feature unlimited, automatically scaling concurrency.
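To get a rough feel for what the rate above means in practice, here is a small sketch of a cost estimate. It assumes billing is simply linear in session duration, which is an assumption for illustration; the function name is hypothetical and your actual invoice may be calculated differently.

```python
# Illustrative cost estimate. Assumption: cost scales linearly with
# session duration at the hourly rate quoted in this tutorial.
PRICE_PER_HOUR_USD = 0.15


def estimate_streaming_cost(session_seconds: float, sessions: int = 1) -> float:
    """Return the estimated cost in USD for `sessions` sessions of equal length."""
    if session_seconds < 0 or sessions < 0:
        raise ValueError("session_seconds and sessions must be non-negative")
    total_hours = session_seconds * sessions / 3600
    return round(total_hours * PRICE_PER_HOUR_USD, 4)


# Example: two hundred ten-minute calls.
print(estimate_streaming_cost(600, sessions=200))
```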

Setting up your development environment

Set up an isolated Python environment to avoid dependency conflicts:

Create project structure:

mkdir universal-streaming-demo && cd universal-streaming-demo

python -m venv venv

Next, activate the virtual environment.

On MacOS/Linux:

source ./venv/bin/activate

On Windows:

.\venv\Scripts\activate.bat

Install system dependencies:

- Debian/Ubuntu: apt install portaudio19-dev

- MacOS: brew install portaudio

- Other systems: See portaudio documentation

Install Python packages:

pip install assemblyai pyaudio python-dotenv

Configure API authentication by creating a .env file in the project root that contains your API key:

ASSEMBLYAI_API_KEY=your-api-key-here

To prevent accidentally committing your key to source control, create a .gitignore file and add .env and venv/ to it.
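A missing or placeholder key usually only shows up later as an authorization error, so it can help to fail fast at startup. The following is a minimal sketch of such a check; the helper name require_api_key is hypothetical and not part of the AssemblyAI SDK.

```python
import os
from typing import Mapping


def require_api_key(env: Mapping[str, str] = os.environ,
                    name: str = "ASSEMBLYAI_API_KEY") -> str:
    """Return the API key from `env`, or raise if it is missing or a placeholder."""
    key = env.get(name, "").strip()
    # "your-api-key-here" is the placeholder value used in this tutorial's .env example.
    if not key or key == "your-api-key-here":
        raise RuntimeError(f"{name} is not set; add it to your .env file")
    return key
```

After calling load_dotenv(), you could use api_key = require_api_key() in place of a bare os.getenv call.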

Choosing the right Python approach for real-time transcription

Python developers have several real-time transcription approaches:

- Basic libraries: speech_recognition with Google/Azure APIs - limited accuracy, no streaming optimization

- Open-source models: Whisper, Wav2Vec2 - requires GPU infrastructure and audio preprocessing

- Managed APIs: AssemblyAI Universal-Streaming - WebSocket-based, ~300ms latency, immutable transcripts

According to an AI insights report, using a managed API allows businesses to focus on innovation without the overhead of AI maintenance. Universal-Streaming eliminates this infrastructure complexity while providing production-grade accuracy and intelligent endpointing for voice agents.

How to perform real-time transcription with Universal-Streaming

Universal-Streaming uses WebSocket connections to deliver transcripts with very low latency. Unlike traditional streaming models that provide partial and final transcripts, Universal-Streaming emits text that won't change afterward, so it is immediately ready for downstream processing in voice agents.

Understanding Universal-Streaming responses

Universal-Streaming uses Turn objects for immutable transcriptions. Each Turn represents a single speaking turn with these properties:

- turn_order: Integer that increments with each new turn.

- transcript: String containing only finalized words from the current turn.

- utterance: String containing the complete utterance text, including words that are not yet final. This field is crucial for voice agents as it enables pre-emptive generation before the turn officially ends.

- words: An array of word objects, each with its own text, start time, end time, and confidence score. This allows for word-level analysis.

- end_of_turn: Boolean indicating if this is the end of the current speaking turn.

- turn_is_formatted: Boolean indicating if the text in the transcript field includes punctuation and formatting.

- end_of_turn_confidence: Float (0-1) representing the model's confidence that the turn has naturally concluded.
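To make the shape of a Turn concrete, here is a small sketch that models the properties listed above as a plain dataclass and parses one from JSON. This is an illustrative stand-in, not the SDK's own TurnEvent class, and the word keys used here (text, start, end, confidence) are assumed names based on the description above.

```python
import json
from dataclasses import dataclass, field
from typing import List


@dataclass
class Turn:
    """Illustrative stand-in for a Turn message (not the SDK's own class)."""
    turn_order: int
    transcript: str
    end_of_turn: bool
    turn_is_formatted: bool
    end_of_turn_confidence: float
    utterance: str = ""
    words: List[dict] = field(default_factory=list)


def parse_turn(raw: str) -> Turn:
    """Build a Turn from a JSON string, ignoring keys this sketch does not model."""
    data = json.loads(raw)
    known = {name: data[name] for name in Turn.__dataclass_fields__ if name in data}
    return Turn(**known)


def low_confidence_words(turn: Turn, threshold: float = 0.5) -> List[str]:
    """Return the text of words whose confidence falls below `threshold`."""
    return [w["text"] for w in turn.words if w.get("confidence", 1.0) < threshold]


# Hypothetical payload, hand-written for this example.
sample = json.dumps({
    "turn_order": 0,
    "transcript": "hello wrld",
    "end_of_turn": False,
    "turn_is_formatted": False,
    "end_of_turn_confidence": 0.12,
    "words": [
        {"text": "hello", "start": 0, "end": 400, "confidence": 0.98},
        {"text": "wrld", "start": 420, "end": 800, "confidence": 0.31},
    ],
})
turn = parse_turn(sample)
print(turn.turn_order, low_confidence_words(turn))
```

Word-level confidence like this is one way to flag uncertain words for review before passing text downstream.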

Event handlers

We need to define event handlers for different types of events during the streaming session.

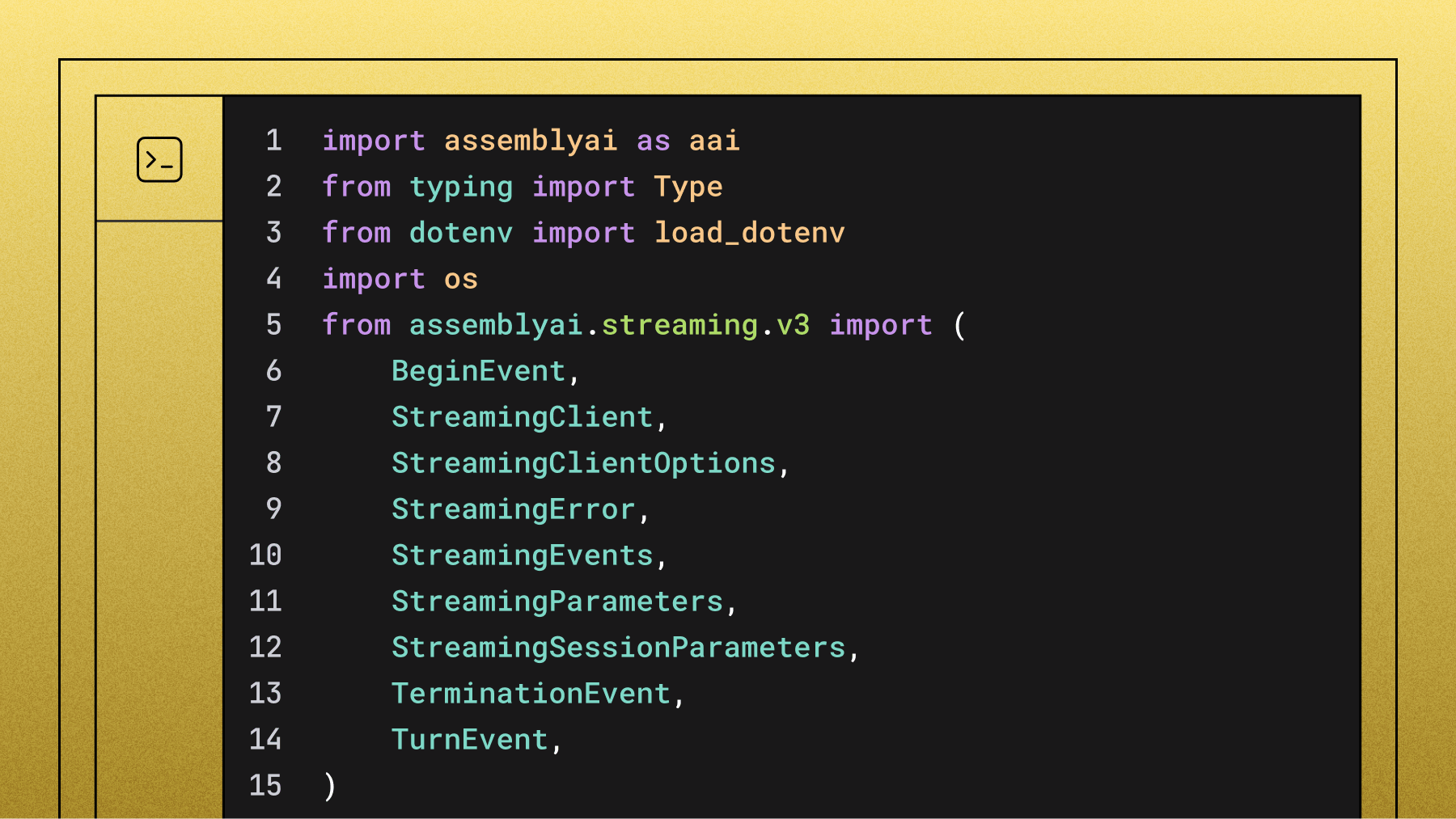

Create a file called main.py and add the following imports and event handlers:

import assemblyai as aai
from typing import Type
from dotenv import load_dotenv
import os

from assemblyai.streaming.v3 import (
    BeginEvent,
    StreamingClient,
    StreamingClientOptions,
    StreamingError,
    StreamingEvents,
    StreamingParameters,
    StreamingSessionParameters,
    TerminationEvent,
    TurnEvent,
)

load_dotenv()
api_key = os.getenv('ASSEMBLYAI_API_KEY')

def on_begin(self: Type[StreamingClient], event: BeginEvent):
    print(f"Session started: {event.id}")

def on_turn(self: Type[StreamingClient], event: TurnEvent):
    print(f"{event.transcript} ({event.end_of_turn})")

    if event.end_of_turn and not event.turn_is_formatted:
        params = StreamingSessionParameters(
            format_turns=True,
        )
        self.set_params(params)

def on_terminated(self: Type[StreamingClient], event: TerminationEvent):
    print(
        f"Session terminated: {event.audio_duration_seconds} seconds of audio processed"
    )

def on_error(self: Type[StreamingClient], error: StreamingError):
    print(f"Error occurred: {error}")
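Printing every event is fine for a demo, but most applications want one clean transcript per turn. The sketch below shows one way to collect that, assuming (as the on_turn handler's check of end_of_turn and turn_is_formatted suggests) that a completed turn can arrive first unformatted and then again formatted. TranscriptCollector is a hypothetical helper, not part of the SDK, and it takes plain values so it can be tested without a live session.

```python
from typing import Dict


class TranscriptCollector:
    """Keeps one final transcript per turn, preferring the formatted version."""

    def __init__(self) -> None:
        self._turns: Dict[int, str] = {}
        self._formatted: Dict[int, bool] = {}

    def add(self, turn_order: int, transcript: str,
            end_of_turn: bool, turn_is_formatted: bool) -> None:
        # Ignore in-progress updates; only completed turns are stored.
        if not end_of_turn:
            return
        # Never let an unformatted copy overwrite a formatted one.
        if self._formatted.get(turn_order) and not turn_is_formatted:
            return
        self._turns[turn_order] = transcript
        self._formatted[turn_order] = turn_is_formatted

    def text(self) -> str:
        """Join the stored turns in speaking order."""
        return " ".join(self._turns[k] for k in sorted(self._turns))


collector = TranscriptCollector()
collector.add(0, "hello world", end_of_turn=False, turn_is_formatted=False)
collector.add(0, "hello world", end_of_turn=True, turn_is_formatted=False)
collector.add(0, "Hello world.", end_of_turn=True, turn_is_formatted=True)
collector.add(1, "how are you", end_of_turn=True, turn_is_formatted=False)
print(collector.text())
```

Inside on_turn you could call collector.add(event.turn_order, event.transcript, event.end_of_turn, event.turn_is_formatted).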

Create and run the streaming client

Now add the main script code to create and run the Universal-Streaming client:

def main():
    client = StreamingClient(
        StreamingClientOptions(
            api_key=api_key,
            api_host="streaming.assemblyai.com"
        )
    )

    client.on(StreamingEvents.Begin, on_begin)
    client.on(StreamingEvents.Turn, on_turn)
    client.on(StreamingEvents.Termination, on_terminated)
    client.on(StreamingEvents.Error, on_error)

    client.connect(
        StreamingParameters(
            sample_rate=16000,
            format_turns=True,
        )
    )

    try:
        client.stream(
            aai.extras.MicrophoneStream(sample_rate=16000)
        )
    finally:
        client.disconnect(terminate=True)

if __name__ == "__main__":
    main()

Running the script

With your virtual environment activated, run the script:

python main.py

You'll see your session ID printed when the connection starts. Expected behavior:

- Immutable transcripts appear in real-time as you speak

- Final transcripts include punctuation and formatting after speech ends

- Press Ctrl+C to terminate the session

Complete example

Here's the complete working example:

import assemblyai as aai
from typing import Type
from dotenv import load_dotenv
import os

from assemblyai.streaming.v3 import (
    BeginEvent,
    StreamingClient,
    StreamingClientOptions,
    StreamingError,
    StreamingEvents,
    StreamingParameters,
    StreamingSessionParameters,
    TerminationEvent,
    TurnEvent,
)

load_dotenv()
api_key = os.getenv('ASSEMBLYAI_API_KEY')

def on_begin(self: Type[StreamingClient], event: BeginEvent):
    print(f"Session started: {event.id}")

def on_turn(self: Type[StreamingClient], event: TurnEvent):
    print(f"{event.transcript} ({event.end_of_turn})")

    if event.end_of_turn and not event.turn_is_formatted:
        params = StreamingSessionParameters(
            format_turns=True,
        )
        self.set_params(params)

def on_terminated(self: Type[StreamingClient], event: TerminationEvent):
    print(
        f"Session terminated: {event.audio_duration_seconds} seconds of audio processed"
    )

def on_error(self: Type[StreamingClient], error: StreamingError):
    print(f"Error occurred: {error}")

def main():
    client = StreamingClient(
        StreamingClientOptions(
            api_key=api_key,
            api_host="streaming.assemblyai.com"
        )
    )

    client.on(StreamingEvents.Begin, on_begin)
    client.on(StreamingEvents.Turn, on_turn)
    client.on(StreamingEvents.Termination, on_terminated)
    client.on(StreamingEvents.Error, on_error)

    client.connect(
        StreamingParameters(
            sample_rate=16000,
            format_turns=True,
        )
    )

    try:
        client.stream(
            aai.extras.MicrophoneStream(sample_rate=16000)
        )
    finally:
        client.disconnect(terminate=True)

if __name__ == "__main__":
    main()

Advanced configuration options

Universal-Streaming offers several configuration options to optimize for your specific use case:

Intelligent endpointing

Configure end-of-turn detection to handle natural conversation flows:

client.connect(
    StreamingParameters(
        sample_rate=16000,
        end_of_turn_confidence_threshold=0.8,
        min_end_of_turn_silence_when_confident=500,  # milliseconds
        max_turn_silence=2000,  # milliseconds
    )
)
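One way to reason about how these three settings interact is the simplified decision rule sketched below. This is a mental model for tuning, written under the assumption that a turn ends either after a short silence when the model is confident or after a longer silence regardless of confidence. It is not the service's actual endpointing algorithm, and the function name is hypothetical.

```python
def is_end_of_turn(confidence: float, silence_ms: int,
                   confidence_threshold: float = 0.8,
                   min_silence_when_confident_ms: int = 500,
                   max_turn_silence_ms: int = 2000) -> bool:
    """Simplified end-of-turn rule (illustrative only)."""
    confident = confidence >= confidence_threshold
    # Confident path: a short pause is enough to close the turn.
    if confident and silence_ms >= min_silence_when_confident_ms:
        return True
    # Fallback path: a long pause closes the turn even without confidence.
    return silence_ms >= max_turn_silence_ms


print(is_end_of_turn(confidence=0.9, silence_ms=600))
print(is_end_of_turn(confidence=0.3, silence_ms=1500))
```

Under this model, lowering the confidence threshold or the minimum silence makes an agent respond sooner at the risk of cutting speakers off, while raising max_turn_silence tolerates longer mid-sentence pauses.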

Text formatting control

Control whether you receive formatted transcripts:

client.connect(
    StreamingParameters(
        sample_rate=16000,
        format_turns=True
    )
)

Authentication tokens

For client-side applications, use temporary authentication tokens to avoid exposing your API key. This is a critical security measure, as a recent market survey found that over 30% of respondents consider data privacy and security a significant challenge when building with speech recognition. First, on the server-side, use your API key to generate the temporary token:

# Generate a temporary token (do this on your server)
client = StreamingClient(
    StreamingClientOptions(
        api_key=api_key,
        api_host="streaming.assemblyai.com"
    )
)

token = client.create_temporary_token(expires_in_seconds=60, max_session_duration_seconds=3600)

Then on the client-side, initialize the StreamingClient with the token parameter instead of the API key:

client = StreamingClient(
    StreamingClientOptions(
        token=token,
        api_host="streaming.assemblyai.com"
    )
)

Performance optimization techniques

Optimize Universal-Streaming performance with these configurations:

- Minimal latency: Set format_turns=False for unformatted text (~50ms faster)

- Audio quality: Use 16kHz sample rate for optimal accuracy/performance balance, as technical guides show that higher rates can increase bandwidth without improving transcription quality.

- Format control: Request formatted transcripts only after turn completion

- Sample rate matching: Ensure audio source matches StreamingParameters configuration
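The bandwidth point in the list above is easy to check with a little arithmetic. The sketch below assumes 16-bit mono PCM audio (2 bytes per sample, one channel); that is an assumption for this example, so adjust the constants if your source uses a different encoding.

```python
BYTES_PER_SAMPLE = 2  # assumption: 16-bit PCM
CHANNELS = 1          # assumption: mono audio


def bytes_per_second(sample_rate: int) -> int:
    """Raw audio bandwidth for uncompressed PCM at the given sample rate."""
    return sample_rate * BYTES_PER_SAMPLE * CHANNELS


def chunk_duration_ms(chunk_bytes: int, sample_rate: int) -> float:
    """How many milliseconds of audio one chunk of `chunk_bytes` holds."""
    return chunk_bytes * 1000 / bytes_per_second(sample_rate)


print(bytes_per_second(16000))         # bandwidth at 16 kHz
print(bytes_per_second(48000))         # bandwidth at 48 kHz
print(chunk_duration_ms(4096, 16000))  # audio held by one 4096-byte chunk
```

At 48kHz the stream carries three times the bytes of a 16kHz stream, which is the extra bandwidth the list refers to.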

Custom audio sources and streaming

Stream audio from custom sources by replacing aai.extras.MicrophoneStream:

- File streaming: Read audio files in 4096-byte chunks

- Network streams: Process RTP/WebRTC audio streams

- VoIP integration: Connect to existing telephony systems

Create a custom streamer function that yields audio chunks to client.stream().
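As a sketch of the file-streaming case, the generator below reads a WAV file with Python's standard wave module and yields raw PCM chunks of roughly 4096 bytes. The function name stream_wav_file and the realtime pacing flag are choices made for this example. Pacing is included because sending audio faster than real-time can cause problems with the session.

```python
import time
import wave
from typing import Iterator


def stream_wav_file(path: str, chunk_bytes: int = 4096,
                    realtime: bool = True) -> Iterator[bytes]:
    """Yield raw PCM chunks from a WAV file, optionally paced at playback speed."""
    with wave.open(path, "rb") as wav:
        bytes_per_frame = wav.getsampwidth() * wav.getnchannels()
        frames_per_chunk = max(1, chunk_bytes // bytes_per_frame)
        frame_rate = wav.getframerate()
        while True:
            chunk = wav.readframes(frames_per_chunk)
            if not chunk:
                break
            yield chunk
            if realtime:
                # Sleep for as long as the chunk would take to play back.
                time.sleep(len(chunk) / bytes_per_frame / frame_rate)
```

You could then pass the generator to the client in place of the microphone, for example client.stream(stream_wav_file("audio.wav")), provided the file's sample rate matches the sample_rate given in StreamingParameters.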

Troubleshooting common implementation issues

When working with real-time audio streaming, you might encounter a few common issues. Here's how to handle them.

- WebSocket Connection Errors: The SDK raises a StreamingError with specific codes. Common codes include:

  - 1008: Unauthorized connection. This can be due to an invalid API key, insufficient account balance, or exceeding your concurrency limits.

  - 3005: Session expired. This can happen if the maximum session duration is exceeded or if audio is sent faster than real-time.

- Incorrect Audio Format: The Universal-Streaming model expects a specific audio format and sample rate. Ensure you are streaming audio with a sample rate of at least 16000Hz. Mismatched sample rates can lead to poor transcription accuracy.

- Handling Network Interruptions: Network instability can disrupt the audio stream. Your application should include logic to catch connection errors and attempt to reconnect. As noted in production system guidance, network connections can be unreliable, so for production systems, building a resilient reconnection strategy is a good practice.
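A common way to build the reconnection logic mentioned above is exponential backoff with jitter. The sketch below is generic: run_with_reconnect and its parameters are hypothetical names, run_session stands for whatever function connects and streams in your application, and which errors count as retryable is left to you (an unauthorized connection, for instance, is unlikely to succeed on retry).

```python
import random
import time
from typing import Callable


def run_with_reconnect(run_session: Callable[[], None],
                       max_attempts: int = 5,
                       base_delay: float = 0.5,
                       max_delay: float = 30.0,
                       is_retryable: Callable[[Exception], bool] = lambda exc: True,
                       sleep: Callable[[float], None] = time.sleep) -> int:
    """Call run_session until it returns normally; return the attempts used."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    attempt = 0
    while True:
        attempt += 1
        try:
            run_session()
            return attempt
        except Exception as exc:
            # Give up on the last attempt or on errors a retry cannot fix.
            if attempt >= max_attempts or not is_retryable(exc):
                raise
            # Double the wait each time, capped at max_delay, plus random jitter
            # so many clients do not reconnect in lockstep.
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(delay + random.uniform(0, delay / 2))
```

In the tutorial's script, run_session could wrap the connect, stream, and disconnect calls from main(), creating a fresh client on each attempt.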

Production deployment considerations

Production deployment requires these technical considerations:

- Security: Use temporary tokens for client-side apps, never expose API keys

- Error handling: Implement StreamingError exception handling with reconnection logic

- Scalability: Leverage unlimited concurrency and session-based pricing

- Monitoring: Track connection health using SDK event handlers

Best practices for Universal-Streaming

To get the best results from Universal-Streaming:

- Audio Configuration: Use ≥16kHz sample rates for optimal accuracy

- Connection Management: Maintain persistent WebSocket connections to minimize latency

- Processing Optimization: Use format_turns=False for faster voice agent processing

- Error Handling: Implement StreamingError exception handling with reconnection logic

- Security: Generate temporary tokens server-side for client applications
