On Windows, activate the virtual environment by running the activate.bat script in the environment's Scripts directory.

Now, install the AssemblyAI Python SDK by running pip install assemblyai.

Plain text summarization is straightforward, but conversational audio and video data is more complex. By passing a transcript to the LLM Gateway, along with details such as speaker labels, you can generate context-aware summaries.

The following sections show how to summarize video and audio files with Python.


Transcription

First, we'll transcribe the podcast episode, which takes only three lines of code. Create a file called autosummarize.py and paste the following code into it:

```python
import assemblyai as aai

transcriber = aai.Transcriber()
transcript = transcriber.transcribe("https://storage.googleapis.com/aai-web-samples/lex_guido.webm")
```

We import the assemblyai package, instantiate a Transcriber object, and call its transcribe method to generate a Transcript object for the video.

Tip

Each transcript you create with AssemblyAI is assigned a unique ID, which you can access through the id attribute of a Transcript object (transcript.id in our case). If you want to analyze the same file further with LLM Gateway later, you can fetch the transcript by its ID, for example with aai.Transcript.get_by_id, instead of transcribing the file again.
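If you rerun the script while experimenting, a small local cache of transcript IDs prevents the same file from being transcribed twice. The following is a minimal sketch of our own, not part of the SDK. The function name get_transcript_id and the transcript_ids.json file name are hypothetical, and transcribe stands for any function that returns a transcript ID.

```python
import json
from pathlib import Path
from typing import Callable


def get_transcript_id(
    source: str,
    transcribe: Callable[[str], str],
    cache_file: str = "transcript_ids.json",  # hypothetical cache location
) -> str:
    """Return the cached transcript ID for `source`, calling `transcribe` only on a cache miss."""
    path = Path(cache_file)
    cache = json.loads(path.read_text()) if path.exists() else {}
    if source not in cache:
        cache[source] = transcribe(source)  # expected to return a transcript ID string
        path.write_text(json.dumps(cache, indent=2))
    return cache[source]


# Usage with the SDK (requires an API key and network access):
# import assemblyai as aai
# transcript_id = get_transcript_id(url, lambda u: aai.Transcriber().transcribe(u).id)
# transcript = aai.Transcript.get_by_id(transcript_id)
```

A plain JSON file is enough for a single-user script. A multi-user application would more likely store the IDs in a database.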

Next, we'll add a check that raises an exception if the transcription failed. Add the following lines to the bottom of autosummarize.py:

```python
if transcript.status == aai.TranscriptStatus.error:
    raise Exception(f'Transcription error: {transcript.error}')
```

Automatic summarization

Now that we have our transcript object, we can use AssemblyAI's LLM Gateway to generate a summary. The LLM Gateway provides access to various Large Language Models (LLMs) that can process the transcribed text.

To do this, we'll construct a prompt that tells the LLM what to do. The prompt will include our instructions, the desired format, and the full text of the transcript. We'll send this prompt to the LLM Gateway's chat completions endpoint with the requests library (install it with pip install requests if needed) and read the API key with the os module, so import both at the top of your autosummarize.py file:

```python
import requests
import os
```

Next, we define our prompt. We'll instruct the model to create a summary with specific markdown formatting, and we'll provide context about the podcast episode. The full transcript text will be appended to this prompt.

```python
prompt = f"""
You are an expert at summarizing podcast episodes.
Provide a summary of the following transcript.
The transcript is from an episode of the Lex Fridman podcast, where he speaks with Guido van Rossum, the creator of the Python programming language.

Format the summary with markdown, using the following structure for each topic:

**<topic header>**

<topic summary>

Transcript:

{transcript.text}
"""
```
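The prompt above embeds transcript.text, which contains no speaker labels. To give the model speaker context, one option is to enable speaker diarization with aai.TranscriptionConfig(speaker_labels=True), passed as config= to transcribe, and then format transcript.utterances into labeled lines. The helper below is a hypothetical sketch. It assumes each utterance exposes speaker, text, and a start time in milliseconds, and it uses stand-in objects so it runs without an API call.

```python
from types import SimpleNamespace


def format_utterances(utterances) -> str:
    """Render utterances as '[mm:ss] Speaker X: text' lines for an LLM prompt."""
    lines = []
    for u in utterances:
        # Utterance start times are assumed to be in milliseconds.
        minutes, seconds = divmod(int(u.start) // 1000, 60)
        lines.append(f"[{minutes:02d}:{seconds:02d}] Speaker {u.speaker}: {u.text}")
    return "\n".join(lines)


# Stand-in objects for illustration; with the SDK you would pass transcript.utterances.
sample = [
    SimpleNamespace(speaker="A", start=0, text="Welcome to the show."),
    SimpleNamespace(speaker="B", start=65000, text="Thanks for having me."),
]
print(format_utterances(sample))
```

You could then use {format_utterances(transcript.utterances)} in the prompt in place of {transcript.text}, which lets the model attribute statements to speakers.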

We are now ready to perform the summarization. We will make a POST request to the LLM Gateway, sending our prompt in the payload. We also need to specify which model to use; in this case, we'll use a Claude model. Add the following lines to autosummarize.py:

```python
# Note: The API key is retrieved from the environment variable or aai.settings.
# Ensure it's available for the request.
api_key = os.getenv("ASSEMBLYAI_API_KEY") or aai.settings.api_key

response = requests.post(
    "https://llm-gateway.assemblyai.com/v1/chat/completions",
    headers={"authorization": api_key},
    json={
        "model": "claude-3-5-haiku-20241022",
        "messages": [
            {"role": "user", "content": prompt}
        ],
    },
)
# Raise on HTTP errors instead of failing later with a confusing KeyError.
response.raise_for_status()

result = response.json()
print(result['choices'][0]['message']['content'])
```
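If you plan to send several prompts (for example, one per transcript chunk or one per file), it helps to wrap the request in a function. This is a sketch under our own assumptions. The helper names are hypothetical, the 120-second timeout is an arbitrary choice, and the request and response shapes simply mirror the code above.

```python
import os
from typing import Optional

LLM_GATEWAY_URL = "https://llm-gateway.assemblyai.com/v1/chat/completions"
DEFAULT_MODEL = "claude-3-5-haiku-20241022"


def build_chat_request(prompt: str, model: str = DEFAULT_MODEL) -> dict:
    """Build the JSON body for a single-turn chat completion request."""
    return {"model": model, "messages": [{"role": "user", "content": prompt}]}


def extract_message(result: dict) -> str:
    """Pull the generated text out of a chat completions response."""
    return result["choices"][0]["message"]["content"]


def ask_llm(
    prompt: str,
    api_key: Optional[str] = None,
    model: str = DEFAULT_MODEL,
    timeout: float = 120,
) -> str:
    """Send one prompt to the LLM Gateway and return the model's reply."""
    import requests  # imported here so the helpers above work without requests installed

    api_key = api_key or os.getenv("ASSEMBLYAI_API_KEY")
    response = requests.post(
        LLM_GATEWAY_URL,
        headers={"authorization": api_key},
        json=build_chat_request(prompt, model),
        timeout=timeout,
    )
    response.raise_for_status()  # raise on HTTP errors rather than a KeyError below
    return extract_message(response.json())
```

With this in place, the summarization step becomes print(ask_llm(prompt)). Splitting payload construction from the network call also lets you unit-test the payload without hitting the API.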


Running the script

To run the script, go to the terminal where you set the ASSEMBLYAI_API_KEY environment variable and activated the virtual environment, and enter the following command:

```bash
python autosummarize.py  # you may have to use python3
```

After a minute or two, you will see the summary printed to the terminal:

**Python's design choices**

Guido discusses the rationale behind Python's indentation style over curly braces, which reduces clutter and is simpler for beginners. However, most programmers are familiar with curly braces from other languages. The dollar sign used before variables in PHP originated in early Unix shells to distinguish variables from program names and file names. Choosing different programming languages involves difficult trade-offs.

**Improving CPython's performance**

Guido initially coded CPython simply and efficiently, but over time more optimized algorithms were developed to improve performance. The example of prime number checking illustrates the time-space tradeoff in algorithms.

**The history of asynchronous I/O in Python**

In the late 1990s and early 2000s, the Python standard library included modules for asynchronous I/O and networking. However, over time these modules became outdated. Around 2012 to 2014, developers proposed updating these modules, but were told to use third party libraries instead. Guido proposed updating asynchronous I/O in the standard library. He worked with developers of third party libraries on the design. The new asynchronous I/O module was added to the standard library and has been successful, particularly for Python web clients.

**Python for machine learning and data science**

In the early 1990s, scientists used Fortran and C++ libraries to solve mathematical problems. Paul Dubois saw that a higher level language was needed to tie algorithms together. In the mid 1990s, Python libraries emerged to support large arrays efficiently. Scientists at different institutions discovered Python had the infrastructure they needed. Exchanging code in the same language is preferable to starting from scratch in another language. This is how Python became dominant for machine learning and data science.

**The global interpreter lock (GIL)**

The GIL allowed for multi-threading even on single core CPUs. As multi-core CPUs became common, the GIL became an issue. Removing the GIL could be an option for Python 4.0, though it would require recompiling extension modules and supporting third party packages.

**Guido's experience as BDFL**

While providing clarity and direction for the community, the BDFL role also caused Guido personal stress. He feels he relinquished the role too late, but the new steering council structure has led the community steadily.

**The future of Python and programming**

Python will become a legacy language built upon without needing details. Abstractions are built upon each other at different levels.

Automatic summarization of audio files with Python

The AssemblyAI Python SDK also accepts audio files; the AssemblyAI documentation lists all supported formats. To automatically summarize an audio file, reuse the code above, pass in an audio file instead, and adjust the prompt to describe the recording:

```python
import assemblyai as aai
import requests
import os

# Ensure your API key is set, e.g.:
# aai.settings.api_key = "YOUR_API_KEY"

transcriber = aai.Transcriber()
transcript = transcriber.transcribe("https://storage.googleapis.com/aai-web-samples/meeting.mp3")

if transcript.status == aai.TranscriptStatus.error:
    raise Exception(f'Transcription error: {transcript.error}')

prompt = f"""
You are an expert at summarizing meetings.
Provide a summary of the following transcript, which is from a GitLab meeting to discuss logistics.

Format the summary with markdown, using the following structure for each topic:

**<topic header>**

<topic summary>

Transcript:

{transcript.text}
"""

api_key = os.getenv("ASSEMBLYAI_API_KEY") or aai.settings.api_key

response = requests.post(
    "https://llm-gateway.assemblyai.com/v1/chat/completions",
    headers={"authorization": api_key},
    json={
        "model": "claude-3-5-haiku-20241022",
        "messages": [
            {"role": "user", "content": prompt}
        ],
    },
)
# Raise on HTTP errors instead of failing later with a confusing KeyError.
response.raise_for_status()

result = response.json()
print(result['choices'][0]['message']['content'])
```

Output

Engineering Key Review

The meeting begins with a proposal to break up the engineering key review meeting into four departmental key reviews to allow for more in-depth discussion. A two-month rotation is suggested so as not to add too many new meetings. The proposal is supported.

R&D Merge Request Rates

There is discussion around the R&D overall and wider merge request rates. It is clarified that the wider rate includes community contributions while the overall rate includes internal and external requests. There is agreement to track the percentage of total requests from the community over time instead.

Postgres Replication Issue

There is an update on work to address lag in Postgres replication for the data engineering team. Actions include dedicating a host for the data team, database tuning, and improving demand on the database. More work is needed to determine the best solutions. An update will be provided at the next infrastructure key review.

Defect Tracking and SLOs

There is an update on work to track defects against service level objectives (SLOs). Iterations are being made to measure the average age of open bugs and the percentage of open bugs within SLO. More discussion is needed on the best approach.

Key Metrics

The team discusses key metrics. The decline in NPS has slowed, though more data is needed to determine if it is an actual trend. The narrow merge request rate is below target, though it is higher than the same time last year. The rate is expected to rebound in March. The target rate has been adjusted to 10 going forward to focus on other metrics like quality and security.

Closing

The meeting ends with a request for any other discussion. Hearing none, the meeting adjourns.


Evaluating summary quality

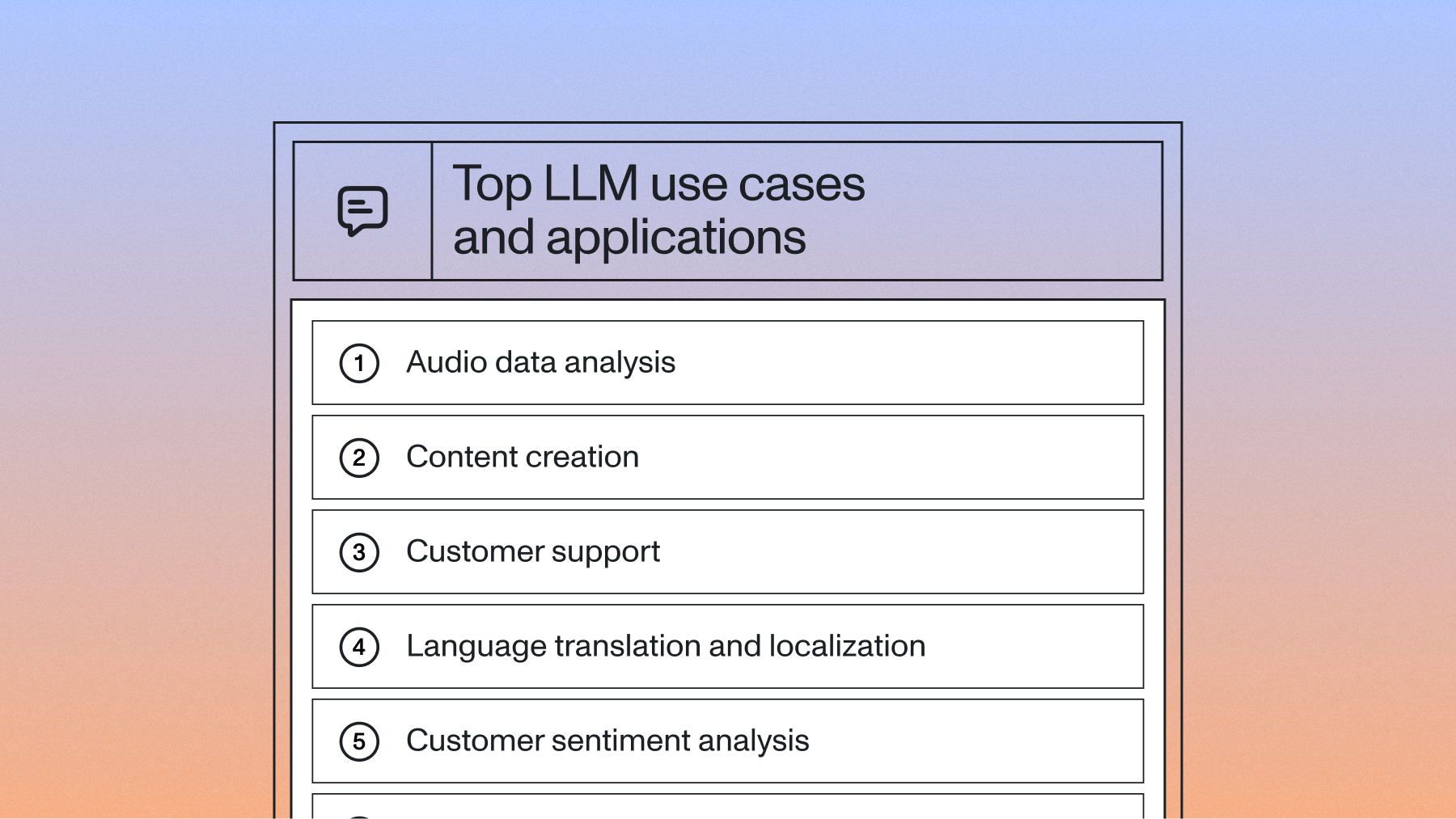

Traditional metrics like ROUGE or BLEU measure word overlap but miss semantic meaning.

Evaluation approaches:

| Approach | Method | Characteristics |
|---|---|---|
| Traditional | ROUGE/BLEU scores | Fast but superficial |
| LLM-based | AI evaluating AI output | Deeper, contextual analysis |
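To see why overlap metrics miss meaning, here is a minimal ROUGE-1 (unigram overlap) sketch. It uses plain whitespace tokenization and no stemming, so it is a simplification of the standard metric rather than a drop-in replacement for an evaluation library.

```python
from collections import Counter


def rouge1(candidate: str, reference: str) -> dict:
    """Unigram-overlap precision, recall, and F1 between a candidate and a reference summary."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # shared words, clipped to the smaller count
    precision = overlap / max(sum(cand.values()), 1)
    recall = overlap / max(sum(ref.values()), 1)
    f1 = 0.0 if overlap == 0 else 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "f1": f1}


# A paraphrase with the same meaning shares only the word "the", so F1 is about 0.33.
print(rouge1("the meeting adjourned", "the session ended"))
```

The paraphrase scores poorly even though it is semantically equivalent. An LLM-based evaluation is designed to catch exactly this kind of case.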

LLM evaluation checks:

- Does the summary contain information not in the original?

- Are key points accurately represented?

- Are critical points missing?

This approach helps catch factual errors and check contextual relevance that word-overlap metrics cannot measure.
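The three checks above can be turned into an evaluation prompt and sent to the LLM Gateway with the same request code used for summarization. The wording and the yes/no answer format below are our own assumptions, not a prescribed AssemblyAI template.

```python
EVAL_CHECKS = [
    "Does the summary contain information not in the original?",
    "Are key points accurately represented?",
    "Are critical points missing?",
]


def build_judge_prompt(transcript_text: str, summary: str) -> str:
    """Build an LLM-as-judge prompt that asks each evaluation check about one summary."""
    checks = "\n".join(f"{i}. {question}" for i, question in enumerate(EVAL_CHECKS, start=1))
    return (
        "You are evaluating a summary of a transcript.\n"
        "Answer each question with yes or no, followed by a one-sentence justification.\n"
        f"{checks}\n\n"
        f"Transcript:\n{transcript_text}\n\n"
        f"Summary:\n{summary}\n"
    )


print(build_judge_prompt("Speaker A: The launch moves to May.", "The launch was cancelled."))
```

Using a different model as the judge from the one that wrote the summary is a common choice, because it reduces the chance that both share the same blind spots.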

Performance optimization and best practices

Building a demo is one thing; building for production is another. Here are a few best practices for building a scalable summarization feature.

Handling long-form content

LLMs have a finite context window, so the full transcript of a 3-hour podcast may not fit into a single request for every model. To handle this, break the transcript into smaller chunks that fit within the model's context limit. You are responsible for this chunking logic when using the LLM Gateway.
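One common approach is to split the text into overlapping chunks, summarize each chunk, and then summarize the partial summaries (often called map-reduce summarization). This sketch uses word counts as a rough stand-in for tokens. The 3,000-word chunk size and 200-word overlap are arbitrary assumptions, so size chunks against your model's actual token limit. The summarize argument is any function that sends one prompt to the LLM Gateway and returns the reply.

```python
from typing import Callable, List


def chunk_text(text: str, max_words: int = 3000, overlap_words: int = 200) -> List[str]:
    """Split text into word-based chunks that overlap by `overlap_words` words."""
    if overlap_words >= max_words:
        raise ValueError("overlap_words must be smaller than max_words")
    words = text.split()
    step = max_words - overlap_words
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


def summarize_long(
    text: str,
    summarize: Callable[[str], str],
    max_words: int = 3000,
    overlap_words: int = 200,
) -> str:
    """Map-reduce summary: summarize each chunk, then summarize the combined partial summaries."""
    chunks = chunk_text(text, max_words, overlap_words)
    if len(chunks) <= 1:
        return summarize(text)
    partial_summaries = [summarize(chunk) for chunk in chunks]
    return summarize("\n\n".join(partial_summaries))
```

The overlap keeps a sentence that straddles a chunk boundary visible in both chunks. For very long inputs, the combined partial summaries can themselves exceed the limit, in which case you would repeat the reduce step.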

Improving speed with asynchronous processing

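Transcription and summarization can take time, so for a better user experience, avoid blocking your application while they run. The SDK's transcribe method waits until the transcript is ready, but the SDK also offers non-blocking options. The transcribe_async method returns a future you can check later, and submit queues a transcription without waiting for it to finish, so you can retrieve the result later (for example, by its ID).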
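For the summarization step, one option is to send several LLM Gateway requests concurrently from a thread pool, for example one per chunk or one per file. This uses Python's standard concurrent.futures module and is our assumption, not an SDK feature. The function name is hypothetical, and max_workers=4 is an arbitrary default that you should keep modest to stay within your rate limits.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List


def summarize_many(
    texts: Iterable[str],
    summarize: Callable[[str], str],
    max_workers: int = 4,
) -> List[str]:
    """Run `summarize` on each text in a thread pool; results keep the input order."""
    # Threads suit this workload because each call mostly waits on network I/O.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(summarize, texts))
```

Here summarize would be a function that wraps the requests.post call shown earlier and returns the reply text. Because pool.map returns results in input order, the summaries line up with the chunks or files you passed in.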

Getting better summaries with custom prompts

The quality of your summary depends heavily on your prompt. When using the LLM Gateway, you build a detailed prompt that includes background information, instructions, and the desired output format. For example, you could request a JSON object with specific keys by providing a JSON structure in your prompt:

```python
# Literal braces in an f-string must be doubled ({{ and }}); single braces would be
# parsed as Python expressions and raise a SyntaxError.
prompt = f"""
Summarize the following transcript. Structure your response as a JSON object with two keys: "main_points" and "action_items".

Transcript:

{transcript.text}

JSON Output:

{{
  "main_points": ["<one string per main point>"],
  "action_items": ["<one string per action item mentioned>"]
}}
"""
```
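Models sometimes wrap JSON in a Markdown code fence or leave out a key, so it is worth validating the reply before using it. The parser below is a sketch with a hypothetical function name. It assumes the reply text comes from result['choices'][0]['message']['content'], as in the earlier examples.

```python
import json
import re


def parse_summary_json(raw: str) -> dict:
    """Parse the model's reply into a dict, tolerating an optional Markdown code fence."""
    # Match three backticks, an optional "json" tag, the body, then three closing backticks.
    match = re.search(r"`{3}(?:json)?\s*(.*?)\s*`{3}", raw, re.DOTALL)
    payload = match.group(1) if match else raw
    data = json.loads(payload)
    if not isinstance(data, dict):
        raise ValueError("Expected a JSON object")
    missing = {"main_points", "action_items"} - data.keys()
    if missing:
        raise ValueError(f"Summary JSON is missing keys: {sorted(missing)}")
    return data
```

If parsing fails, a common fallback is to resend the prompt with an added instruction to respond with the JSON object only.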

Frequently asked questions about text summarization with LLMs

How do I choose the right summarization strategy?

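Use extractive summarization, which selects sentences verbatim from the source, when you need exact key quotes. Use abstractive summarization, which generates new text (for example via the LLM Gateway), when you want natural, context-aware summaries.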
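For comparison, here is a deliberately naive extractive summarizer. It scores each sentence by the average frequency of its words across the whole text and returns the top sentences verbatim, in their original order. This frequency heuristic is our own illustration (no stopword removal, no stemming), not an AssemblyAI feature.

```python
import re
from collections import Counter
from typing import List

WORD = re.compile(r"[a-z']+")


def extractive_summary(text: str, num_sentences: int = 2) -> List[str]:
    """Return the highest-scoring sentences verbatim, preserving their original order."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    freq = Counter(WORD.findall(text.lower()))

    def score(sentence: str) -> float:
        words = WORD.findall(sentence.lower())
        return sum(freq[w] for w in words) / max(len(words), 1)

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:num_sentences])  # restore document order
    return [sentences[i] for i in keep]
```

Every returned sentence appears word for word in the source. That is why extractive output suits quotes, but it cannot rephrase or merge ideas the way an abstractive summary does.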

How do I handle documents that are longer than the LLM's context window?

When using the LLM Gateway, you are responsible for managing the model's context window. For long transcripts, you'll need to implement a chunking strategy to break the text into smaller parts before sending it to the model.

Can I customize the format of the summary?

Yes. With the LLM Gateway, you can specify the exact output structure you need by including formatting instructions in your prompt. You can request anything from a simple paragraph to a complex JSON object, giving you full control over the final output.

What's the difference between summarizing plain text and summarizing transcribed audio?

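Transcripts of audio can include speaker labels (when speaker diarization is enabled) and word-level timestamps. Passing these to the LLM enables context-aware summaries that follow conversational threads and attribute statements to the right speaker.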