Extract phone call insights with LLMs in Python

Learn how to automatically extract insights from customer calls with Large Language Models (LLMs) and Python. By turning raw audio into structured data, Voice AI apps are creating measurable business impact, with some organizations reporting 40-60% productivity improvements in audio-heavy workflows.

In this tutorial, we'll use LLMs to extract call insights in just a few lines of code. Specifically, we'll extract a summary, action items, and contact information from this sample customer call.

The following information will be returned by the LLM:

SUMMARY:

- The caller is interested in getting an estimate for building a house on a property he is looking to purchase in Westchester.

ACTION ITEMS:

- Have someone call the customer back today to discuss building estimate.

- Set up time for builder to assess potential property site prior to purchase.

CONTACT INFORMATION:

Name: Lindstrom, Kenny

Phone number: 610-265-1715

What are Call Center Insights?

Call center insights are structured data extracted from customer phone conversations using speech-to-text and AI analysis. Contact centers use these tools to generate call summaries, action items, sentiment scores, and contact information automatically from audio recordings.

In practice, this means:

- Automatically identifying call reasons and customer sentiment

- Tracking agent script compliance and performance metrics

- Detecting product issues before they escalate

This tutorial shows you how to build the technical foundation using Python and AssemblyAI's speech-to-text API.

Getting Started

To follow along with this tutorial, you'll need to have Python installed and an AssemblyAI API key.

All of the code in this tutorial is available in the project repository on GitHub.

Setting Up Your Environment

First, create a directory for the project and navigate into it. Then, create a virtual environment and activate it:

```bash
# Mac/Linux
python3 -m venv venv
. venv/bin/activate

# Windows
python -m venv venv
.\venv\Scripts\activate.bat
```

Now, install the AssemblyAI Python SDK and the requests library, which we'll use to interact with the LLM Gateway:

```bash
pip install assemblyai requests
```

Finally, set your API key as an environment variable with the following command, where you replace <your_key> with your AssemblyAI API key copied from your dashboard.

```bash
# Mac/Linux
export ASSEMBLYAI_API_KEY=<your_key>

# Windows
set ASSEMBLYAI_API_KEY=<your_key>
```

Transcribing the Call and Extracting Insights

Now we're ready to start writing our application code. We'll first transcribe the phone call and then send the transcript to the LLM Gateway for analysis. Paste the following complete code into a file called main.py.

import assemblyai as aai

import requests

import os

# Get the API key from environment variables

api_key = os.getenv("ASSEMBLYAI_API_KEY")

if not api_key:

raise Exception("ASSEMBLYAI_API_KEY environment variable not set.")

# --- 1. Transcribe the audio file ---

transcriber = aai.Transcriber()

transcript = transcriber.transcribe("https://storage.googleapis.com/aai-web-samples/Custom-Home-Builder.mp3")

# Check for transcription errors

if transcript.status == aai.TranscriptStatus.error:

print(f"Transcription failed: {transcript.error}")

exit(1)

print(f"TRANSCRIPT:\n{transcript.text}\n")

# --- 2. Define the prompt for the LLM Gateway ---

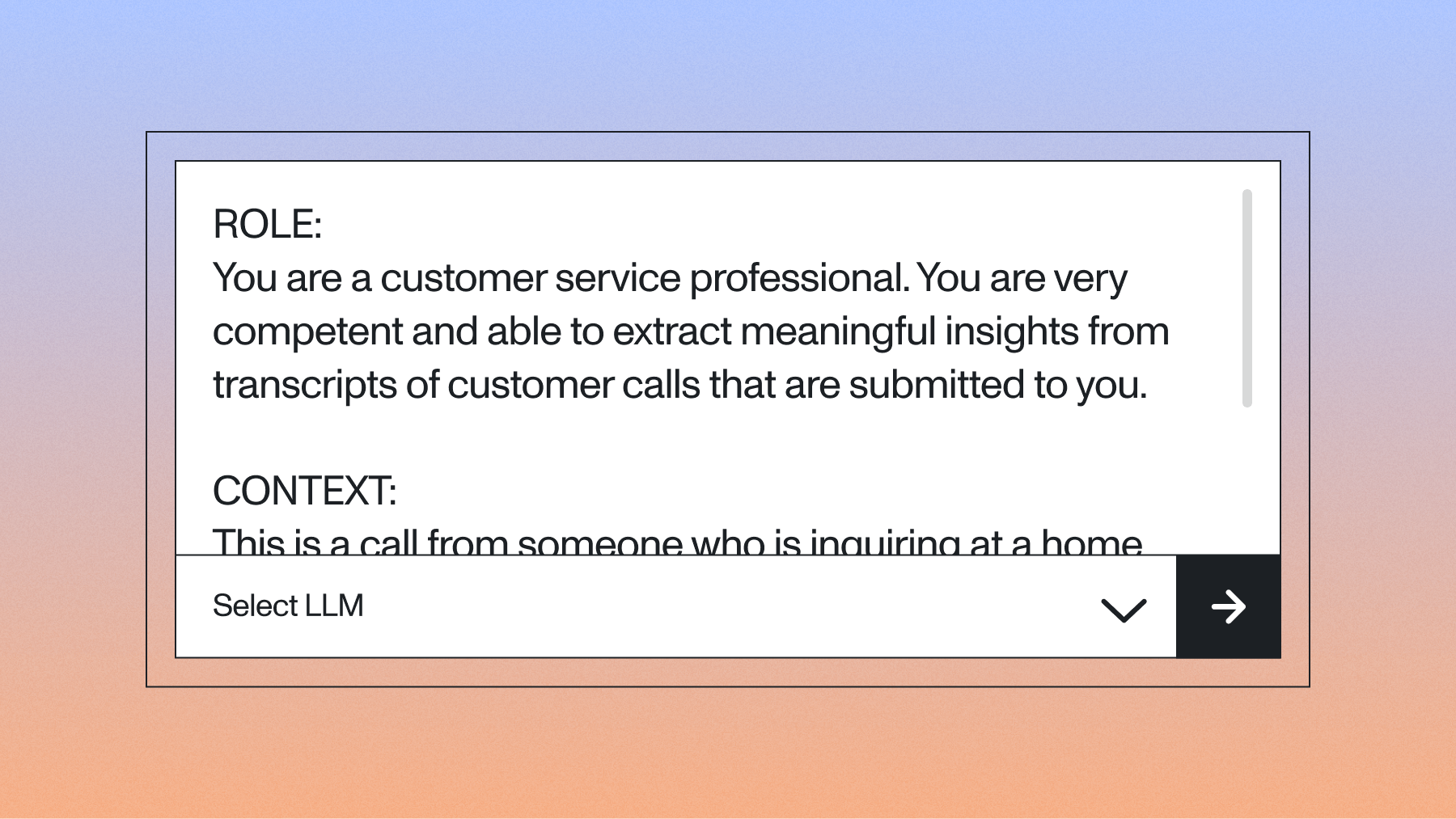

prompt = """

ROLE:

You are a customer service professional. You are very competent and able to extract meaningful insights from transcripts of customer calls that are submitted to you.

CONTEXT:

This is a call from someone who is inquiring at a home building company

INSTRUCTION:

Respond to the following command: "Provide a short summary of the phone call, and list any outstanding action items after the summary. Finally, provide the caller's contact information. Do not include a preamble."

FORMAT:

SUMMARY:

a one or two sentence summary

ACTION ITEMS:

a bulleted list of sufficiently detailed action items

CONTACT INFORMATION:

Name: Last name, first name

Phone number: The caller's phone number

""".strip()

# --- 3. Extract insights with the LLM Gateway ---

llm_gateway_data = {

"model": "claude-3-5-haiku-20241022", # A fast and capable model

"messages": [

{

"role": "user",

"content": f"{prompt}\\n\\nTranscript: {transcript.text}"

}

],

"max_tokens": 1000

}

headers = {

"authorization": api_key

}

# Make the request to the LLM Gateway

response = requests.post(

"https://llm-gateway.assemblyai.com/v1/chat/completions",

headers=headers,

json=llm_gateway_data

)

# Check for errors

if response.status_code != 200:

print(f"LLM Gateway request failed: {response.text}")

exit(1)

# Print the result

result = response.json()

print("--- CALL INSIGHTS ---")

print(result["choices"][0]["message"]["content"].strip())

This script performs three main steps. First, it transcribes the audio file and checks for any errors. Second, it defines a detailed prompt for the LLM. Finally, it sends the transcript text and prompt to the AssemblyAI LLM Gateway via an HTTP POST request to get the structured insights.

Now, run the script from your terminal:

```bash
python main.py
```

After a few moments, you will be presented with the phone call insights extracted by the LLM:

SUMMARY:

- The caller is interested in getting an estimate for building a house on a property he is looking to purchase in Westchester.

ACTION ITEMS:

- Have someone call the customer back today to discuss building estimate.

- Set up time for builder to assess potential property site prior to purchase.

CONTACT INFORMATION:

Name: Lindstrom, Kenny

Phone number: 610-265-1715

If you check the call transcript (or listen to the original call), you will see that all of the information is indeed accurate.
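Because the prompt fixes the section headers, the plain-text reply can be turned into a Python dictionary for storage. The following is a minimal sketch that assumes the model follows the FORMAT block exactly (each header alone on its own line, ending in a colon); replies that deviate will need more lenient parsing or a JSON output format. `parse_sections` and `parse_contact` are illustrative helpers, not part of the AssemblyAI SDK.

```python
import re
from typing import Dict, List

# Headers taken from the FORMAT block of the prompt in main.py
SECTION_HEADERS = {"SUMMARY", "ACTION ITEMS", "CONTACT INFORMATION"}


def parse_sections(text: str) -> Dict[str, List[str]]:
    """Split the LLM's reply into {header: [lines]} using the prompt's headers."""
    sections: Dict[str, List[str]] = {}
    current = None
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line:
            continue  # skip blank lines between items
        if line.endswith(":") and line[:-1] in SECTION_HEADERS:
            current = line[:-1]
            sections[current] = []
        elif current is not None:
            # Drop a leading bullet marker such as "- " or "* "
            sections[current].append(re.sub(r"^[-*]\s+", "", line))
    return sections


def parse_contact(lines: List[str]) -> Dict[str, str]:
    """Turn lines like 'Name: Lindstrom, Kenny' into {'Name': 'Lindstrom, Kenny'}."""
    return dict(line.split(": ", 1) for line in lines if ": " in line)
```

In main.py you could call `parse_sections(result["choices"][0]["message"]["content"])` and then pass the `"CONTACT INFORMATION"` entry to `parse_contact` before saving the fields.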

Advanced Insight Extraction Techniques

Beyond basic summaries, you can extract more granular insights by refining your prompt:

- Sentiment analysis (positive, negative, neutral)

- Topic identification and categorization

- Structured JSON output for database integration

To get structured output, you can instruct the LLM Gateway to respond in JSON format. This enables seamless integration with other systems. For example, you could modify the FORMAT instruction in your prompt:

```text
FORMAT:
Respond with a valid JSON object containing three keys: 'summary', 'action_items', and 'sentiment'. The sentiment should be one of 'positive', 'neutral', or 'negative'.
```

You can also use the response_format parameter in your LLM Gateway request to enforce a JSON output. See the API reference for more details.
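Even with a JSON instruction, it's worth validating the reply before writing it to a database. The sketch below assumes the three keys from the FORMAT example above and unwraps a Markdown code fence if the model adds one (a common habit of chat models, not guaranteed behavior). `parse_insights` is an illustrative helper, not an SDK function.

```python
import json
import re

REQUIRED_KEYS = {"summary", "action_items", "sentiment"}
ALLOWED_SENTIMENTS = {"positive", "neutral", "negative"}


def parse_insights(llm_text: str) -> dict:
    """Parse and validate the JSON object requested by the FORMAT instruction."""
    text = llm_text.strip()
    # Some models wrap JSON in a Markdown code fence; unwrap it if present
    fenced = re.match(r"^`{3}(?:json)?\s*(.*?)\s*`{3}$", text, re.DOTALL)
    if fenced:
        text = fenced.group(1)
    data = json.loads(text)  # raises json.JSONDecodeError (a ValueError) if malformed
    if not isinstance(data, dict):
        raise ValueError("Expected a JSON object")
    missing = REQUIRED_KEYS - set(data)
    if missing:
        raise ValueError(f"Missing keys: {sorted(missing)}")
    if data["sentiment"] not in ALLOWED_SENTIMENTS:
        raise ValueError(f"Unexpected sentiment: {data['sentiment']!r}")
    return data
```

Usage: `insights = parse_insights(result["choices"][0]["message"]["content"])`. Raising `ValueError` on bad output lets the caller decide whether to retry the LLM request or flag the call for manual review.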

Error Handling and Performance Optimization

In a production environment, you need to account for potential issues. The AssemblyAI SDK raises an aai.errors.APIError for problems like authentication or network failures. Additionally, it's crucial to check the status of the transcript object itself, as the API call can succeed while the transcription job fails. Here is a more robust error handling pattern:

```python
import assemblyai as aai
import requests

AUDIO_URL = "https://storage.googleapis.com/aai-web-samples/Custom-Home-Builder.mp3"
transcriber = aai.Transcriber()

try:
    transcript = transcriber.transcribe(AUDIO_URL)
    # The API call can succeed while the transcription job itself fails
    if transcript.status == aai.TranscriptStatus.error:
        raise RuntimeError(f"Transcription failed: {transcript.error}")

    # Assumes 'headers' and 'llm_gateway_data' are built from transcript.text as in main.py
    response = requests.post(
        "https://llm-gateway.assemblyai.com/v1/chat/completions",
        headers=headers,
        json=llm_gateway_data,
        timeout=60,
    )
    response.raise_for_status()  # Raises an HTTPError for bad responses (4xx or 5xx)
    result = response.json()
    # ... process result ...

except aai.errors.APIError as e:
    # Handles SDK-specific API errors
    print(f"AssemblyAI API Error: {e}")
except requests.exceptions.RequestException as e:
    # Handles network and HTTP errors for the LLM Gateway request
    print(f"LLM Gateway Request Error: {e}")
except Exception as e:
    # Handles other errors, including transcription job failures
    print(f"An error occurred: {e}")
```

For performance, especially when dealing with a high volume of calls, avoid blocking your application while waiting for a transcription to complete. You can process files asynchronously by providing a webhook URL in your transcription request. AssemblyAI will send a notification to your webhook once the job is complete, allowing you to build a more resilient, event-driven system.
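A minimal receiver for those notifications might look like the following, using only Python's standard library. The payload shape (`transcript_id` and `status` fields) is an assumption based on AssemblyAI's webhook notifications; check the webhook documentation for the exact fields. On the submitting side, the SDK's `TranscriptionConfig` accepts a `webhook_url`; confirm the details in the SDK reference. The handler's follow-up work is left as a placeholder.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Optional


def completed_transcript_id(body: bytes) -> Optional[str]:
    """Return the transcript ID if the notification reports a finished job, else None."""
    payload = json.loads(body)  # raises ValueError on malformed JSON
    # Assumed payload shape: {"transcript_id": "...", "status": "completed" or "error"}
    if isinstance(payload, dict) and payload.get("status") == "completed":
        return payload.get("transcript_id")
    return None


class TranscriptWebhookHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        try:
            transcript_id = completed_transcript_id(self.rfile.read(length))
        except ValueError:
            self.send_response(400)  # reject bodies that are not valid JSON
            self.end_headers()
            return
        if transcript_id:
            # Placeholder: enqueue a job that fetches the transcript and runs the LLM step
            print(f"Transcript ready: {transcript_id}")
        # Acknowledge quickly so the sender does not time out
        self.send_response(200)
        self.end_headers()


def serve(port: int = 8000) -> None:
    """Run the receiver; it must be reachable at the webhook URL you register."""
    HTTPServer(("", port), TranscriptWebhookHandler).serve_forever()
```

Keeping the handler thin (parse, hand off, acknowledge) is the point of the event-driven design: the slow transcript fetch and LLM call happen elsewhere, not inside the HTTP request.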

Use Cases for Extracting Call Insights

Extracting insights from phone calls has use cases across many industries, and sales intelligence is one of the fastest-growing Voice AI categories. A few examples:

- lead intelligence

- conversational data analysis

- sales coaching

To learn more about potential use cases for extracting call insights, you can check out this blog.

Scaling Call Insight Extraction for Production

Production systems require robust architecture beyond single scripts. Here's the recommended pattern:

- Task Queue: Use Celery or RQ to manage transcription jobs

- Worker Process: Handles API calls and LLM processing

- Data Pipeline: Store insights in database, display on dashboard

This decoupled architecture provides better scalability and resilience.
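The queue, worker, and storage stages above can be sketched in a single process with only the standard library. This is a stand-in for Celery or RQ, not their API: `queue.Queue` plays the task queue, threads play worker processes, and an in-memory SQLite table plays the insights store. `extract_insights` is a hypothetical callable you would supply, for example a function wrapping the transcription and LLM Gateway calls from main.py.

```python
import queue
import sqlite3
import threading
from typing import Callable, Iterable, Tuple


def run_pipeline(
    audio_urls: Iterable[str],
    extract_insights: Callable[[str], str],  # hypothetical: transcribe + LLM call
    num_workers: int = 4,
) -> sqlite3.Connection:
    """Fan audio URLs out to worker threads and store each result in SQLite."""
    jobs: "queue.Queue[str]" = queue.Queue()
    results: "queue.Queue[Tuple[str, str, str]]" = queue.Queue()

    def worker() -> None:
        while True:
            url = jobs.get()
            try:
                results.put((url, "done", extract_insights(url)))
            except Exception as exc:  # one failed call must not kill the worker
                results.put((url, "error", str(exc)))
            finally:
                jobs.task_done()

    for _ in range(num_workers):
        threading.Thread(target=worker, daemon=True).start()
    for url in audio_urls:
        jobs.put(url)
    jobs.join()  # block until every job has been processed

    # Data pipeline stage: persist the insights for a dashboard to query later
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE insights (audio_url TEXT, status TEXT, body TEXT)")
    while not results.empty():
        db.execute("INSERT INTO insights VALUES (?, ?, ?)", results.get())
    db.commit()
    return db
```

In production you would replace the in-process queue with Celery or RQ backed by a broker such as Redis, so jobs survive restarts and workers can run on separate machines; the shape of the worker function stays the same.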

Build Production-Ready Applications with Voice AI

You now have the fundamental building blocks to transcribe and understand phone calls at scale. By combining our speech-to-text API with the LLM Gateway, you can build sophisticated applications that uncover deep insights from voice data, helping your team make smarter, faster decisions.

Now that you see how it works, you can start building your own application. Try our API for free to begin extracting insights from your own audio data. You can also test the LLM Gateway without writing any code in our Playground.

Frequently Asked Questions About Extracting Call Insights with Python

How do I handle different audio formats?

The AssemblyAI Python SDK supports most audio and video formats automatically, with FLAC or 16-bit PCM WAV recommended for optimal accuracy.

What's the best way to process very long phone calls?

For calls lasting several hours or more, break transcripts into smaller chunks by speaker or time interval before processing them with the LLM Gateway, because every LLM has a fixed context window. A common estimate is that voice conversations generate 125-150 tokens per minute, so a one-hour call produces roughly 7,500-9,000 tokens, and long calls can exceed an LLM's 'memory' and cause important context to be lost.
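As a rough illustration of chunking by time interval: at 125-150 tokens per minute, a 20-minute window is about 2,500-3,000 tokens. The sketch below groups consecutive utterances into windows of at most `max_minutes`. The `Utterance` tuple is a local stand-in so the example runs on its own; with speaker labels enabled, the SDK's `transcript.utterances` objects should expose comparable `speaker`, `start` (milliseconds), and `text` attributes that you could map into it (verify in the SDK reference).

```python
from typing import List, NamedTuple, Optional


class Utterance(NamedTuple):
    speaker: str
    start_ms: int  # start time of the utterance in milliseconds
    text: str


def chunk_by_time(utterances: List[Utterance], max_minutes: float) -> List[str]:
    """Group consecutive utterances into chunks that each start within max_minutes."""
    max_ms = max_minutes * 60_000
    chunks: List[List[str]] = []
    chunk_start: Optional[int] = None
    for u in utterances:
        # Open a new chunk when this utterance begins past the current window
        if chunk_start is None or u.start_ms - chunk_start >= max_ms:
            chunks.append([])
            chunk_start = u.start_ms
        # Keep speaker labels so the LLM can tell caller and agent apart
        chunks[-1].append(f"Speaker {u.speaker}: {u.text}")
    return ["\n".join(lines) for lines in chunks]
```

Each chunk can then be sent to the LLM Gateway with the same prompt, and the per-chunk results combined in a final summarization request. Note that a single utterance is never split, so an unusually long monologue can still produce an oversized chunk.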

How can I improve the accuracy of the extracted insights?

Use specific instructions with ROLE, CONTEXT, and FORMAT sections in your prompt, and include examples for complex tasks (few-shot prompting). Additionally, it is a best practice to mitigate hallucinations by using techniques like retrieval-augmented generation (RAG) to ground the LLM's responses in verified data, which is especially important for customer service use cases.
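Few-shot prompting with the chat completions format used in main.py can be sketched as one worked example (a sample transcript plus the ideal answer) placed before the real request. This assumes the LLM Gateway accepts multi-turn `messages` with `assistant` turns in the usual chat-completions shape; `build_few_shot_messages` and the example strings are hypothetical.

```python
from typing import Dict, List


def build_few_shot_messages(
    prompt: str,
    example_transcript: str,
    example_insights: str,
    transcript: str,
) -> List[Dict[str, str]]:
    """Build a message list with one worked example before the real call."""
    return [
        # The worked example: a sample transcript and the answer we want for it
        {"role": "user", "content": f"{prompt}\n\nTranscript: {example_transcript}"},
        {"role": "assistant", "content": example_insights},
        # The real request, formatted exactly like the example
        {"role": "user", "content": f"{prompt}\n\nTranscript: {transcript}"},
    ]
```

Usage: `llm_gateway_data["messages"] = build_few_shot_messages(prompt, sample_text, sample_answer, transcript.text)`. Keeping the example in the same format as the real request is what teaches the model the expected output shape; each extra example also adds to the token count discussed above.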
