The State of Python Speech Recognition in 2021

A complete tutorial on speech recognition with Python. Compare results from popular libraries like wav2letter, SpeechRecognition, and DeepSpeech, as well as popular cloud APIs.

So you want to do Automatic Speech Recognition, or ASR, in Python and you’ve found that there are many different options available. Have no fear, we’re here to help. We can separate our Python speech recognition and Speech-to-Text options into two main categories: open source and cloud.

Open source solutions are libraries that are open source (usually on github) and that you import into your program and use programmatically, doing the computation on your own resources. Cloud solutions for Python speech recognition do the computation on cloud resources and are usually exposed via API endpoints you can send use.

Open Source vs Cloud Python Speech Recognition Options

One of the biggest advantages of Open Source Python Speech Recognition solutions is that they are open source. Open source code means you can see the source code. You can know exactly what’s being done, how it’s being done, and when it’s being done. If you are a highly advanced engineer, another big advantage of open source python speech recognition solutions is that you can go in and change the code yourself. The biggest drawback of open source solutions is that the compute power required to do speech recognition will have to come from you. Either locally, or on your own cloud resources. For many developers, that’s a hassle. If you’re developing for a company or corporation that has a lot of cloud resources and money to use, this is not a deal breaker. However, if you’re not made of money, this is a drawback. Another important consideration is that open source python speech recognition options are usually a lot less accurate than cloud based API options. If accuracy is important in your project, you’re probably better off with a cloud solution.

Cloud solutions for building a speech recognition project in Python have the big advantage of being easy to use, way more accurate than open source options, and don’t require you to host any models on your own hardware.. The main drawback about some cloud solutions is the cost.. Luckily, there are free options out there that offer customization such as customizing the vocabulary, paragraph detection, and Speaker Diarization for building a simple speech recognition project in Python. One example is AssemblyAI’s Speech-to-Text API. Another big advantage of cloud solutions is that they are much easier to implement than open source options.

Key Takeaway: when you’re picking your solution for your python speech recognition project, the main things to think about are accuracy, cost, and ease of implementation.

Open Source Python Speech Recognition Options

There are many open source Python speech recognition options. We’ll cover the three most prolific ones here. These open source python speech recognition libraries are wav2letter, SpeechRecognition, and DeepSpeech.

wav2letter

The open source library wav2letter was first developed by Facebook. It is now ported to a new open source library called Flashlight, but still mostly known to developers by it’s old name, wav2letter. The cool thing about wav2letter is that it is completely built on convolutional neural networks (CNNs) from the acoustic modeling to the language modeling. This is a rare sight to see because natural language processing (NLP) has been dominated by recurrent neural network (RNN) based models since 2014.

Another interesting thing about wav2letter, and a drawback for programmers that are not very experienced, is that it needs to be built to be used in Python, and cannot be simply installed with a pip command. In fact, you’ll need a C++ compiler in order to install wav2letter! If you’re building a simple speech recognition project in Python, this will certainly throw a wrench in your plans. Another drawback is that since it was ported to Flashlight, now you’ll also need Flashlight as a dependency to use wav2letter.

Just the act of installing wav2letter itself could be a mini-project for you to do. If you’re interested in pursuing a wav2letter project because you like the sound of a Python speech recognition project that is a) difficult to start, b) has many dependencies, and c) is built on a cool neural network structure that is slightly different than most other speech to text libraries, then wav2letter is for you and you can start by reading this guide on how to install wav2letter.

SpeechRecognition

How could a library called SpeechRecognition not be the best open source library for speech recognition? Unfortunately, it’s hard to say it’s a real open source solution for speech recognition because what it really does is wrap other speech recognition technologies. Now admittedly, it does support a lot of these other technologies including Google Cloud speech to text, CMU Sphinx, Wit, Azure, Houndify, IBM, and Snowboy. Of course, the only two that you can use offline (locally) are CMU Sphinx and Snowboy. What are the other solutions? Cloud based solutions that are wrapped in an open source library so it looks like you have more customizability than you do.

If you’re not looking for a cloud solution, then the SpeechRecognition Python open source library is probably not for you. The only real offline solution it offers is the use of CMU Sphinx, Snowboy is no longer supported by the creators and I couldn’t even find the link to it’s documentation online anymore. If you’re able to find it, please me tweet at @yujian_tang because out of all the speech recognition libraries I’ve seen, it has the second coolest name (Sphinx is for sure cooler).

The nice thing about CMU Sphinx is that it has a lot of developer documentation and FAQ. It’s built on over 20 years of research conducted by Carnegie Mellon University, a top tier (some may argue the best) computer science school in the world. It is not as resource intensive as some other speech to text Python solutions, and it supports multiple languages. If at this point, you feel like I’m not even talking about the SpeechRecognition library anymore, it is because I’m not. SpeechRecognition is a simple wrapper around other speech to text solutions. In fact, it LITERALLY calls another speech to text service to do it’s transcription. Although it is nice that it integrates them all into one package so you can use multiple services with only one library download! I would suggest this library if you are testing out all of the Cloud services that it provides wrappers for.

import speech_recognition as sr

# obtain audio from the microphone

r = sr.Recognizer()

with sr.Microphone() as source:

print("Say something!")

audio = r.listen(source)

# recognize speech using Sphinx

try:

print("Sphinx thinks you said " + r.recognize_sphinx(audio))

except sr.UnknownValueError:

print("Sphinx could not understand audio")

except sr.RequestError as e:

print("Sphinx error; {0}".format(e))DeepSpeech

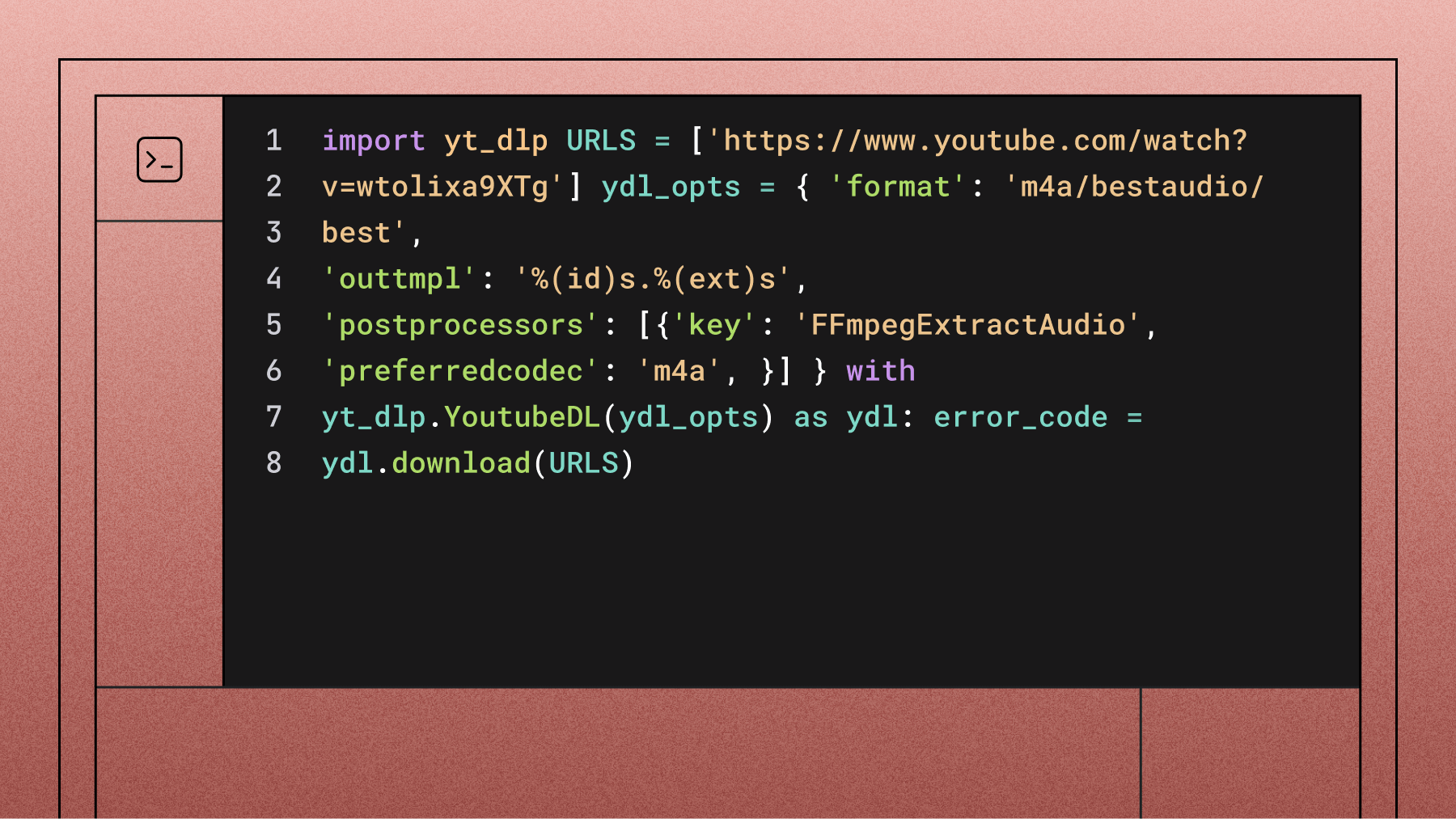

DeepSpeech was originally a paper about speech recognition techniques produced by Baidu’s research team. The library I linked is the open source project that is on Mozilla’s GitHub. DeepSpeech can run offline and on devices. DeepSpeech works on a wide range of devices from Raspberry Pi devices to actual GPUs that are used to train models in industry.

DeepSpeech is fairly easy to download and get started with, all you have to do is use pip to install it and then download the audio files like the code snippet below. There’s actually no rules that say you have to use DeepSpeech’s English models either, if you have your own you can totally use those instead.

# Install DeepSpeech

pip3 install deepspeech

# If using GPU

pip3 install deepspeech-gpu

# Download pre-trained English model files

curl -LO https://github.com/mozilla/DeepSpeech/releases/download/v0.9.3/deepspeech-0.9.3-models.pbmm

curl -LO https://github.com/mozilla/DeepSpeech/releases/download/v0.9.3/deepspeech-0.9.3-models.scorerRemember that things have to run on-device for you to use DeepSpeech. This means that it’s going to take up a lot of local compute resources. If you’re going to train a DeepSpeech model on a GPU, make sure you have the required CUDA files. DeepSpeech for GPUs depends on CUDA 10.1 and CuDNN 7.6. Additionally, you’ll have to install deepspeech-gpu with pip. I recommend DeepSpeech if you personally want to run your models to do speech recognition locally. I also think that DeepSpeech is a fairly advanced library to be used as intended, so I also recommend this for advanced programmers who will be doing a lot of customization.

Cloud Python Speech Recognition

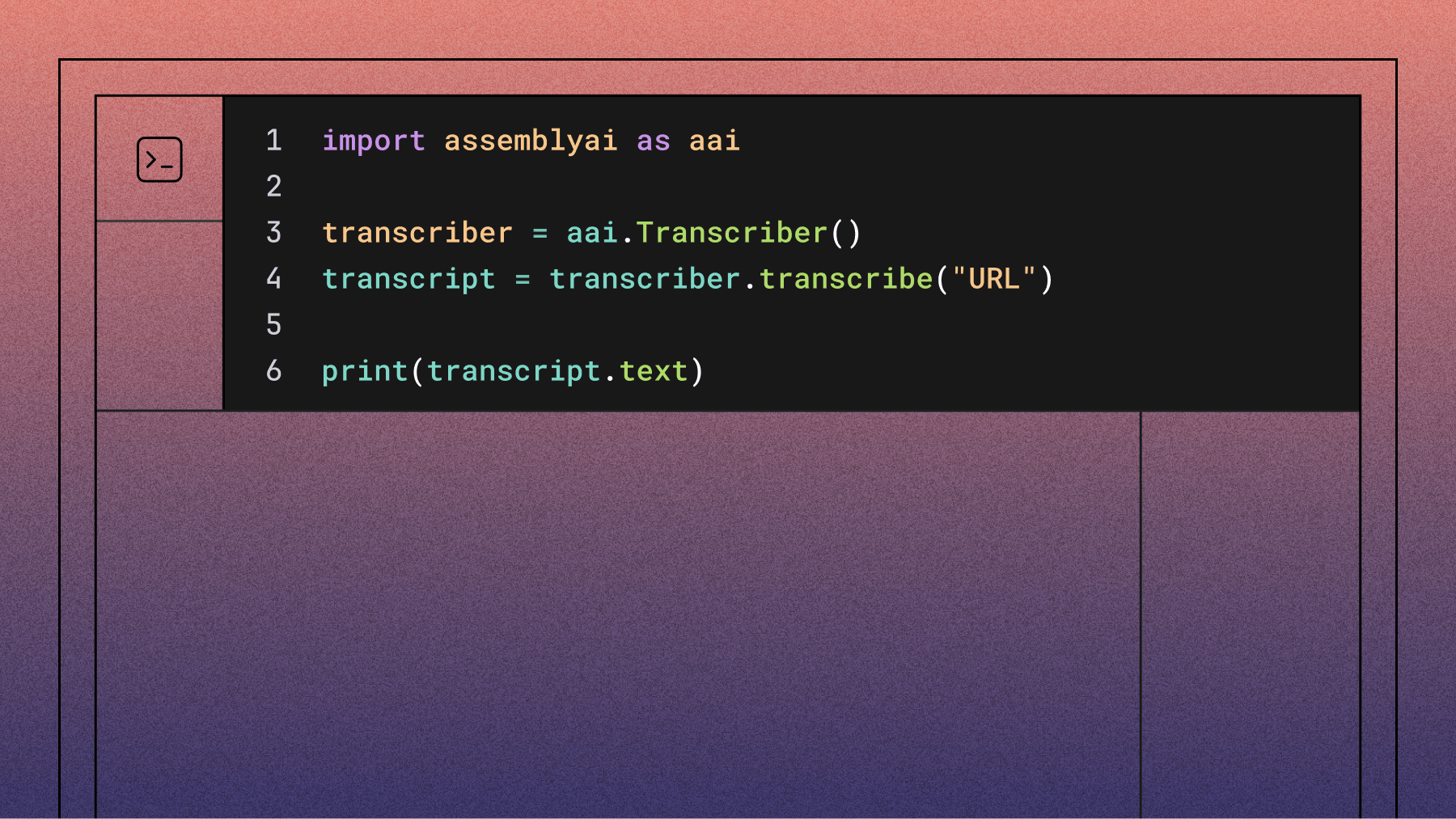

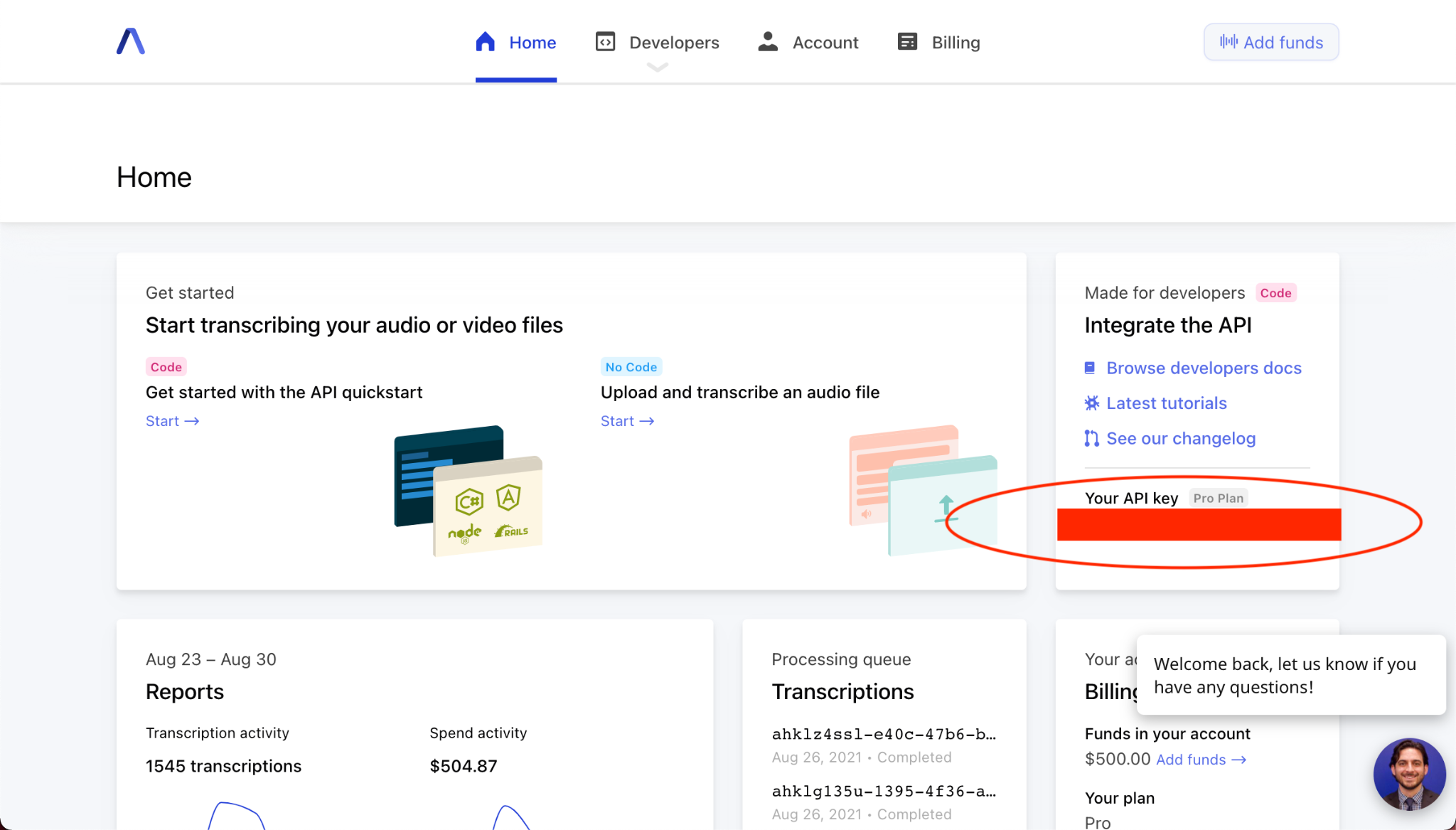

AssemblyAI creates a fast, automatic speech recognition API that is free for developers to use. The cloud hosted API is extremely simple to use and includes many features. In this section I’ll go over how to use the AssemblyAI speech to text API to do transcription, how to do speaker diarization, how to add custom vocabulary to your transcription, and how to create paragraphs from your generated transcription. All you need to do to follow the rest of this tutorial is to get a free speech to text API key and grab an audio file you want to transcribe.

You should see your API key where I’ve circled in the picture.

Using AssemblyAI’s Speech to Text API

AssemblyAI’s Speech-to-Text API is fast, accurate, and super simple to use. It also great data privacy and security. Starting with our audio file, first we’ll upload it to the AssemblyAI upload endpoint, then we’ll send a transcription request to transcribe the file we uploaded. If you have your audio file uploaded somewhere on the web (like in an S3 bucket), you can skip this step.

auth_key = '<your AssemblyAI API key here>'

headers = {"authorization": auth_key, "content-type": "application/json"}

def read_file(filename):

with open(filename, 'rb') as _file:

while True:

data = _file.read(5242880)

if not data:

break

yield data

upload_response = requests.post('https://api.assemblyai.com/v2/upload', headers=headers, data=read_file('<path to your file here>'))

audio_url = upload_response.json()[‘upload_url’]All you need to get your transcription is make a request to the AssemblyAI transcription endpoint with a link to the uploaded audio file, wait a little bit, and then make another request to the transcription endpoint with the id of your request to get your transcription back in the response.

transcript_request = {'audio_url': audio_url}

transcript_response = requests.post("https://api.assemblyai.com/v2/transcript", json=transcript_request, headers=headers)

_id = transcript_response.json()['id']After sending the transcription response, we will wait around for a brief interval. The docs currently suggest 30% of the length of the audio file, but in my empirical usage, I’ve found it to be a bit faster than that. Once we’ve waited a reasonable amount of time, we just send a polling request and download our transcription.

polling_response = requests.get("https://api.assemblyai.com/v2/transcript/" + _id, headers=headers)

if polling_response.json()['status'] != 'completed':

print(polling_response.json())

else:

with open(_id + '.txt', 'w') as f:

f.write(polling_response.json()['text'])

print('Transcript saved to', _id, '.txt')Custom Vocabulary with AssemblyAI’s Speech Recognition API

One of the coolest things about AssemblyAI’s speech to text API is the simplicity of requesting different features for your transcription. You can pass custom vocabulary to the AI transcription by using a word boost parameter and passing in a list of words that you want the model to recognize. The transcript request would then look like the code below. You don’t need to add the boost param keyword unless you’re looking to increase or decrease the strength of your word boost.

transcript_request = {

'audio_url': audio_url,

'word_boost': ['boost', 'these', 'words'],

'boost_param': 'high

}Some rules to follow around using custom vocabulary with the AssemblyAI speech to text API - 1) remove punctuation, 2) each word should be in it’s spoken form, and 3) there should be no spaces between letters of an acronym.

Speaker Diarization with AssemblyAI’s Speech Recognition API

You can also get speaker diarization from your transcript with the pass of an additional API parameter. A transcript request that will also return speaker labels to you can be made like the code below.

transcript_request = {

'audio_url': audio_url,

'speaker_labels': 'true'

}An example of a response from a transcript with speaker diarization could look like the JSON below. You can expect to find your speaker diarization results under the “utterances” key. The speakers will be labeled from A to Z.

{

"acoustic_model": "assemblyai_default",

"audio_duration": 150.766167800454,

"audio_url": "https://app.assemblyai.com/static/media/phone_demo_clip_1.wav",

"confidence": 0.922175805047867,

"dual_channel": true,

"format_text": true,

"id": "5552830-d8b1-4e60-a2b4-bdfefb3130b3",

"language_model": "assemblyai_default",

"punctuate": true,

"status": "completed",

"text": "Hi, I'm joy. Hi, I'm sharon. Do you have kids in school. ...",

# the "utterances" key below is a list of the turn-by-turn utterances found in the audio

"utterances": [

{

# speakers will be marked as "A" through "Z"

"speaker": "A",

"confidence": 0.97,

"end": 1380,

"start": 0,

# the text for the entire speaker "turn"

"text": "Hi, I'm joy.",

# the individual words from the speaker "turn"

"words": [

{

"speaker": "A",

"confidence": 1.0,

"end": 320,

"start": 0,

"text": "Hi,"

},

...

]

},

# the next "turn" by speaker "B" - for example

{

"speaker": "B",

"confidence": 0.94,

"end": 3260,

"start": 0,

"text": "Hi, I'm sharon.",

"words": [

{

"speaker": "B",

"confidence": 1.0,

"end": 480,

"start": 0,

"text": "Hi,"

},

...

]

},

{

"speaker": "A",

"confidence": 0.94,

"end": 5420,

"start": 2820,

"text": "Do you have kids in school.",

"words": [

{

"speaker": "A",

"confidence": 1.0,

"end": 4300,

"start": 2820,

"text": "Do"

},

...

]

},

],

# all of the words found in the audio across all speakers

"words": [

{

"speaker": "A",

"confidence": 1.0,

"end": 320,

"start": 0,

"text": "Hi,"

},

{

"speaker": "A",

"confidence": 1.0,

"end": 720,

"start": 320,

"text": "do"

},

...

]

}Getting Paragraphs from AssemblyAI’s Speech Recognition API

After you’ve done your transcription and passed in whatever parameters you needed such as custom vocabulary or speaker diarization, there’s also an additional endpoint you can hit if you also want to further process the transcript into paragraphs with almost no additional work. All you have to do is send a GET request to the transcript endpoint of your transcript id + ‘/paragraphs’ like shown below.

paragraphs_endpoint = "https://api.assemblyai.com/v2/transcript/" + _id + "/paragraphs"

paragraphs_response = requests.get(paragraphs_endpoint, headers=headers)

pprint.pprint(paragraphs_response.json())

{

"paragraphs":[

{

"text":"Hello. I'm Sunny Williams. I'm up here on the International Space Station. So this is No two. This is a really cool module.",

"start":770,

"end":10710,

"confidence":0.97,

"words":[

{"text": "Hello.", "start": 100, "end": 1000, "confidence": 0.99},

"..."

]

},

...

],

"id": "3otc28umy-25ae-4446-b133-70e244be5208",

"confidence": 0.951530612244907,

"audio_duration": 521.352

}Real Time Speech Recognition in Python

Finally, I’ll touch on one more thing, AssemblyAI’s speech to text API has the ability to do real time streaming speech recognition. Real time speech recognition requires an upgrade to your account so it’s not part of the free speech to text API.

A Summary of the State of Python Speech Recognition in 2021

In this post, I’ve gone over the current state of the art technologies in speech recognition for Python users from three popular open source libraries, wav2letter, SpeechRecogntion, and DeepSpeech, to a cloud solution like AssemblyAI’s speech recognition API. Wav2letter is an open source library that uses a pure CNN architecture in their models and was initially created by Facebook. SpeechRecognition is mostly a wrapper library around some cloud APIs and a model based on CMUSphinx, research done at Carnegie Mellon University. DeepSpeech was originally conceptualized by scientists from Baidu who published a paper on it; the open source library is maintained by Mozilla, and it is the only library I covered that runs on-device.

The only cloud provider I covered here was AssemblyAI’s automatic speech recognition API. AssemblyAI’s speech to text API is fast, accurate, and simple to use. Tons of features such as speaker diarization, custom vocabulary, and paragraph extraction are also provided and as easy to implement as sending an HTTP request. For more information about AssemblyAI follow us on Twitter @assemblyai, for more Python info or programming memes you can follow the author @yujian_tang.

Lorem ipsum dolor sit amet, consectetur adipiscing elit, sed do eiusmod tempor incididunt ut labore et dolore magna aliqua. Ut enim ad minim veniam, quis nostrud exercitation ullamco laboris nisi ut aliquip ex ea commodo consequat. Duis aute irure dolor in reprehenderit in voluptate velit esse cillum dolore eu fugiat nulla pariatur.