Top 8 speaker diarization libraries and APIs in 2026

In this blog post, we'll look at how speaker diarization works, why it's useful, some of its current limitations, and the top eight speaker diarization libraries and APIs for product teams and developers to use.

In its simplest form, speaker diarization answers the question: who spoke when?

In the field of Automatic Speech Recognition (ASR), speaker diarization refers to (A) automatically detecting the number of speakers in an audio file, and (B) assigning each word in that file to the correct speaker.

Today, many modern speech-to-text APIs and speaker diarization libraries apply advanced AI models to perform tasks (A) and (B) with what industry analysis shows is near human-level accuracy, significantly increasing the utility of speaker diarization APIs. Recent advances in 2025 have dramatically improved performance in challenging real-world conditions, with updates like AssemblyAI's new speaker embedding model achieving documented improvements of 30% in noisy environments.

Along the way, we'll examine the different approaches available and help you choose the right solution for your specific needs.

What is speaker diarization?

Speaker diarization answers the question: "Who spoke when?" It involves segmenting and labeling an audio stream by speaker, allowing for a clearer understanding of who is speaking at any given time. This process is essential for automatic speech recognition (ASR), meeting transcription, and call center analytics, transforming raw audio into structured, actionable insights.

Speaker diarization performs two key functions:

- Speaker Detection: Identifying the number of distinct speakers in an audio file.

- Speaker Attribution: Assigning segments of speech to the correct speaker.

The result is a transcript where each segment of speech is tagged with a speaker label (e.g., "Speaker A," "Speaker B"), making it easy to distinguish between different voices. This improves the readability of transcripts and increases the accuracy of analyses that depend on understanding who said what.

How does speaker diarization work?

The fundamental task of speaker diarization is to apply speaker labels (i.e., "Speaker A," "Speaker B," etc.) to each utterance in the transcription text of an audio/video file.

Accurate speaker diarization requires many steps. The first step is to break the audio file into a set of "utterances." What constitutes an utterance? Generally, an utterance is between half a second and 10 seconds of speech. To illustrate this, let's look at the examples below:

Utterance 1:

Hello my name is Bob.

Utterance 2:

I like cats and live in New York City.

AI models require sufficient audio data for accurate speaker identification, similar to human recognition patterns. Audio segmentation uses silence detection and punctuation markers to create utterances between 0.5 and 10 seconds long.

Our research shows diarization accuracy drops significantly when utterances are less than one second:

- Optimal range: 1-10 seconds per utterance

- Minimum threshold: 0.5 seconds for basic detection

- Accuracy degradation: Below 1 second shows measurable performance loss
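The segmentation step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation of any particular product: the `Word` tuple, the `split_into_utterances` helper, and the 0.4-second pause threshold are hypothetical. Only the 10-second cap comes from the text.

```python
from typing import NamedTuple


class Word(NamedTuple):
    text: str
    start: float  # seconds
    end: float    # seconds


def split_into_utterances(words, max_pause=0.4, max_len=10.0):
    """Group timestamped words into utterances.

    A new utterance starts when the silence before a word exceeds
    `max_pause` (hypothetical value), when the previous word ends a
    sentence, or when adding the word would make the utterance longer
    than `max_len` seconds.
    """
    utterances, current = [], []
    for word in words:
        if current:
            pause = word.start - current[-1].end
            ended_sentence = current[-1].text.endswith((".", "?", "!"))
            too_long = word.end - current[0].start > max_len
            if pause > max_pause or ended_sentence or too_long:
                utterances.append(current)
                current = []
        current.append(word)
    if current:
        utterances.append(current)
    return utterances


# Made-up word timings for illustration.
words = [
    Word("Hello", 0.0, 0.4), Word("my", 0.5, 0.6), Word("name", 0.6, 0.9),
    Word("is", 0.9, 1.0), Word("Bob.", 1.0, 1.4),
    Word("I", 2.1, 2.2), Word("like", 2.2, 2.5), Word("cats.", 2.5, 3.0),
]
utterances = split_into_utterances(words)
texts = [" ".join(w.text for w in u) for u in utterances]
```

A real system would also merge or drop utterances shorter than the 0.5-second minimum before computing embeddings.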

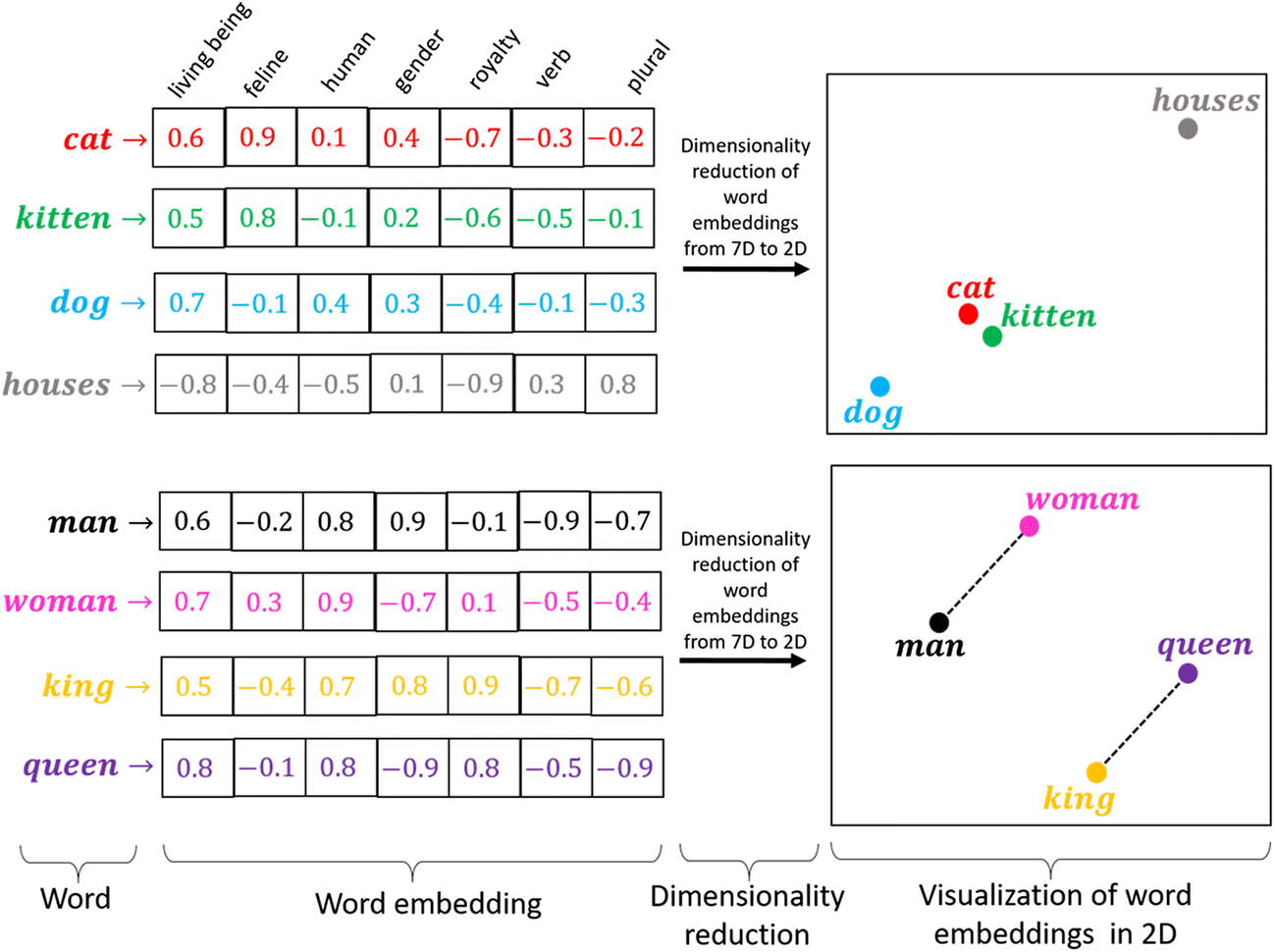

Once an audio file is broken into utterances, those utterances get sent through a deep learning model that has been trained to produce "embeddings" that are highly representative of a speaker's characteristics. An embedding is a deep learning model's low-dimensional representation of an input. For example, the image below shows what the embedding of a word looks like:

We perform a similar process to convert not words, but segments of audio, into embeddings as well.

Next, we need to determine how many speakers are present in the audio file. This is a key feature of a modern speaker diarization model. Legacy speaker diarization systems required knowing how many speakers were in an audio/video file ahead of time, but a major benefit of modern speaker diarization models is that they can accurately predict this number.

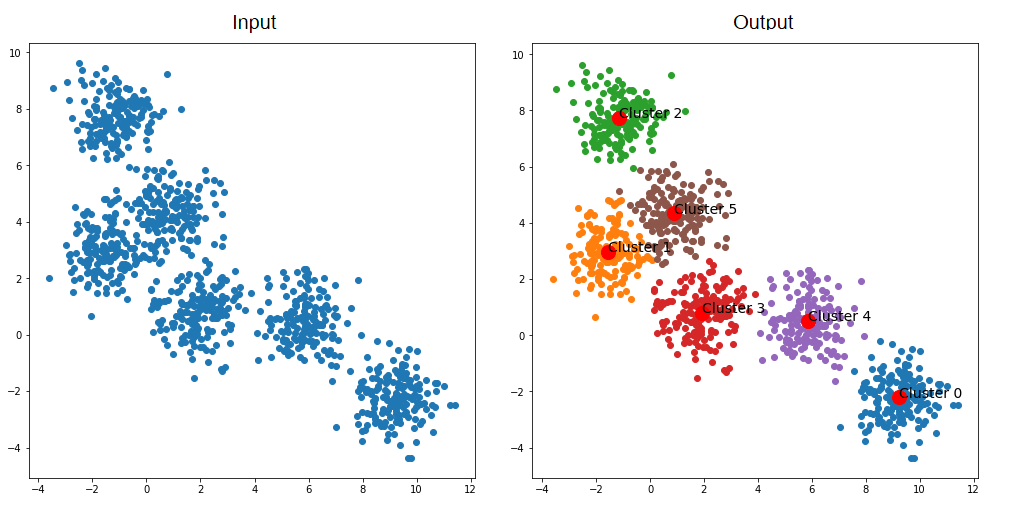

Our first goal here is to overestimate the number of speakers. Using a clustering method, we determine the greatest number of speakers that could reasonably be heard in the audio. Why overestimate? It's much easier to combine the utterances of one speaker who has been incorrectly identified as two than it is to disentangle the utterances of two speakers who have been incorrectly combined into one.

After this initial step, we go back and combine speakers, or disentangle speakers, as needed to get an accurate number.
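To make the "overestimate, then combine" idea concrete, here is a minimal sketch that starts from too many clusters and greedily merges the most similar pair until no pair looks like the same speaker. The helper names, the two-dimensional toy embeddings, and the 0.9 similarity threshold are assumptions for illustration. The text does not specify which merging rule production systems use.

```python
import math


def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def centroid(vectors):
    """Element-wise mean of a list of vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def merge_similar_clusters(clusters, threshold=0.9):
    """Merge the most similar pair of clusters until no pair of
    centroids has cosine similarity of at least `threshold`."""
    clusters = [list(c) for c in clusters]
    while len(clusters) > 1:
        best = None
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                sim = cosine(centroid(clusters[i]), centroid(clusters[j]))
                if best is None or sim > best[0]:
                    best = (sim, i, j)
        sim, i, j = best
        if sim < threshold:
            break
        # j > i, so popping j leaves index i valid.
        clusters[i].extend(clusters.pop(j))
    return clusters


# Toy example: one speaker was wrongly split into two clusters.
over_split = [
    [[1.0, 0.1], [0.9, 0.2]],   # speaker 1
    [[0.95, 0.15]],             # speaker 1 again, split off by mistake
    [[0.1, 1.0], [0.2, 0.9]],   # speaker 2
]
merged = merge_similar_clusters(over_split)
```

Real embeddings have hundreds of dimensions, but the logic is the same: merging two clusters is a single cheap operation, while splitting one wrongly merged cluster requires re-clustering its members.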

Finally, speaker diarization models take the utterance embeddings (produced above), and cluster them into as many clusters as there are speakers. For example, if a speaker diarization model predicts there are four speakers in an audio file, the embeddings will be forced into four groups based on the "similarity" of the embeddings.

For example, in the below image, let's assume each dot is an utterance. The utterances get clustered together based on their similarity — with the idea being that each cluster corresponds to the utterances of a unique speaker.

There are many ways to determine similarity of embeddings, and this is a core component of accurately predicting speaker labels with a speaker diarization model. After this step, you now have a transcription complete with accurate speaker labels!
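As a small illustration of why the choice of similarity measure matters, the sketch below compares two common options, cosine similarity and Euclidean distance, on made-up three-dimensional vectors. Which measure a given diarization system uses is not stated here, so treat this only as an example of how two measures can disagree.

```python
import math


def cosine_similarity(a, b):
    """Compares direction only; 1.0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def euclidean_distance(a, b):
    """Straight-line distance; sensitive to vector magnitude."""
    return math.dist(a, b)


# Made-up embeddings for illustration.
quiet = [0.2, 0.1, 0.4]
loud = [0.4, 0.2, 0.8]    # same direction as `quiet`, twice the magnitude
other = [0.4, 0.1, 0.1]   # different direction

cos_same = cosine_similarity(quiet, loud)
cos_diff = cosine_similarity(quiet, other)
dist_same = euclidean_distance(quiet, loud)
dist_diff = euclidean_distance(quiet, other)
```

Here cosine similarity rates `quiet` and `loud` as a near-perfect match, while Euclidean distance puts `other` closer to `quiet` than `loud` is. The two measures would therefore cluster these utterances differently.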

Recent research confirms that end-to-end approaches which eliminate traditional pipeline stages are on the rise, treating diarization as a unified problem. These newer architectures can better handle overlapping speech and brief utterances that previously challenged traditional systems.

Today's speaker diarization models can be used to determine multiple speakers in the same audio/video file with high accuracy. It's important to note that speaker diarization differs from speaker recognition — diarization identifies different speakers without knowing their identities, while recognition matches voices to known individuals.

Speaker diarization example process

To illustrate this process, let's consider an example:

- Audio Segmentation: An audio file containing a conversation is segmented into utterances based on pauses and punctuation.

- Feature Extraction: Each utterance is processed by an AI model to create embeddings that represent the unique vocal characteristics of each speaker.

- Clustering: The embeddings are clustered into groups based on the proximity in the embedding space. Each cluster is expected to correspond to one person.

- Speaker Attribution: The utterances within each cluster are labeled with the same speaker tags, and these tags are used to annotate the transcript.
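The final attribution step in the list above is simple enough to sketch directly. The `label_transcript` helper and the cluster IDs are hypothetical, and the second utterance is invented for the example. The sketch assumes clustering has already produced one cluster ID per utterance.

```python
from string import ascii_uppercase


def label_transcript(utterances, cluster_ids):
    """Map cluster IDs to 'Speaker A', 'Speaker B', ... in order of
    first appearance and prefix each utterance with its label.

    Supports up to 26 speakers, which is enough for a sketch.
    """
    names = {}
    lines = []
    for text, cluster_id in zip(utterances, cluster_ids):
        if cluster_id not in names:
            names[cluster_id] = f"Speaker {ascii_uppercase[len(names)]}"
        lines.append(f"{names[cluster_id]}: {text}")
    return lines


utterances = [
    "Hello my name is Bob.",
    "Hi Bob, nice to meet you.",
    "I like cats and live in New York City.",
]
cluster_ids = [7, 2, 7]  # arbitrary IDs from a clustering step
transcript = label_transcript(utterances, cluster_ids)
```

Note that the labels carry no identity: "Speaker A" only means "the first voice heard," which is exactly the difference between diarization and speaker recognition.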

Why is speaker diarization useful?

Speaker diarization transforms unstructured transcripts into labeled conversations. Without speaker labels, readers must manually assign utterances to speakers, creating cognitive overhead and processing delays.

For example, let's look at the before and after transcripts below with and without speaker diarization:

Without speaker diarization: [Example of wall of text]

With speaker diarization: [Example with speaker labels]

See how much easier the transcription is to read with speaker diarization?

Speaker diarization is also a powerful analytics tool. By identifying and labeling speakers, product teams and developers can analyze each speaker's behaviors, identify patterns/trends among individual speakers, make predictions, and more. For example:

- A call center might analyze agent/customer calls, customer requests, or complaints to identify trends that could help facilitate better communication.

- A podcast service might use speaker labels to identify the host and guest, making transcriptions more readable for end users.

- A telemedicine platform might identify doctor and patient to create an accurate transcript, attach a readable transcript to patient files, or input the transcript into an EHR system.
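One of the simplest per-speaker analytics is talk-time share, which a call center could use to see how much of a call the agent spends talking. The sketch below assumes diarization output in the form of `(speaker, start, end)` tuples. That format, the helper name, and the sample call are illustrative assumptions.

```python
from collections import defaultdict


def talk_time_share(segments):
    """Return {speaker: fraction of total talk time}.

    `segments` is an iterable of (speaker, start_sec, end_sec).
    """
    totals = defaultdict(float)
    for speaker, start, end in segments:
        totals[speaker] += end - start
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {speaker: t / grand_total for speaker, t in totals.items()}


# Made-up call for illustration.
call = [
    ("Agent", 0.0, 12.0),
    ("Customer", 12.0, 20.0),
    ("Agent", 20.0, 40.0),
]
shares = talk_time_share(call)
```

In this example the agent accounts for 80% of the talk time, the kind of pattern a coaching team might want to flag.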

Enterprises are already leveraging these capabilities to transform their operations and create powerful transcription and analysis tools.

Additional practical applications include:

- Legal compliance: Accurate identification of speakers in legal proceedings can be critical for maintaining accurate records, as speaker attribution carries legal weight and helps guarantee all parties are properly represented.

- Meeting records: In business settings, detailed speaker labels help track contributions and action items, turning transcripts into actionable documents that support accountability.

- Educational use: In educational settings, speaker-labeled transcripts can help students follow along with lectures more easily and review material more effectively.

- Research and analysis: For researchers, being able to distinguish between different speakers can provide deeper insights into conversational dynamics and interaction patterns.

Top 8 speaker diarization libraries and APIs

Choosing the right speaker diarization solution depends on your specific needs: accuracy requirements, processing speed, integration complexity, and whether you need a managed API or open-source flexibility. Let's examine each of the leading solutions in detail:

1. AssemblyAI

AssemblyAI is a leading speech recognition startup that offers highly accurate speech-to-text transcription through its latest models, including Universal-3-Pro. In addition to transcription, AssemblyAI provides a suite of Speech Understanding models for tasks like sentiment analysis, topic detection, summarization, and entity detection.

AssemblyAI's speaker diarization has seen dramatic improvements, achieving a 10.1% improvement in Diarization Error Rate (DER) and 13.2% improvement in cpWER. The latest models demonstrate 30% better performance in noisy environments and handle speaker segments as short as 250ms with 43% improved accuracy compared to previous versions. For even greater precision, AssemblyAI also offers Speaker Identification, which can replace generic labels like "Speaker A" with actual names or roles (e.g., "John Smith" or "Customer") inferred from the conversation.

Key improvements:

- Industry-leading 2.9% speaker count error rate (based on internal benchmarking)

- Enhanced handling of similar voices and short utterances

- Broad language support: 6 languages with Universal-3-Pro and 99 languages with Universal-2

- Improved timestamp accuracy through the Universal-3-Pro model

- Pricing: Tiered pricing starting at $0.15/hr for Universal-2 and $0.21/hr for Universal-3-Pro
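Since several of the figures above are quoted as Diarization Error Rate (DER), it helps to see how that metric is typically computed. The standard definition divides the total time attributed incorrectly (missed speech, false-alarm speech, and speech assigned to the wrong speaker) by the total reference speech time. The numbers below are made up, and this sketch does not reproduce any vendor's benchmarking setup.

```python
def diarization_error_rate(missed, false_alarm, confusion, total_speech):
    """Standard DER: error time divided by reference speech time.

    All arguments are durations in seconds:
      missed       - speech the system failed to detect
      false_alarm  - non-speech the system labeled as speech
      confusion    - speech attributed to the wrong speaker
      total_speech - total speech in the reference annotation
    """
    if total_speech <= 0:
        raise ValueError("total_speech must be positive")
    return (missed + false_alarm + confusion) / total_speech


# Hypothetical 10-minute recording.
der = diarization_error_rate(
    missed=12.0, false_alarm=9.0, confusion=27.0, total_speech=600.0
)
```

For this made-up recording, 48 seconds of error over 600 seconds of speech gives a DER of 8%. Lower is better, and because false alarms are counted against reference speech time, DER can exceed 100% on very poor output.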

2. Deepgram

Deepgram's diarization feature emphasizes processing speed. Their latest model shows a 53.1% improvement from their previous version and operates in a language-agnostic manner.

Key features:

- No limit on number of speakers

- Language-agnostic operation

- Focus on processing speed (10X faster per their benchmarks)

- Integrated with their Nova-2 speech recognition model

Deepgram's speed-first approach makes it suitable for applications where rapid processing is the primary requirement.

3. Speechmatics

Speechmatics claims to be 25% ahead of their closest competitor in accuracy according to their benchmarks. They offer speaker diarization through their Flow platform with both cloud and on-premise deployment options.

Key features:

- Enhanced accuracy through punctuation-based corrections

- Configurable maximum speakers (2-20)

- Support for 30+ languages

- Processing time increase of 10-50% when diarization is enabled (per their documentation)

Speechmatics provides deployment flexibility for enterprise environments that require on-premise options or have specific compliance needs.

4. Gladia

Gladia combines Whisper's transcription capabilities with PyAnnote's diarization, providing an integrated solution for developers using Whisper. Their enhanced diarization option includes additional processing for edge cases.

Key features:

- Whisper + PyAnnote integration

- Enhanced diarization mode for challenging audio

- Configurable speaker hints

- Streaming support available

This integration provides a pathway for teams already using Whisper to add speaker diarization capabilities without managing multiple services.

5. PyAnnote

PyAnnote is a widely-used open-source speaker diarization toolkit, now in version 3.1. It achieves approximately 10% DER with optimized configurations on standard benchmarks and processes with a 2.5% real-time factor on GPU.

Recent improvements include better handling of overlapping speech and enhanced speaker embeddings. PyAnnote serves as the foundation for several commercial solutions, including Gladia.

Key considerations:

- Requires training or fine-tuning for optimal performance on specific use cases

- Supports Python 3.7+ on Linux and macOS

- Requires Hugging Face authentication token for pre-trained model access

- Active research community and regular updates

PyAnnote is well-suited for research projects and teams with ML expertise who need customizable diarization solutions.
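For a sense of what working with PyAnnote looks like, here is a hedged sketch based on the usage published for the `pyannote/speaker-diarization-3.1` pretrained pipeline. The `diarize` and `format_turns` helpers are hypothetical wrappers, the token and file path are placeholders, and argument names can differ between library versions, so check the documentation for the version you install.

```python
def diarize(audio_path, hf_token, num_speakers=None):
    """Run the pretrained pipeline and return (start, end, speaker) tuples.

    Requires `pyannote.audio` and a Hugging Face access token with the
    model's user conditions accepted.
    """
    # Imported lazily so the helpers below work without pyannote installed.
    from pyannote.audio import Pipeline

    pipeline = Pipeline.from_pretrained(
        "pyannote/speaker-diarization-3.1", use_auth_token=hf_token
    )
    # Passing the speaker count is optional; omit it to let the model decide.
    kwargs = {} if num_speakers is None else {"num_speakers": num_speakers}
    diarization = pipeline(audio_path, **kwargs)
    return [
        (turn.start, turn.end, speaker)
        for turn, _, speaker in diarization.itertracks(yield_label=True)
    ]


def format_turns(turns):
    """Render (start, end, speaker) tuples as readable lines."""
    return [f"{start:.1f}s-{end:.1f}s {speaker}" for start, end, speaker in turns]


# Offline stand-in for the output of diarize("audio.wav", "HF_TOKEN").
example_turns = [(0.0, 1.5, "SPEAKER_00"), (1.8, 4.2, "SPEAKER_01")]
lines = format_turns(example_turns)
```

Note that PyAnnote returns speaker turns only. To get a labeled transcript you still need to align these turns with the output of a separate speech recognition model.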

6. NVIDIA NeMo

NVIDIA NeMo introduces Sortformer, an end-to-end diarization approach using an 18-layer Transformer architecture. This innovative design eliminates traditional pipeline stages by treating diarization as a unified problem.

Key features:

- End-to-end neural architecture

- Multi-scale diarization decoder (MSDD)

- Seamless ASR integration

- GPU-optimized processing

The system supports both oracle VAD (using ground-truth timestamps) and system VAD (using model-generated timestamps). NeMo is designed for researchers and teams building custom multi-speaker ASR systems who have access to GPU resources and ML expertise.

7. Kaldi

Kaldi is a speech recognition toolkit widely used in academic research that includes speaker diarization capabilities. It offers extensive customization options and has been the foundation for many speech processing research projects.

With Kaldi, users can either:

- Train models from scratch with full control over the pipeline

- Use pre-trained X-Vectors network or PLDA backend from the Kaldi website

Getting started with Kaldi requires understanding its unique architecture and recipe-based approach. This Kaldi tutorial provides an introduction to the framework. For speaker diarization specifically, this tutorial covers the implementation details.

Kaldi is best suited for academic research and teams with speech processing expertise who need maximum flexibility and control over their diarization pipeline.

8. SpeechBrain

SpeechBrain is a PyTorch-based toolkit offering over 200 recipes for various speech tasks, including speaker diarization. It provides both pre-trained models and training frameworks for researchers and developers.

Key features:

- Extensive recipe collection covering 20+ speech tasks

- PyTorch-based architecture for easy integration

- Modular design allowing component customization

- Active development community and regular updates

The toolkit includes features like dynamic batching, mixed-precision training, and support for single and multi-GPU training. SpeechBrain aims to bridge research and production by providing structured recipes that can be adapted for specific use cases.

It's particularly suitable for teams familiar with PyTorch who want to experiment with different diarization approaches or need to customize their pipeline for specific requirements.

Implementation best practices for speaker diarization

Moving from theory to practice requires navigating the nuances of real-world audio. While no AI model is perfect, you can significantly improve your results by following a few best practices. Companies like CallSource, Veed, and Podchaser build world-class products that depend on getting these details right.

- Optimize for your use case: The ideal setup depends on your needs. For batch processing of large audio files, you can afford more processing time to achieve the highest accuracy. For real-time applications, you'll need to balance accuracy with low latency to ensure a smooth user experience.

- Handle real-world audio: Most audio isn't recorded in a studio. Background noise, overlapping speech, and speakers with similar voices are common challenges. Always test models using a sample of your own audio data to see how they perform under the conditions you expect. Models trained on diverse, noisy datasets will consistently outperform those trained only on clean audio, as the best systems are built to handle challenging audio found in real-world environments.

- Debug common errors: When you encounter errors, check for common failure modes. Is the model misidentifying the number of speakers? Are two different speakers being merged into one label? Listen to the specific audio segments where the errors occur. This often reveals issues with audio quality or very short speaker turns that you can address.

- Think in pipelines: Speaker diarization is rarely the final step. It's a critical component in a larger speech understanding pipeline. Once you have accurate speaker labels, you can perform downstream tasks like per-speaker sentiment analysis, topic tracking, or summarization to extract deeper insights.
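The debugging advice above can be partly automated. The sketch below flags two of the failure signals mentioned: speaker turns short enough to be unreliable, and a detected speaker count that differs from what you expect. The `(speaker, start, end)` segment format, the helper names, and the one-second cutoff are assumptions for illustration.

```python
def flag_short_turns(segments, min_duration=1.0):
    """Return segments shorter than `min_duration` seconds.

    Very short turns are a common source of attribution errors, so
    they are good candidates to listen to first.
    """
    return [seg for seg in segments if seg[2] - seg[1] < min_duration]


def speaker_count_mismatch(segments, expected_speakers):
    """Return (detected, expected) if the counts differ, else None."""
    detected = len({speaker for speaker, _, _ in segments})
    if detected != expected_speakers:
        return detected, expected_speakers
    return None


# Made-up output from a two-person call.
segments = [
    ("A", 0.0, 4.2),
    ("B", 4.2, 4.6),
    ("A", 4.6, 9.0),
    ("C", 9.0, 9.3),
]
suspicious = flag_short_turns(segments)
mismatch = speaker_count_mismatch(segments, expected_speakers=2)
```

In this example the extra speaker "C" appears only in a 0.3-second turn, which suggests a phantom speaker created from a brief interjection rather than a real third participant.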

How to choose a speaker diarization solution

For production applications:

- High accuracy in noisy conditions → AssemblyAI's 30% improvement in real-world audio and 43% improvement on short segments (250ms)

- Whisper ecosystem → Gladia provides integrated Whisper + diarization

- Enterprise deployment flexibility → Speechmatics offers both cloud and on-premise options

For conversation intelligence use cases, consider your specific requirements:

- Conference room recordings: Look for solutions tested on noisy, multi-speaker environments

- Call center analytics: Accuracy on brief utterances and speaker count precision are critical

- Meeting transcription: Real-time capabilities and handling of overlapping speech matter

- Interview processing: Clear speaker separation and accurate timestamps are essential

AssemblyAI's recent improvements specifically target these real-world scenarios with documented performance gains in noisy conditions (30% improvement) and very short utterances (43% improvement at 250ms).

For research and development:

- Maximum control → NVIDIA NeMo's Sortformer architecture for custom implementations

- Established framework → PyAnnote 3.1 with pre-trained models and active community

- Academic benchmarking → Kaldi's extensive configuration options

- PyTorch ecosystem → SpeechBrain's recipe collection

Open-source vs managed API decision criteria:

- Development time: APIs offer faster implementation

- Customization needs: Open-source provides model control

- Infrastructure: Self-hosted requires GPU resources

- Maintenance overhead: APIs handle updates automatically

Limitations of speaker diarization

While speaker diarization has improved dramatically, some limitations remain. For real-time applications, diarization is best achieved by using multichannel audio, where each speaker is on a separate channel. This approach provides the clearest speaker separation for live transcription. For pre-recorded files, multichannel transcription can also be an effective alternative to diarization when separate speaker channels are available.
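When each speaker is recorded on a separate channel, building a speaker-labeled transcript reduces to transcribing each channel on its own and interleaving the results by start time. A minimal sketch, assuming each channel has already been transcribed into `(start, end, text)` tuples (the data format, helper name, and sample call are hypothetical):

```python
def merge_channels(channel_transcripts):
    """Interleave per-channel utterances into one time-ordered transcript.

    `channel_transcripts` maps a speaker name to a list of
    (start_sec, end_sec, text) tuples from that speaker's channel.
    Returns a list of (start_sec, speaker, text) sorted by start time.
    """
    merged = [
        (start, speaker, text)
        for speaker, utterances in channel_transcripts.items()
        for start, _end, text in utterances
    ]
    merged.sort(key=lambda item: item[0])
    return merged


# Made-up two-channel call.
channels = {
    "Agent": [
        (0.0, 2.0, "Thanks for calling."),
        (5.0, 7.0, "Sure, one moment."),
    ],
    "Customer": [
        (2.5, 4.5, "Hi, I need help with my order."),
    ],
}
conversation = merge_channels(channels)
```

Because the speaker is known from the channel, no clustering is involved, which is why this approach avoids the attribution errors discussed below.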

Several factors can still impact the accuracy of diarization models on single-channel audio:

- Speaker talk time

- Conversational dynamics

Historically, speaker talk time—which has the biggest impact on accuracy—directly correlated with identification accuracy, with older systems requiring at least 15-30 seconds of speech for reliable detection. However, modern models like AssemblyAI's Universal-3-Pro have significantly improved performance on short utterances. With features like prompted speaker diarization, the model can accurately attribute even single-word acknowledgments, though more audio data generally still leads to higher confidence.

Conversational dynamics also impact accuracy through specific audio characteristics:

- Turn-taking patterns: Clear speaker transitions improve labeling.

- Overlapping speech: Crosstalk can reduce accuracy and may create phantom speakers, though advanced systems are now better at detecting and labeling these overlapping segments to maintain accuracy.

- Background noise: High noise levels (low SNR) can degrade performance.

- Speech energy: Rapid interruptions and very similar voices can challenge clustering algorithms.
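To check how much crosstalk your own audio contains, you can scan diarization output for segments from different speakers that overlap in time. The sketch below is one simple way to do that, assuming `(speaker, start, end)` tuples. It is an evaluation aid, not a description of how any diarization model detects overlap internally.

```python
def find_overlaps(segments):
    """Return (speaker_a, speaker_b, overlap_start, overlap_end) for every
    pair of segments from different speakers that overlap in time."""
    ordered = sorted(segments, key=lambda seg: seg[1])
    overlaps = []
    for i, (spk_a, _start_a, end_a) in enumerate(ordered):
        for spk_b, start_b, end_b in ordered[i + 1:]:
            if start_b >= end_a:
                # Segments are sorted by start, so nothing later overlaps.
                break
            if spk_a != spk_b:
                overlaps.append((spk_a, spk_b, start_b, min(end_a, end_b)))
    return overlaps


# Made-up segments: speaker B starts talking before A has finished.
segments = [("A", 0.0, 5.0), ("B", 4.0, 6.0), ("A", 6.5, 8.0)]
overlaps = find_overlaps(segments)
```

Here the result is a single one-second overlap between A and B, from 4.0 to 5.0 seconds. Summing overlap durations across a test set gives a rough measure of how much crosstalk a candidate solution needs to handle.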

These challenging conditions—noisy environments, overlapping speech, and similar voices—are where speaker diarization accuracy varies most between different solutions. Recent advances in the field are specifically targeting these real-world challenges.

For teams building production applications, it's important to evaluate how different solutions perform on your specific audio conditions. Solutions optimized for clean audio may struggle with real-world recordings, while those designed for challenging conditions (like AssemblyAI's 30% improvement in noisy environments) can provide more consistent results across varying audio quality.

Build live transcription solutions with AssemblyAI

AssemblyAI provides production-ready speaker diarization with documented performance improvements in challenging audio conditions. By integrating our speaker diarization and other advanced Voice AI features, organizations gain:

- Proven accuracy: Industry-leading 2.9% speaker count error rate and 30% improvement in noisy conditions based on extensive testing

- Real-world performance: Specific optimizations for challenging audio environments, including 43% improvement on brief utterances (250ms)

- Simple implementation: Straightforward API integration with comprehensive documentation and SDKs

- Comprehensive features: From speaker diarization and custom vocabulary to auto punctuation and confidence scores

- Enterprise security: SOC2 Type 2 certified with enterprise-grade security practices

- Continuous improvements: Regular model updates based on customer feedback and research advances

Test our speaker diarization capabilities with your own audio files in the AssemblyAI API Playground. Sign up for a free account to get started with $50 in credits.
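As a rough idea of what the integration looks like, here is a minimal sketch using the AssemblyAI Python SDK with speaker labels enabled. The `transcribe_with_speakers` and `format_utterances` helpers are hypothetical wrappers, the API key and audio URL are placeholders you supply, and the SDK documentation remains the authoritative reference for current options.

```python
from types import SimpleNamespace


def transcribe_with_speakers(audio_url, api_key):
    """Transcribe audio with speaker labels and return the utterances.

    Requires the `assemblyai` package and a valid API key.
    """
    # Imported lazily so the formatter below works without the SDK installed.
    import assemblyai as aai

    aai.settings.api_key = api_key
    config = aai.TranscriptionConfig(speaker_labels=True)
    transcript = aai.Transcriber().transcribe(audio_url, config=config)
    return transcript.utterances


def format_utterances(utterances):
    """Render utterances as 'Speaker X: text' lines."""
    return [f"Speaker {u.speaker}: {u.text}" for u in utterances]


# Offline stand-ins for real utterance objects, for illustration only.
sample = [
    SimpleNamespace(speaker="A", text="Hello my name is Bob."),
    SimpleNamespace(speaker="B", text="Nice to meet you, Bob."),
]
lines = format_utterances(sample)
```

With a real key, passing the result of `transcribe_with_speakers(...)` to `format_utterances` would produce the same kind of labeled lines for your own audio.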

Frequently asked questions about speaker diarization

How do I handle overlapping speech?

Use multi-channel recording for optimal speaker separation, or select end-to-end models with documented crosstalk performance.

What's the minimum audio duration for a speaker to be detected?

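Older systems typically needed 15-30 seconds of total speech per speaker for reliable detection. Modern models can attribute much shorter segments, though more audio per speaker generally still leads to higher confidence.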

How can I debug common speaker diarization errors?

Check speaker count accuracy, voice similarity merging, and audio quality at error segments. Test with representative audio samples to identify model-specific failure patterns.

What is the difference between speaker diarization and speaker recognition?

Speaker diarization answers the question 'who spoke when?' by clustering voices and assigning generic labels like 'Speaker A' and 'Speaker B'. It does not know the speakers' actual identities. Speaker recognition, on the other hand, identifies a specific person by matching their voice to a pre-existing voice profile, answering the question, 'Is this person John Doe?'
