Voice AI guardrails: Built-in protection for compliance, quality, and cost control

Learn how Voice AI guardrails protect compliance, quality, and costs in production. Discover PII redaction, profanity filtering, and efficiency controls for healthcare, finance, and contact centers.

Voice AI applications handle increasingly sensitive conversations across healthcare, financial services, and customer support. But without systematic controls, these same applications expose organizations to compliance violations, quality degradation, and unnecessary processing costs.

Voice AI guardrails solve this problem by embedding protection directly into your transcription pipeline. Rather than bolting on external filters or manual review processes, guardrails apply automated controls at the API level, blocking sensitive data exposure, filtering unwanted content, and preventing waste before it reaches your systems.

This article covers three categories of voice AI guardrails: safety controls that prevent compliance violations, quality filters that maintain content standards, and efficiency measures that reduce unnecessary processing costs.

Why transcription without protection fails

Organizations deploying voice AI without systematic guardrails face three distinct problems: regulatory exposure from unprotected sensitive data, quality degradation from unfiltered content, and operational waste from processing everything indiscriminately.

Safety risks without systematic controls

Healthcare applications that process patient conversations without PII protection risk a HIPAA violation with every unredacted transcript. Financial services applications that capture credit card numbers in call recordings store cardholder data that falls under PCI DSS and can trigger penalties.

The 2024 HIPAA Enforcement Report shows violations from improper PHI disclosure continue to drive major penalties. Organizations that process protected health information through voice AI systems without built-in redaction mechanisms carry this risk across every API call.

Beyond healthcare and finance, any application processing voice data containing names, addresses, social security numbers, or phone numbers without systematic removal creates liability. The default state of unprotected transcription is non-compliance.

Quality problems from unfiltered content

Training data quality determines AI model performance. When profanity, irrelevant audio, or low-quality recordings enter your training pipeline without filtering, model accuracy degrades systematically.

Contact centers building quality assurance systems need clean transcripts for coaching and evaluation. Customer support teams analyzing sentiment require profanity-filtered text for accurate assessment. Meeting intelligence platforms depend on relevant content extraction, not transcripts padded with hold music and silence.

Without quality guardrails, your voice AI applications process and store everything, and then pass degraded data to downstream systems that depend on clean inputs.

Operational waste draining resources

Transcription costs scale with audio volume. Processing files that contain mostly silence, hold music, or irrelevant content drains budgets without providing value.

Without controls to limit transcription scope, you process, and pay for, content you'll never use. Call centers transcribe hold music. Meeting platforms process pre-call silence. Healthcare applications transcribe ambient noise between patient interactions.

The Voice AI Market Report 2024 projects that the conversational AI market will reach $32.62 billion by 2030, a compound annual growth rate of 26.7%. As voice AI adoption accelerates, processing efficiency becomes a competitive advantage, not just a cost consideration.

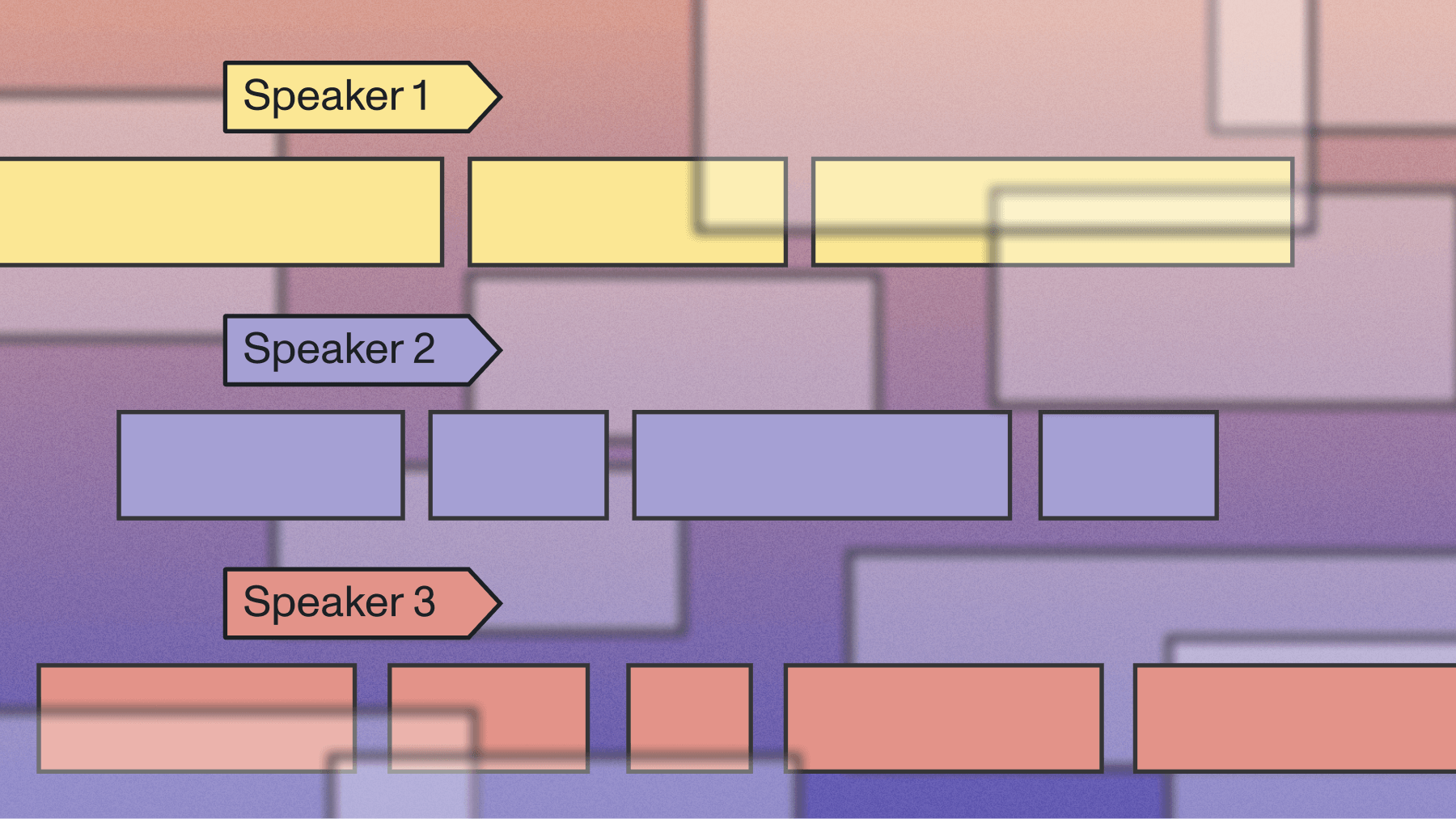

Introducing guardrails

Guardrails provide multi-stage protection across your voice AI pipeline, from input validation through output filtering.

Unlike post-processing approaches that filter transcripts after generation, guardrails apply controls during transcription. This prevents sensitive data from ever entering your systems, blocks unwanted content before storage, and stops unnecessary processing before costs accumulate.

AssemblyAI implements three categories of guardrails: safety controls, quality filters, and efficiency measures. Each category addresses specific protection requirements while maintaining a single API integration.

Safety guardrails

Safety guardrails prevent compliance violations by automatically removing or blocking sensitive information before it reaches your systems.

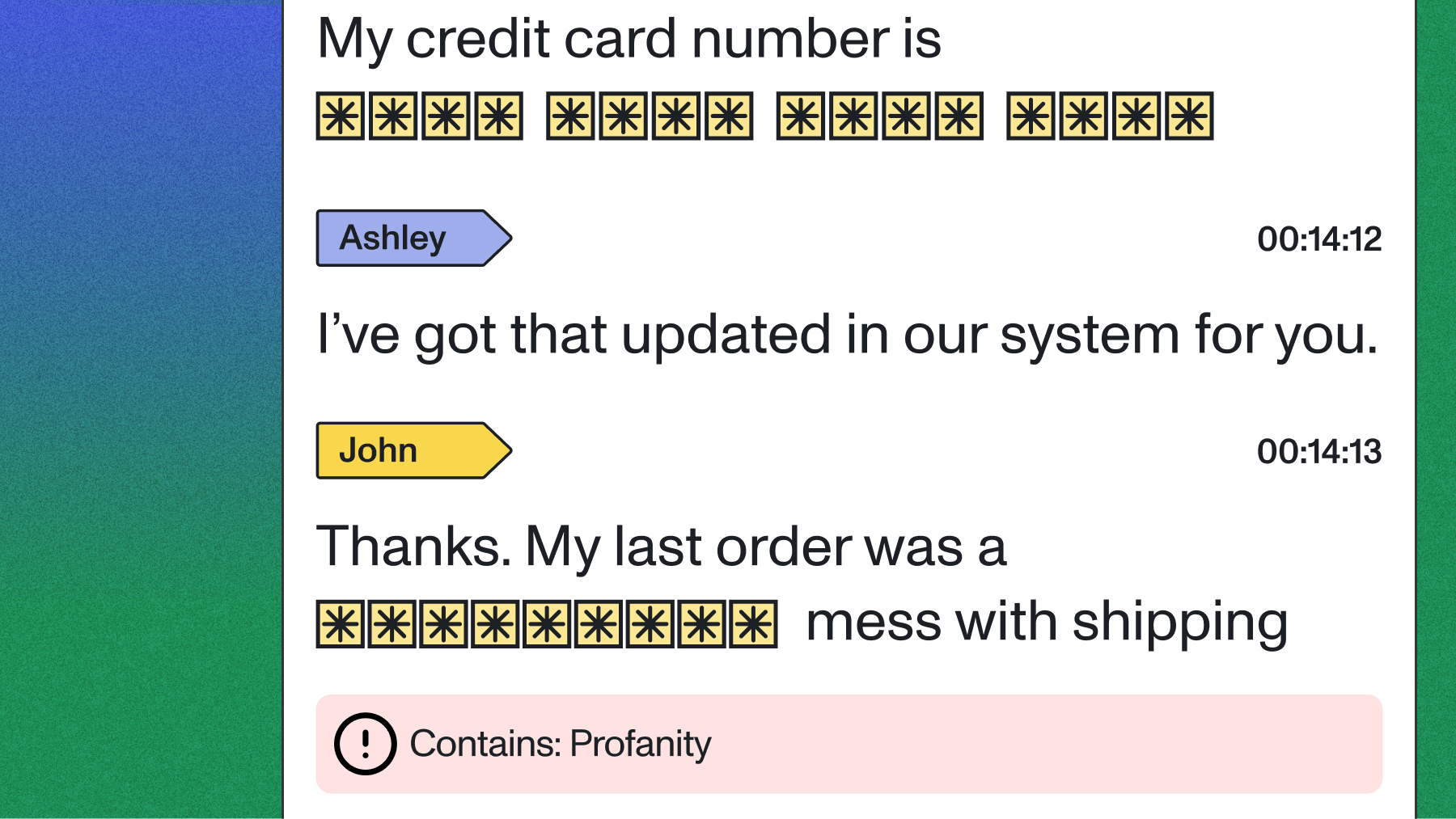

PII redaction for compliance-critical applications

Healthcare, finance, and legal applications require systematic removal of personally identifiable information. Patient names, account numbers, Social Security numbers, and addresses create compliance liability when stored in transcripts or passed to downstream AI models.

PII Redaction removes personal information from both transcript text and audio outputs across dozens of languages. The system detects and redacts:

- Person names and ages

- Phone numbers and email addresses

- Social Security numbers and credit card numbers

- Physical addresses and locations

- Medical record numbers and patient identifiers

- Bank account and routing numbers

Healthcare platforms use PII Redaction to process clinical conversations while maintaining HIPAA compliance. Financial services applications remove cardholder data before storing call transcripts. Legal tech platforms redact client information from recorded consultations.

Implementation requires a single API parameter. When enabled, PII Redaction processes transcripts during generation, with no post-processing filters or manual review required.

The redaction system supports entity-level filtering through the redact_pii_policies parameter. Rather than removing all PII categories, you can specify which entities require redaction based on your compliance requirements. A healthcare application might redact patient names and medical record numbers while preserving location data needed for demographic analysis.
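As a rough illustration of entity-level filtering, the sketch below builds a request body that redacts only a chosen set of entities. The redact_pii, redact_pii_policies, and redact_pii_sub fields and the person_name policy appear in the request shown later in this article. The helper name buildRedactionRequest, the healthcare_number identifier, and the example URL are assumptions for illustration, so check them against the current API reference before relying on them.

```javascript
// Hypothetical helper: builds a request body that redacts only the listed entities.
function buildRedactionRequest(audioUrl, policies) {
  if (!Array.isArray(policies) || policies.length === 0) {
    throw new Error("Provide at least one PII policy to redact");
  }
  return {
    audio_url: audioUrl,
    redact_pii: true,
    redact_pii_policies: [...new Set(policies)], // drop duplicate policy names
    redact_pii_sub: "hash",
  };
}

// Redact patient names and record numbers; location is left out on purpose
// so it stays in the transcript. "healthcare_number" is an assumed identifier.
const healthcareRequest = buildRedactionRequest(
  "https://example.com/visit.mp3", // placeholder URL
  ["person_name", "healthcare_number"]
);
console.log(healthcareRequest.redact_pii_policies);
```

Leaving a policy out of the array is what preserves that entity type, so the list doubles as a record of what your compliance review decided to remove.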

Audio redaction: Remove sensitive content from source files

Text redaction protects transcripts, but the original audio files still contain sensitive information. Organizations that store, share, or analyze audio recordings need content removal at the source level.

Audio Redaction generates new audio files with sensitive content replaced by silence. This enables compliant audio storage, safe file sharing with third parties, and protected training data for speech models.

Contact centers use Audio Redaction to share customer calls with quality assurance teams without exposing payment information. Healthcare platforms create anonymized audio datasets for clinical research. Training platforms distribute coaching materials without revealing customer identities.

The system applies the same PII detection used in text redaction, but operates on the audio timeline. When the transcription system identifies a credit card number spoken between 1:34 and 1:42 in a recording, Audio Redaction mutes that specific segment while preserving the surrounding conversation.
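To make the timeline idea concrete, here is a minimal sketch of muting detected spans in a buffer of audio samples. It is not how the service implements Audio Redaction. The muteSegments function, the segment shape, and the placeholder audio are all assumptions chosen to show how a time range maps to sample indices.

```javascript
// Illustrative only: zero out detected PII spans in a mono PCM buffer.
function muteSegments(samples, sampleRate, segments) {
  const redacted = Float32Array.from(samples); // copy so the source stays intact
  for (const { startSec, endSec } of segments) {
    const start = Math.max(0, Math.floor(startSec * sampleRate));
    const end = Math.min(redacted.length, Math.ceil(endSec * sampleRate));
    if (end > start) redacted.fill(0, start, end);
  }
  return redacted;
}

// A card number spoken between 1:34 and 1:42 becomes the span 94 s to 102 s.
const sampleRate = 8000;
const audio = new Float32Array(sampleRate * 120).fill(0.5); // 2 minutes of placeholder audio
const cleaned = muteSegments(audio, sampleRate, [{ startSec: 94, endSec: 102 }]);
console.log(cleaned[94 * sampleRate], cleaned[93 * sampleRate]); // prints 0 0.5
```

Only the samples inside the detected span change, which is why the surrounding conversation stays audible.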

Quality guardrails

Quality guardrails filter unwanted content and maintain transcript standards across your voice AI pipeline.

Profanity filtering for quality assurance

Training data quality determines model performance. Profanity in transcripts degrades sentiment analysis accuracy, creates inappropriate training examples, and compromises customer-facing AI applications.

Profanity filtering detects and removes offensive language from transcripts. The filter operates during transcription, blocking profanity before it enters your training pipeline or appears in customer-facing applications.

Contact centers use profanity filtering to generate clean transcripts for quality assurance reviews. Customer support platforms apply filtering before running sentiment analysis. Meeting intelligence applications remove offensive language from searchable transcripts.

The system supports language-specific profanity detection across multiple languages and provides filtering options from light to aggressive based on your application requirements.

Content moderation for safe AI applications

Beyond profanity, voice content may contain hate speech, threats, or other harmful material that requires systematic detection and filtering. Applications processing user-generated content need automated content moderation to prevent policy violations.

Content Moderation analyzes transcripts for sensitive content categories including violence, hate speech, harassment, and adult content. The system generates severity scores for each category, enabling automated filtering or flagging based on your content policies.
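The sketch below shows one way severity scores could drive automated filtering or flagging. It assumes each moderation result carries a label and a severity between 0 and 1. The exact response shape, the category identifiers, and the threshold values are assumptions for illustration rather than the documented API.

```javascript
// Hypothetical policy: per-category severity limits (0 to 1) chosen by the application.
const policy = {
  hate_speech: { flag: 0.2, block: 0.6 },
  violence: { flag: 0.4, block: 0.8 },
};

// Returns "block", "flag", or "allow" for a list of { label, severity } results.
function triage(results, limits) {
  let decision = "allow";
  for (const { label, severity } of results) {
    const limit = limits[label];
    if (!limit) continue; // categories without a policy are ignored
    if (severity >= limit.block) return "block";
    if (severity >= limit.flag) decision = "flag";
  }
  return decision;
}

console.log(triage([{ label: "violence", severity: 0.5 }], policy)); // prints flag
```

Keeping the limits in a plain object means moderation teams can tune them per category without touching the triage logic.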

Social platforms use Content Moderation to identify policy violations in voice messages. Community applications filter harmful content from voice chat. Moderation teams prioritize review queues based on automated severity scoring.

Efficiency guardrails

Efficiency guardrails reduce unnecessary processing costs by filtering low-value audio before transcription, and reduce downstream rework by prioritizing the vocabulary that matters to your application.

Speech threshold: Stop paying for silence

Call recordings often contain extended hold periods, pre-call silence, or post-conversation dead air. Transcribing files with minimal speech content wastes processing resources and inflates costs.

Speech Threshold solves this by transcribing only files that meet your minimum speech percentage requirement. Files that fall below your threshold are rejected before transcription, preventing unnecessary API charges.

A contact center might set a 30% speech threshold to skip recordings that contain mostly hold music. A meeting platform could require 50% speech content to filter out recordings where participants never joined. Healthcare applications might use a 20% threshold to exclude ambient recordings between patient visits.

The system calculates speech percentage during initial audio analysis, then applies your threshold before starting transcription. This prevents processing costs for low-value files while maintaining accuracy for content-rich recordings.
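The threshold check can be sketched as simple arithmetic over speech segments. This is not the service's internal analysis. The functions below and the segment format are hypothetical, and they assume a voice-activity detector has already produced non-overlapping speech spans. In the API itself the threshold is expected to be passed as a fraction between 0 and 1 (for example 0.3 for 30%) through a speech_threshold parameter, which is also an assumption to confirm in the API reference.

```javascript
// Fraction of the recording that contains speech, given non-overlapping
// { startSec, endSec } speech segments from a voice-activity detector.
function speechFraction(segments, totalSec) {
  if (totalSec <= 0) return 0;
  const spoken = segments.reduce(
    (sum, s) => sum + Math.max(0, s.endSec - s.startSec),
    0
  );
  return Math.min(1, spoken / totalSec);
}

// Hypothetical gate: transcribe only when the fraction meets the threshold.
function shouldTranscribe(segments, totalSec, threshold) {
  return speechFraction(segments, totalSec) >= threshold;
}

// A 10-minute call with 2 minutes of speech is 20% speech.
const call = [
  { startSec: 30, endSec: 90 },
  { startSec: 400, endSec: 460 },
];
console.log(shouldTranscribe(call, 600, 0.3)); // prints false
console.log(shouldTranscribe(call, 600, 0.2)); // prints true
```

The same recording passes a 20% threshold and fails a 30% one, which is the trade-off each team tunes against its own mix of hold time and conversation.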

Word boost: Prioritize accuracy for domain-specific terminology

General-purpose speech recognition models struggle with specialized vocabulary. Medical terminology, brand names, technical jargon, and proper nouns frequently appear as transcription errors, creating downstream problems for search, analytics, and AI applications.

Word Boost increases transcription accuracy for domain-specific terms by allowing you to provide a custom vocabulary list. The system prioritizes these terms during transcription, improving recognition accuracy for the vocabulary that matters most to your application.

Healthcare platforms use Word Boost for medical terminology, drug names, and procedure codes. Legal applications boost case names, statute references, and legal terms of art. Contact centers boost product names, competitor brands, and industry-specific vocabulary.

The system accepts up to 200 custom terms per request and supports phonetic hints for ambiguous pronunciations. Rather than retraining or fine-tuning a model, you specify important terms through a simple API parameter.
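A vocabulary list usually needs light cleanup before it is sent. The sketch below trims, de-duplicates, and caps a term list at the 200-term limit stated above. The buildWordBoost helper and the example URL are hypothetical, and the word_boost field name is an assumption to verify against the API reference.

```javascript
const MAX_TERMS = 200; // limit stated in this article

// Hypothetical helper: trims, de-duplicates (case-insensitively), and caps a vocabulary list.
function buildWordBoost(terms, maxTerms = MAX_TERMS) {
  const seen = new Set();
  const boosted = [];
  for (const raw of terms) {
    if (boosted.length >= maxTerms) break; // stop once the cap is reached
    const term = String(raw).trim();
    const key = term.toLowerCase();
    if (!term || seen.has(key)) continue; // skip blanks and repeats
    seen.add(key);
    boosted.push(term);
  }
  return boosted;
}

const request = {
  audio_url: "https://example.com/consult.mp3", // placeholder URL
  word_boost: buildWordBoost(["metformin", "Metformin ", "atorvastatin", ""]),
};
console.log(request.word_boost); // prints [ 'metformin', 'atorvastatin' ]
```

Because the list is capped by position, put the terms that matter most first so they survive the cut.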

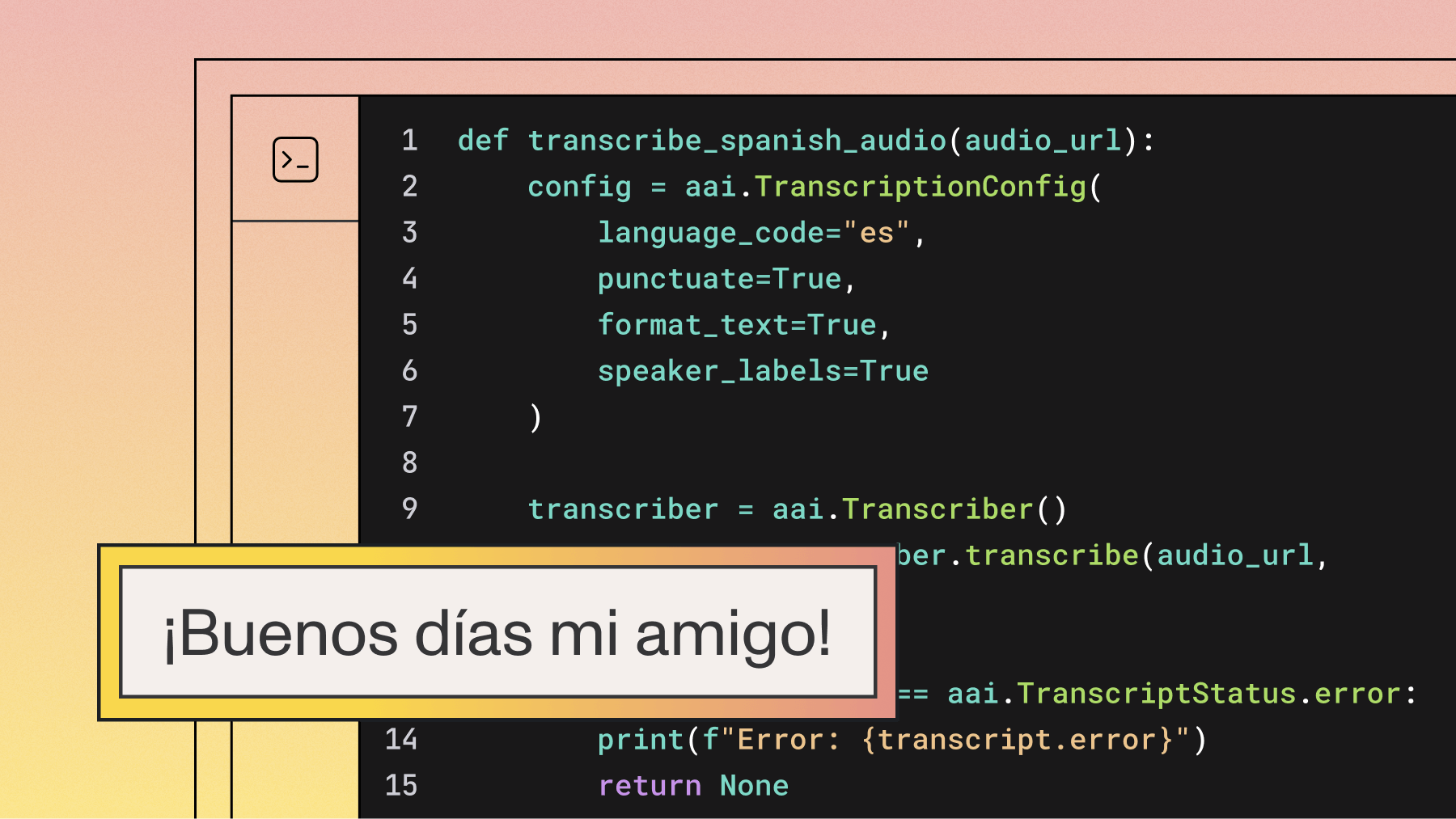

Implementing guardrails in production

Voice AI guardrails integrate directly into your existing AssemblyAI implementation through API parameters. No separate authentication, additional endpoints, or post-processing pipelines are required.

Adding PII redaction

Enable PII redaction by including the redact_pii parameter in your transcription request:

```javascript
import axios from "axios";
import fs from "fs-extra";

const baseUrl = "https://api.assemblyai.com";
const headers = {
  authorization: "<YOUR_API_KEY>",
};

// Upload the local audio file and get back a URL the API can read.
const path = "./my-audio.mp3";
const audioData = await fs.readFile(path);
const uploadResponse = await axios.post(`${baseUrl}/v2/upload`, audioData, {
  headers,
});
const uploadUrl = uploadResponse.data.upload_url;

// Request a transcript with PII redaction enabled.
const data = {
  audio_url: uploadUrl, // You can also use a URL to an audio or video file on the web
  redact_pii: true,
  redact_pii_policies: ["person_name", "organization", "occupation"],
  redact_pii_sub: "hash",
};

const url = `${baseUrl}/v2/transcript`;
const response = await axios.post(url, data, { headers: headers });

const transcriptId = response.data.id;
console.log("Transcript ID: ", transcriptId);

// Poll every 3 seconds until the transcript completes or fails.
const pollingEndpoint = `${baseUrl}/v2/transcript/${transcriptId}`;

while (true) {
  const pollingResponse = await axios.get(pollingEndpoint, {
    headers: headers,
  });
  const transcriptionResult = pollingResponse.data;

  if (transcriptionResult.status === "completed") {
    console.log(transcriptionResult.text);
    break;
  } else if (transcriptionResult.status === "error") {
    throw new Error(`Transcription failed: ${transcriptionResult.error}`);
  } else {
    await new Promise((resolve) => setTimeout(resolve, 3000));
  }
}
```

The redact_pii_policies parameter accepts an array of entity types to redact. Available policies include person names, phone numbers, email addresses, social security numbers, credit card numbers, dates of birth, medical conditions, and more.
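The polling loop in the request above runs until the transcript completes or errors, so a stalled job would keep it running indefinitely. A bounded variant might look like the sketch below. The pollTranscript function, its option names, and the attempt limit are assumptions for illustration. The status values and the 3-second interval mirror the example above.

```javascript
// Polls getStatus() until it reports "completed" or "error", or gives up.
// getStatus is injected so the loop can be exercised without network access.
async function pollTranscript(
  getStatus,
  { intervalMs = 3000, maxAttempts = 100 } = {}
) {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const result = await getStatus();
    if (result.status === "completed") return result;
    if (result.status === "error") {
      throw new Error(`Transcription failed: ${result.error}`);
    }
    // Still queued or processing: wait before asking again.
    await new Promise((resolve) => setTimeout(resolve, intervalMs));
  }
  throw new Error(`Transcript not ready after ${maxAttempts} attempts`);
}
```

With the axios setup from the example, getStatus could be a function such as () => axios.get(pollingEndpoint, { headers }).then((r) => r.data). Injecting it also makes the loop straightforward to test with a fake status source.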

Performance and latency considerations

Guardrails apply during transcription without adding separate processing steps. PII redaction and profanity filtering add negligible latency compared to baseline transcription times. Speech threshold actually reduces processing time by rejecting low-value files before transcription starts.

Audio redaction requires additional processing to generate modified audio files, adding approximately 10-15% to total processing time. This overhead is often acceptable given the compliance benefits of source-level content removal.

Getting started with guardrails

Voice AI applications handle increasingly sensitive and regulated content across healthcare, finance, emergency services, and beyond. Guardrails provide systematic protection without requiring separate security tools, manual review processes, or post-processing pipelines. Start with the protections your application needs most, then expand as your requirements evolve.
