Speech Understanding

Transform raw transcripts into structured, actionable data. These pre-built, LLM-powered features turn transcripts into intelligence instantly.

Feature-rich AI models

Leverage our AI-powered Translation models to translate transcripts in your products automatically and at scale, with support for over 99 languages.

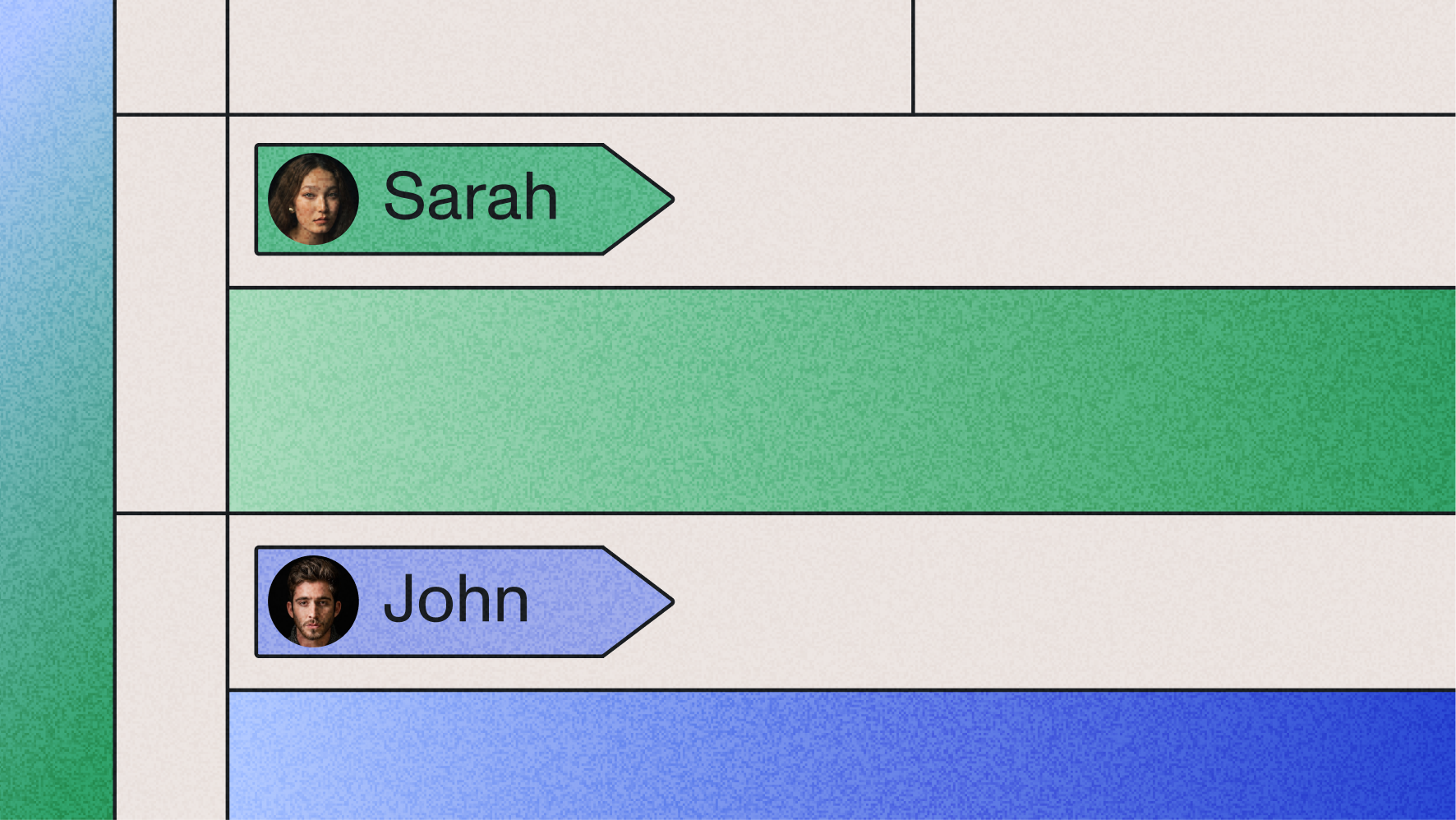

Go beyond "Speaker A" and "Speaker B" by leveraging our Advanced Speaker Identification, labeling speakers by name through audio context.


Automatically detect and normalize key text elements in transcripts — including dates, phone numbers, and email addresses — to standardized, machine-readable formats.

Leverage our AI-powered Summarization models to automatically summarize audio/video data in your products at scale. Customize the summary types to best fit your use case.

With Sentiment Analysis, AssemblyAI can detect the sentiment of each sentence spoken in your audio files.

Identify a wide range of entities that are spoken in your audio files, such as person and company names, email addresses, dates, and locations.

Label the topics that are spoken in your audio and video files. The predicted topic labels follow the standardized IAB Taxonomy, which makes them suitable for contextual targeting.

Automatically generate a summary over time for audio and video files.

Accurately identify significant words and phrases, enabling you to extract the most pertinent concepts or highlights from your audio/video file.

We're focused on delivering incredible products that meet the specific needs of our customers. To do that effectively and efficiently, it makes sense for us to partner with experts in AI versus building something from the ground up—which can feel like a space race and act as a barrier to bringing valuable solutions to market in a timely manner.

Frequently Asked Questions

Speech Understanding applies LLM‑powered tasks to completed transcripts via the LLM Gateway. You send a transcript_id and a speech_understanding.request (e.g., translation, speaker_identification, custom formatting). The service returns the original transcript augmented with structured outputs, like translated texts and updated utterance speaker labels, so raw text becomes machine‑readable, actionable fields for downstream workflows.
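As a rough illustration of the request shape described above, the sketch below wraps a single task in a `transcript_id` plus `speech_understanding.request` body. The helper name `build_understanding_payload` is hypothetical, and the `target_languages` option is an assumption about the translation task rather than something stated here; check the API reference for the exact fields.

```python
import json


def build_understanding_payload(transcript_id: str, task: str, options: dict) -> dict:
    """Wrap one Speech Understanding task in the body shape described above."""
    if not transcript_id:
        raise ValueError("transcript_id is required")
    return {
        "transcript_id": transcript_id,
        # The task name (e.g. "translation") becomes a key under "request".
        "speech_understanding": {"request": {task: options}},
    }


payload = build_understanding_payload(
    "YOUR_TRANSCRIPT_ID",
    "translation",
    {"target_languages": ["es", "de"]},  # assumed option name, for illustration
)
print(json.dumps(payload, indent=2))
```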

AssemblyAI first diarizes audio into speaker clusters using embeddings. Then Advanced Speaker Identification maps these clusters to real names or roles via the Speech Understanding API, using audio context and optional known_values you provide. Enable speaker_labels and request speaker_identification to return utterances labeled by name.
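A minimal sketch of such a request body might look like the following. It enables `speaker_labels` and passes optional `known_values`, as described above. The `audio_url` and `speaker_type` fields and the helper name are assumptions made for illustration, not confirmed by this page.

```python
def build_speaker_id_request(audio_url, known_values=None, speaker_type="name"):
    """Build a transcription request body that asks for named speaker labels."""
    identification = {"speaker_type": speaker_type}  # assumed field name
    if known_values:
        # Optional hints: the names or roles you expect to hear in the audio.
        identification["known_values"] = list(known_values)
    return {
        "audio_url": audio_url,  # assumed field name for the audio source
        "speaker_labels": True,  # turns on diarization, per the text above
        "speech_understanding": {
            "request": {"speaker_identification": identification}
        },
    }


request_body = build_speaker_id_request(
    "https://example.com/interview.mp3",
    known_values=["Alice Example", "Bob Example"],
)
print(request_body["speech_understanding"]["request"])
```

Omitting `known_values` leaves the service to infer names from audio context alone.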

Yes. AssemblyAI lets you choose summary types and styles. Set summary_type to bullets, bullets_verbose, gist, headline, or paragraph, and pair it with a summary_model (informative, conversational, or catchy). If you specify one, you must specify the other. For fully custom formats, use LeMUR via LLM Gateway.
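The pairing rule above (set both `summary_type` and `summary_model`, or neither) is easy to check on the client before sending a request. The sketch below uses the values listed in this answer; the `summarization` flag and the helper name are assumptions for illustration.

```python
SUMMARY_TYPES = {"bullets", "bullets_verbose", "gist", "headline", "paragraph"}
SUMMARY_MODELS = {"informative", "conversational", "catchy"}


def summarization_params(summary_type=None, summary_model=None) -> dict:
    """Return summarization fields, enforcing that type and model come as a pair."""
    if (summary_type is None) != (summary_model is None):
        raise ValueError("summary_type and summary_model must be set together")
    if summary_type is None:
        return {}  # nothing requested; let the service use its defaults
    if summary_type not in SUMMARY_TYPES:
        raise ValueError(f"unknown summary_type: {summary_type!r}")
    if summary_model not in SUMMARY_MODELS:
        raise ValueError(f"unknown summary_model: {summary_model!r}")
    return {
        "summarization": True,  # assumed flag that switches the feature on
        "summary_type": summary_type,
        "summary_model": summary_model,
    }


params = summarization_params("bullets", "informative")
print(params)
```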

Get an AssemblyAI API key. Create a transcript (POST /v2/transcript). Then add Speech Understanding in one of two ways: 1) inline: include speech_understanding.request (e.g., translation) in the transcription request, or 2) afterward: POST to https://llm-gateway.assemblyai.com/v1/understanding with transcript_id and your speech_understanding.request. Tasks include translation, speaker identification, and custom formatting.
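The two integration paths can be sketched with the Python standard library. The code below only builds the requests and does not send them. The gateway URL and the `/v2/transcript` path come from the answer above; the `api.assemblyai.com` host, the `audio_url` field, the `Authorization` header format, and the `target_languages` option are assumptions to verify against the API reference.

```python
import json
import urllib.request

# Host, "audio_url", and the Authorization header format are assumptions.
TRANSCRIPT_URL = "https://api.assemblyai.com/v2/transcript"
UNDERSTANDING_URL = "https://llm-gateway.assemblyai.com/v1/understanding"


def build_request(api_key, task_request, *, audio_url=None, transcript_id=None):
    """Return an unsent POST request for the inline or the after-the-fact path."""
    if (audio_url is None) == (transcript_id is None):
        raise ValueError("pass exactly one of audio_url or transcript_id")
    body = {"speech_understanding": {"request": task_request}}
    if audio_url is not None:
        url = TRANSCRIPT_URL  # path 1: inline with transcription
        body["audio_url"] = audio_url
    else:
        url = UNDERSTANDING_URL  # path 2: on a completed transcript
        body["transcript_id"] = transcript_id
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": api_key, "Content-Type": "application/json"},
        method="POST",
    )


task = {"translation": {"target_languages": ["fr"]}}  # option name is assumed
inline_req = build_request("YOUR_API_KEY", task, audio_url="https://example.com/call.mp3")
after_req = build_request("YOUR_API_KEY", task, transcript_id="YOUR_TRANSCRIPT_ID")
print(inline_req.get_method(), inline_req.full_url)
print(after_req.get_method(), after_req.full_url)
```

Passing either request to `urllib.request.urlopen` would send it; that call is left out so the sketch runs without network access or a real key.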

Speech Understanding is billed by audio duration and the models you enable. Pay‑as‑you‑go per hour: Speaker Identification $0.02, Translation $0.06, Custom Formatting $0.03, Entity Detection $0.08, Sentiment Analysis $0.02, Auto Chapters $0.08, Key Phrases $0.01, Topic Detection $0.15, Summarization $0.03.
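For a back-of-the-envelope estimate, the hourly rates above can be multiplied by audio duration. This sketch assumes the rates simply add up per enabled feature and scale linearly with duration; it covers only the add-on rates listed here, not any base transcription charge. The dictionary keys and the function name are hypothetical labels, not API identifiers.

```python
from decimal import Decimal

# Pay-as-you-go rates in USD per audio hour, copied from the list above.
RATES_PER_HOUR = {
    "speaker_identification": Decimal("0.02"),
    "translation": Decimal("0.06"),
    "custom_formatting": Decimal("0.03"),
    "entity_detection": Decimal("0.08"),
    "sentiment_analysis": Decimal("0.02"),
    "auto_chapters": Decimal("0.08"),
    "key_phrases": Decimal("0.01"),
    "topic_detection": Decimal("0.15"),
    "summarization": Decimal("0.03"),
}


def estimate_cost(audio_seconds: int, features) -> Decimal:
    """Sum the hourly rates of the enabled features and scale by audio duration."""
    hours = Decimal(audio_seconds) / Decimal(3600)
    hourly_total = sum((RATES_PER_HOUR[f] for f in features), Decimal("0"))
    return (hourly_total * hours).quantize(Decimal("0.0001"))


# 90 minutes of audio with translation and sentiment analysis enabled:
# (0.06 + 0.02) * 1.5 hours = 0.12 USD
print(estimate_cost(5400, ["translation", "sentiment_analysis"]))
```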

Speech Understanding runs on the transcript produced by the speech-to-text model you choose. For pre-recorded audio, it works with Universal (default) or Slam‑1. You can specify the model in your request, and pricing follows the model used.

Unlock the value of voice data

Build what’s next on the platform powering thousands of the industry’s leading Voice AI apps.