Top APIs and models for real-time speech recognition and transcription in 2026


Compare the best real-time speech recognition APIs and models for 2026. As technical guides explain, this technology transforms live conversations into immediate, searchable text, making it critical to evaluate latency, accuracy, and integration complexity across cloud APIs and open-source solutions.

Real-time speech recognition APIs convert spoken audio to text with sub-second latency through streaming WebSocket connections. Developers choose between cloud APIs offering 300-500ms response times and open-source models requiring significant engineering overhead.

The core technical challenge involves managing persistent connections, audio buffering, and endpointing decisions. According to a survey of tech leaders, evaluation criteria include cost (64%), performance (58%), and accuracy (47%).

This guide examines the technical foundations of real-time speech recognition, analyzes the leading cloud APIs and open-source models available in 2026, and provides frameworks for evaluating solutions based on latency, accuracy, language support, and implementation complexity. We'll explore how these technologies work, where they excel, and which approach makes sense for different applications.

What is real-time speech recognition?

Real-time speech recognition converts live audio streams to text as they're captured, typically through a persistent WebSocket connection, with latency under 1 second. Unlike batch processing, which requires a complete audio file, streaming recognition processes audio chunks as they arrive.

Key differences from traditional speech recognition:

- Batch processing: Requires complete audio file before transcription begins

- Real-time processing: Transcribes speech as it's generated through streaming connections

- Latency: Sub-second response times versus minutes for batch processing

- Use cases: Powers live conversations, voice agents, and interactive applications

The distinction is critical for developers building interactive applications. For an AI voice agent to hold a natural conversation, it can't wait for the user to finish speaking, process the entire recording, and then respond. Real-time speech recognition enables the fluid, back-and-forth dialogue that users expect from modern voice experiences.

How real-time speech recognition works

Real-time speech recognition uses a persistent WebSocket connection between your application and the transcription server.

Your application streams audio chunks over this connection as they're captured, and the server returns a continuous stream of Turn Events. This architecture uses immutable transcription: once a word is transcribed, it will not change. Older systems instead used mutable partials, which could be revised as more audio arrived. Each turn produces two kinds of results:

- Interim results: As the user speaks, new words are added to the transcript within the current turn. The transcript grows but existing words are not changed.

- Final turns: When the model detects a natural pause, it signals the end of the turn. If formatting is enabled, a final, formatted version of the turn's transcript is also sent.

This architecture lets developers display text to users almost instantly while ensuring the final transcript is accurate. Efficient endpointing (detecting natural pauses in speech) minimizes perceived latency, because it determines how quickly the system can finalize an utterance and trigger a response.
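The turn-based flow described above can be sketched as a small message handler. This is a minimal sketch, assuming JSON messages shaped like `{"type": "Turn", "transcript": ..., "end_of_turn": ..., "turn_is_formatted": ...}`. Those field names are assumptions modeled on the description above, so check your provider's message reference before relying on them. Because words are immutable within a turn, the handler can simply replace the displayed text with each new message instead of diffing partial results.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class TurnTracker:
    """Collects streaming Turn messages into completed turns.

    Assumed (hypothetical) message shape:
    {"type": "Turn", "transcript": str, "end_of_turn": bool, "turn_is_formatted": bool}
    """

    wait_for_formatting: bool = True  # commit only the formatted final when formatting is on
    live_text: str = ""               # text of the turn still in progress
    final_turns: list[str] = field(default_factory=list)

    def handle(self, message: dict) -> str | None:
        """Process one message and return the completed turn text, if any."""
        if message.get("type") != "Turn":
            return None  # ignore session and termination messages in this sketch
        # Words are immutable, so the latest transcript supersedes the previous one.
        self.live_text = message.get("transcript", "")
        done = message.get("end_of_turn", False)
        if self.wait_for_formatting:
            done = done and message.get("turn_is_formatted", False)
        if not done:
            return None
        self.final_turns.append(self.live_text)
        self.live_text = ""
        return self.final_turns[-1]


# Simulated messages for a single turn with formatting enabled.
messages = [
    {"type": "Turn", "transcript": "hello", "end_of_turn": False, "turn_is_formatted": False},
    {"type": "Turn", "transcript": "hello there", "end_of_turn": False, "turn_is_formatted": False},
    {"type": "Turn", "transcript": "hello there", "end_of_turn": True, "turn_is_formatted": False},
    {"type": "Turn", "transcript": "Hello there.", "end_of_turn": True, "turn_is_formatted": True},
]

tracker = TurnTracker()
for msg in messages:
    finished = tracker.handle(msg)
    if finished is not None:
        print(finished)  # prints "Hello there."
```

Interim text (`live_text`) can be rendered immediately, while `final_turns` is what you would hand to downstream logic such as an LLM.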

Real-world applications and use cases

Real-time speech recognition powers applications where immediate feedback determines user experience:

AI Voice Agents

Natural conversation flow requires sub-500ms latency: performance requirements specify that the delay must feel instantaneous, typically under 500 milliseconds for the first partial results. Companies like CallSource and Bland AI rely on this speed for seamless customer interactions.

Live Captioning

Captions must appear in sync with speakers. While 1-3 second delays are acceptable, lower latency improves accessibility in meetings and broadcasts.

Voice Commands

Interactive control systems need quick responses. Delays over one second make features feel slow and unresponsive.

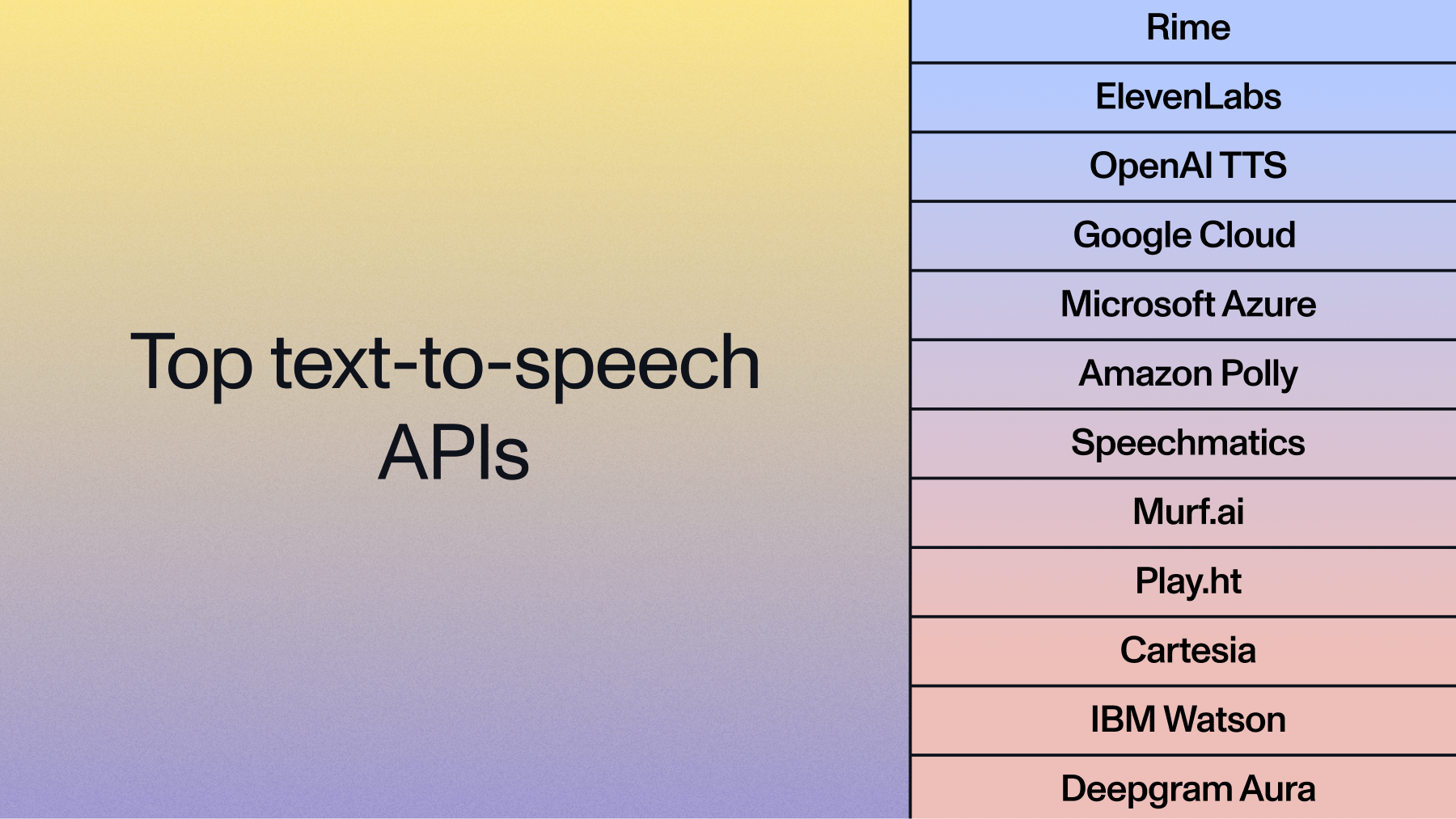

Quick comparison: top real-time speech recognition solutions

Before diving into detailed analysis, here's how the leading solutions stack up across key performance criteria:

Key criteria for selecting speech recognition APIs and models

Choosing the right solution depends on your specific application requirements. Here's what actually matters when evaluating these services:

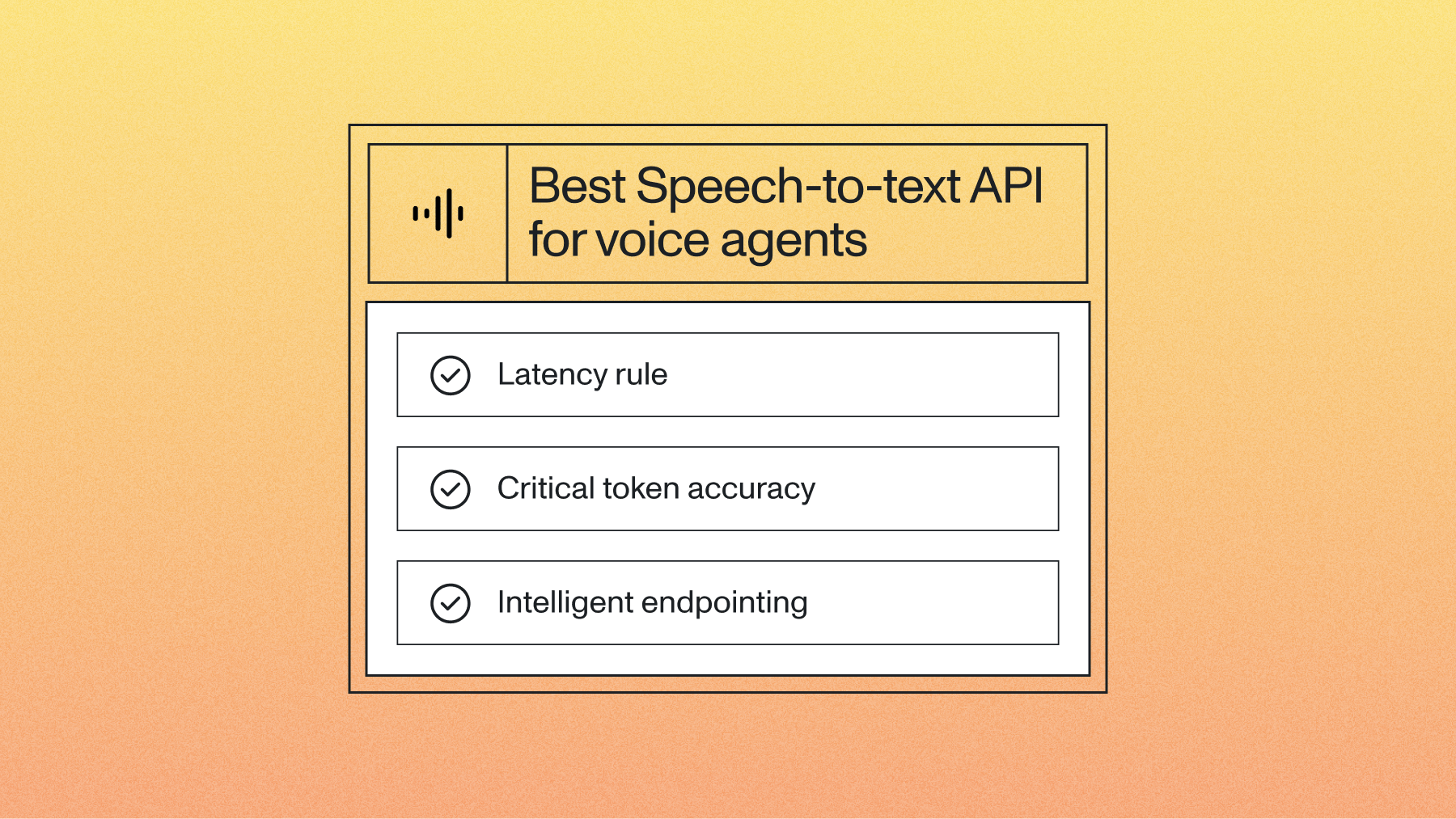

Latency requirements drive everything

Real-time applications demand different latency thresholds, and this drives your entire architecture. Voice agent applications targeting natural conversation need sub-500ms initial response times to maintain conversational flow. Live captioning can tolerate 1-3 second delays, though users notice anything beyond that.

The golden target for voice-to-voice interactions is 800ms total latency under optimal conditions. This includes speech recognition, LLM processing, and text-to-speech synthesis combined. Most developers underestimate how much latency impacts user experience until they test with real users. This is a critical oversight, as a recent study reveals that 95% of people have been frustrated with voice agents at some point. In fact, performance analysis shows that a 95% accurate system that responds in 300ms often provides a better user experience than a 98% accurate system that takes 2 seconds.
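Because the 800ms target is a sum across stages, it helps to budget it explicitly. The sketch below checks a pipeline against that target. The per-stage numbers are hypothetical placeholders, not measurements, so replace them with timings from your own stack.

```python
from __future__ import annotations

VOICE_TO_VOICE_TARGET_MS = 800  # total budget for STT + LLM + TTS, per the target above


def remaining_budget_ms(stage_latencies_ms: dict[str, float],
                        target_ms: float = VOICE_TO_VOICE_TARGET_MS) -> float:
    """Return how much of the target is left (negative means over budget)."""
    return target_ms - sum(stage_latencies_ms.values())


# Hypothetical per-stage timings for one conversational turn.
pipeline = {
    "speech_recognition": 300,  # e.g. a streaming STT API's P50
    "llm_first_token": 350,
    "tts_first_audio": 200,
}

left = remaining_budget_ms(pipeline)
status = "within" if left >= 0 else "over"
print(f"{status} budget by {abs(left):.0f} ms")  # prints "over budget by 50 ms"
```

Seeing the stages side by side makes it clear that shaving time off speech recognition frees budget for the LLM and TTS stages.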

Accuracy vs. speed trade-offs are real

Independent benchmarks reveal that when formatting requirements are relaxed, AssemblyAI and AWS Transcribe achieve the best real-time accuracy. However, applications requiring proper punctuation, capitalization, and formatting often see different performance rankings.
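Rankings shift with formatting because word error rate (WER) depends on how text is normalized before comparison. A minimal sketch: compute WER as word-level edit distance divided by the number of reference words, optionally lowercasing and stripping punctuation first. The normalization rule here is a deliberately simple assumption; benchmark suites usually apply more thorough normalizers.

```python
import re


def normalize(text: str) -> str:
    """Lowercase and drop punctuation (a deliberately simple normalizer)."""
    return re.sub(r"[^\w\s']", "", text.lower())


def wer(reference: str, hypothesis: str, relax_formatting: bool = True) -> float:
    """Word error rate: (substitutions + deletions + insertions) / reference words."""
    if relax_formatting:
        reference, hypothesis = normalize(reference), normalize(hypothesis)
    ref, hyp = reference.split(), hypothesis.split()
    # prev[j] is the edit distance between the ref words seen so far and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr[j] = min(prev[j] + 1,         # deletion
                          curr[j - 1] + 1,     # insertion
                          prev[j - 1] + cost)  # substitution or match
        prev = curr
    return prev[-1] / max(len(ref), 1)


ref = "Hello, Dr. Smith. Your order shipped."
hyp = "hello doctor smith your order shipped"
print(wer(ref, hyp, relax_formatting=True))   # 1/6: only "dr" vs "doctor" differs
print(wer(ref, hyp, relax_formatting=False))  # 5/6: case and punctuation now count
```

The same transcript scores very differently depending on whether formatting counts, which is why you should compare providers under the normalization that matches your application.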

Weigh accuracy against latency rather than optimizing either in isolation. Don't assume higher accuracy always wins: for interactive applications, a faster system with slightly lower accuracy often delivers the better experience.

Language support complexity

Real-time multilingual support remains technically challenging. Most solutions excel in English but show degraded performance in other languages. Marketing claims about "100+ languages supported" rarely translate to production-ready performance across all those languages.

If you're building for global users, test your target languages extensively. Accuracy can drop significantly for non-English languages, particularly with technical vocabulary or regional accents.

Integration complexity matters more than you think

Cloud APIs offer faster time-to-market but introduce external dependencies. Open-source models provide control but require significant engineering resources. Most teams underestimate the engineering effort required to get open-source solutions production-ready, and industry research highlights drawbacks like extensive customization needs, lack of dedicated support, and the burden of managing security and scalability.

Consider your team's expertise and infrastructure constraints when evaluating options. A slightly less accurate cloud API that your team can integrate in days often beats a more accurate open-source model that takes months to deploy reliably.

Cloud API solutions

AssemblyAI Universal-Streaming

AssemblyAI's Universal-Streaming API delivers 300ms latency (P50) with immutable transcripts that won't change mid-conversation.

Key advantages:

- 99.95% uptime SLA for production reliability

- Processes audio faster than real-time speed

- Consistent performance across accents and audio conditions

- Comprehensive documentation for quick integration

Best for: Production voice applications requiring reliable, low-latency transcription, particularly when integrated with voice agent orchestration platforms.

Deepgram Nova-2

Deepgram's Nova-2 model offers real-time capabilities. The platform reports improvements in word error rates and offers some customization options for domain-specific vocabulary.

Best for: Applications requiring specialized domain adaptation where English-only solutions won't work.

OpenAI Whisper (Streaming)

OpenAI's hosted Whisper API transcribes complete audio files rather than live streams, so developers who want Whisper for streaming speech-to-text typically build custom implementations. These solutions usually involve chunking audio and sending each chunk to the Whisper API, achieving latencies around 500ms for conversational AI applications.

This approach offers the high accuracy of the Whisper model but requires engineering effort to manage the streaming logic, endpointing, and connection handling. It provides flexibility but lacks the managed, low-latency infrastructure of dedicated real-time APIs.
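Chunking is the core of these custom implementations. A minimal sketch, assuming 16 kHz mono samples held in memory: split the stream into fixed windows with a small overlap so a word cut at one boundary appears whole in at least one window. The window and overlap sizes are illustrative assumptions, and the sketch leaves out sending each window to a model and merging the overlapping text, which is where most of the engineering effort goes.

```python
from __future__ import annotations

from collections.abc import Iterator, Sequence

SAMPLE_RATE_HZ = 16_000  # assumed input format: 16 kHz mono


def windows(samples: Sequence[float], window_s: float = 2.0,
            overlap_s: float = 0.5) -> Iterator[tuple[float, Sequence[float]]]:
    """Yield (start_time_seconds, window) pairs covering the whole signal."""
    size = int(window_s * SAMPLE_RATE_HZ)
    step = size - int(overlap_s * SAMPLE_RATE_HZ)
    if step <= 0:
        raise ValueError("overlap must be shorter than the window")
    start = 0
    while start < len(samples):
        yield start / SAMPLE_RATE_HZ, samples[start:start + size]
        if start + size >= len(samples):
            break  # this window already reached the end of the audio
        start += step


# Five seconds of silence stands in for captured audio.
audio = [0.0] * (5 * SAMPLE_RATE_HZ)
for t, chunk in windows(audio):
    print(f"window at {t:.1f}s with {len(chunk)} samples")
```

Longer windows give the model more context but add delay before each window can be transcribed, which is one reason these implementations struggle to match dedicated streaming APIs.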

AWS Transcribe

Amazon's Transcribe service provides solid real-time performance within the AWS ecosystem. Pricing starts at $0.024 per minute with extensive language support (100+ languages) and strong enterprise features.

Best for applications already using AWS infrastructure or requiring extensive language support. It's not the best at anything specific, but it works reliably and integrates well with other AWS services.

Google Cloud Speech-to-Text

Google's Speech-to-Text API offers broad language support (125+ languages) but consistently ranks last in independent benchmarks for real-time accuracy. The service works adequately for basic transcription needs but struggles with challenging audio conditions.

Choose this for legacy applications or projects requiring Google Cloud integration where accuracy isn't critical. We wouldn't recommend it for new projects unless you're already locked into Google's ecosystem.

Microsoft Azure Speech Services

Azure's Speech Services provides moderate performance with strong integration into Microsoft's ecosystem. The service offers reasonable accuracy and latency for most applications but doesn't excel in any particular area.

Best for organizations heavily invested in Microsoft technologies or requiring specific compliance features. It's a middle-of-the-road option that works without being remarkable.

Open-source models

WhisperX

WhisperX extends OpenAI's Whisper with faster inference, achieving 4x speed improvements over the base model. It adds word-level timestamps and speaker diarization while maintaining Whisper's accuracy levels.

Advantages: 4x speed improvement over base Whisper, word-level timestamps and speaker diarization, 99+ language support, and full control over deployment and data.

Challenges: Significant engineering effort for production deployment, variable latency (380-520ms in optimized setups), and limited real-time streaming capabilities without additional engineering.

Whisper Streaming

Whisper Streaming variants attempt to create real-time versions of OpenAI's Whisper model. While promising for research applications, production deployment faces significant challenges.

Advantages: Proven Whisper architecture, extensive language support (99+ languages), no API costs beyond infrastructure, and complete control over model and data.

Challenges: 1-5s latency in many implementations, requires extensive engineering work for production optimization, and performance highly dependent on hardware configuration. We wouldn't recommend it for production applications unless you have a dedicated ML engineering team.

Which API or model should you choose?

Your choice depends on specific application requirements and constraints. Here's our honest assessment:

For production voice agents requiring reliability and low latency: AssemblyAI Universal-Streaming provides the best balance of performance and reliability. The 99.95% uptime SLA and ~300ms latency make it suitable for customer-facing applications where downtime isn't acceptable.

For AWS-integrated applications: AWS Transcribe provides solid performance within the AWS ecosystem, particularly for applications already using other AWS services. It's not the best at anything, but it integrates well.

For self-hosted deployment with engineering resources: WhisperX offers a solid open-source option, providing control over deployment and data while maintaining reasonable accuracy levels. Consider this alongside other free speech recognition options if budget constraints are a primary concern.

Proof-of-concept testing methodology

Before committing to a solution, test with your specific use case. Most developers skip this step and regret it later:

- Evaluate with representative audio samples that match your application's conditions

- Test latency under expected load to ensure performance scales

- Measure accuracy with domain-specific terminology relevant to your application

- Assess integration complexity with your existing technology stack

- Validate pricing models against projected usage patterns

Don't trust benchmarks alone. Test with your actual use case. Performance varies significantly based on audio quality, speaker characteristics, and domain-specific terminology.
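To act on the latency step, measure a distribution rather than a single number and compare a tail percentile with your use case's threshold. A minimal sketch using nearest-rank percentiles: the thresholds restate the guidance earlier in this guide (sub-500ms for voice agents, under one second for voice commands, up to 3 seconds for captions), and the sample measurements are made up for illustration.

```python
from __future__ import annotations

import math

# Upper latency limits (ms) taken from the use-case guidance above.
THRESHOLDS_MS = {"voice_agent": 500, "voice_command": 1000, "live_captioning": 3000}


def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile (p in 0-100) of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]


def meets_target(latencies_ms: list[float], use_case: str, p: float = 95) -> bool:
    """True if the p-th percentile latency is within the use case's limit."""
    return percentile(latencies_ms, p) <= THRESHOLDS_MS[use_case]


# Hypothetical time-to-first-transcript measurements from a load test.
samples = [280, 310, 295, 330, 450, 300, 620, 305, 290, 315]
print("P50:", percentile(samples, 50), "ms")  # P50: 305 ms
print("P95:", percentile(samples, 95), "ms")  # P95: 620 ms
for use_case in THRESHOLDS_MS:
    print(use_case, "OK" if meets_target(samples, use_case) else "too slow")
```

Here the median looks comfortably fast, but a single slow response pushes P95 past the voice agent limit, which is exactly the kind of result a median-only benchmark hides.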

Common challenges and limitations

Real-time speech recognition faces four core technical limitations that directly impact application performance:

- Background Noise: Models must distinguish speech from ambient noise, which can be difficult in real-world environments like call centers or public spaces.

- Accents and Dialects: Performance can vary significantly across different accents, dialects, and languages. Thorough testing with representative audio is crucial.

- Speaker Diarization: Identifying who said what in a multi-speaker conversation is complex in a streaming context, as the model has limited information to differentiate voices.

- Cost Management: Streaming transcription is often priced by the second, so managing WebSocket connections efficiently is important to control costs, especially at scale. As cost analysis shows, the total cost of ownership also includes licensing, implementation, and support, making efficient management even more critical.
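Per-second billing makes idle connections a direct cost. A rough sketch, assuming you are billed for the full time a session stays open; the rate below is a hypothetical placeholder, so substitute your provider's published pricing and billing rules (some providers bill differently or apply minimum charges).

```python
from __future__ import annotations

RATE_PER_MINUTE = 0.01  # hypothetical placeholder rate in dollars


def session_cost(open_seconds: float, rate_per_minute: float = RATE_PER_MINUTE) -> float:
    """Cost of one session billed for every second the connection stays open."""
    return open_seconds / 60 * rate_per_minute


def monthly_cost(sessions_per_day: int, speech_s: float, idle_s: float,
                 days: int = 30) -> float:
    """Monthly cost when each session stays open for speech_s + idle_s seconds."""
    return sessions_per_day * days * session_cost(speech_s + idle_s)


# 1,000 calls a day with 180 s of speech each: compare leaving each connection
# open for an extra 60 s versus closing it as soon as the call ends.
with_idle = monthly_cost(1_000, speech_s=180, idle_s=60)
closed_promptly = monthly_cost(1_000, speech_s=180, idle_s=0)
print(f"Idle time adds ${with_idle - closed_promptly:,.2f} per month")
```

Even a modest idle tail per session scales linearly with call volume, so closing connections promptly is one of the cheapest optimizations available.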

Final words

Real-time speech recognition in 2026 offers proven solutions for production applications. Cloud APIs lead in reliability and ease of integration, while open-source models provide control for teams with engineering resources.

Success factors:

- Match solution to your specific latency and accuracy requirements

- Test with representative audio samples from your application

- Evaluate total cost of ownership, not just API pricing

Start with proof-of-concept testing using your actual use case data. This approach reveals which solution performs best for your specific requirements and prevents costly provider switches later.

Ready to implement real-time speech recognition? Explore these step-by-step voice agent examples or try AssemblyAI's real-time transcription API free to see how low-latency speech recognition can transform your voice applications.

Frequently asked questions about real-time speech recognition APIs

Can speech-to-text APIs identify silence or overlapping speech in audio?

Yes, through word-level timestamps and turn detection events. APIs like AssemblyAI provide TurnEvent objects with start/end times and word_is_final flags for managing speaker transitions.
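Word-level timestamps make silence detection straightforward: any gap between one word's end and the next word's start is silence. A minimal sketch, assuming each word arrives as a dict with `text`, `start`, and `end` in milliseconds; confirm the exact field names in your provider's reference. The 500ms threshold is an arbitrary example.

```python
from __future__ import annotations


def find_silences(words: list[dict], min_gap_ms: int = 500) -> list[tuple[int, int]]:
    """Return (gap_start_ms, gap_end_ms) for pauses of at least min_gap_ms."""
    silences = []
    for prev, curr in zip(words, words[1:]):
        gap = curr["start"] - prev["end"]
        if gap >= min_gap_ms:
            silences.append((prev["end"], curr["start"]))
    return silences


# Hypothetical word timings (ms) from a streaming transcript.
words = [
    {"text": "I", "start": 0, "end": 120},
    {"text": "need", "start": 150, "end": 400},
    {"text": "help", "start": 1200, "end": 1500},
    {"text": "please", "start": 1550, "end": 1900},
]
print(find_silences(words))  # [(400, 1200)]
```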

Can AI identify important keywords in a conversation?

Yes. For real-time transcription, you can improve the recognition of specific keywords or phrases by using the keyterms_prompt parameter when establishing the WebSocket connection. Provide an array of important terms to bias the model towards recognizing them correctly. For example, you can pass keyterms_prompt=["Universal-3-Pro"] in your connection URL. This is ideal for product names, jargon, or proper nouns.
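Building the connection URL with the standard library keeps the keyterm list correctly encoded. A minimal sketch: the endpoint, the `sample_rate` parameter, and the JSON encoding of the `keyterms_prompt` array are assumptions for illustration, and authentication is omitted, so confirm all of these against the streaming API reference before use.

```python
from __future__ import annotations

import json
from urllib.parse import urlencode

# Assumed endpoint; verify against the provider's streaming documentation.
BASE_URL = "wss://streaming.assemblyai.com/v3/ws"


def streaming_url(keyterms: list[str], sample_rate: int = 16_000) -> str:
    """Build a connection URL that passes keyterms_prompt as a JSON array."""
    params = {
        "sample_rate": sample_rate,
        "keyterms_prompt": json.dumps(keyterms),  # e.g. '["Universal-3-Pro"]'
    }
    return f"{BASE_URL}?{urlencode(params)}"


print(streaming_url(["Universal-3-Pro"]))
```

Letting `urlencode` handle the brackets and quotes avoids hand-escaping mistakes when keyterms contain spaces or punctuation.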

How does real-time speech recognition differ from batch processing?

Real-time speech recognition processes audio through streaming WebSocket connections with sub-second latency, while batch processing requires a complete audio file before transcription begins. This makes batch unsuitable for interactive applications but often more accurate for recorded content.

What causes latency in real-time speech recognition systems?

Four factors create latency: network transmission (50-100ms), audio buffering (250ms chunks), model processing (100-300ms), and endpointing detection (200-500ms). Optimize by reducing chunk sizes and using edge servers.
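Chunk size translates directly into buffering delay, because the client cannot send a chunk until that much audio has been captured. A quick calculation, assuming 16 kHz, 16-bit mono PCM (a common input format for streaming APIs, but check what yours expects):

```python
from __future__ import annotations

SAMPLE_RATE_HZ = 16_000
BYTES_PER_SAMPLE = 2  # 16-bit PCM
CHANNELS = 1


def chunk_bytes(chunk_ms: int) -> int:
    """Bytes of raw PCM audio in a chunk of the given duration."""
    return SAMPLE_RATE_HZ * BYTES_PER_SAMPLE * CHANNELS * chunk_ms // 1000


for ms in (250, 100, 50):
    print(f"{ms:>3} ms chunk = {chunk_bytes(ms):>5} bytes, "
          f"adds up to {ms} ms of buffering delay")
```

Smaller chunks cut buffering delay but mean more messages per second, so very small chunks trade latency for network and framing overhead.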

Can real-time speech recognition work reliably offline?

Yes, but it requires significant computational resources (4GB+ RAM, GPU recommended). On-device models like WhisperX achieve 380-520ms latency, but with reduced accuracy compared to cloud APIs.
