JavaScript and Node.js Speech-to-Text

Learn how to convert audio and video files from speech to text using AssemblyAI's API and JavaScript.

As a recent market survey shows, companies are rapidly moving from merely experimenting with conversation intelligence to full-scale execution. This guide will help you get started by showing you how to convert audio and video files from speech to text using AssemblyAI's API and JavaScript.

Getting started with a new technology can be daunting, but we're going to break down speech-to-text transcription with Node.js to make it easier!

In this post, we will make a basic Node.js command-line interface app (often abbreviated to CLI app) using the official AssemblyAI JavaScript SDK. As of a recent SDK update, the SDK has evolved from the original Node SDK and now supports the browser, Deno, and Cloudflare Workers in addition to Node.js. We will pass the URL or local file path of an audio recording as a command-line argument. The SDK handles uploading the file and polling for the results, and returns the completed transcript once it's ready. Finally, we'll print the transcribed text to the screen!

Prerequisites

- A free AssemblyAI account

- A code editor such as VS Code

- Node.js and npm

If you would like to see the completed code project, it is available at this GitHub repository.

Setting up your development environment

Create a project directory and initialize Node.js:

```bash
mkdir transcribe
cd transcribe
npm init -y
```

Create the required project files:

- Windows (PowerShell):

```powershell
New-Item transcribe.js, .env
```

- macOS/Linux:

```bash
touch transcribe.js && touch .env
```

Install the required dependencies:

- `assemblyai`: the official AssemblyAI JavaScript SDK.

- `dotenv`: loads environment variables from the .env file, so your API key stays out of your source code.

```bash
npm i assemblyai dotenv
```

Open the .env file in your code editor and add an environment variable to store your AssemblyAI API key. Replace YOUR_API_KEY with the key shown in your AssemblyAI dashboard:

```
ASSEMBLYAI_API_KEY="YOUR_API_KEY"
```

Setting up secure authentication

Your API key is a secret that grants access to your AssemblyAI account. Since data privacy and security represent a significant industry challenge, you should never expose your key in client-side code or commit it to public repositories.

The dotenv package we installed loads these variables from the .env file into process.env in your Node.js application. This allows you to access your key securely in your code without hardcoding it.

Throughout this guide, we'll access the key using process.env.ASSEMBLYAI_API_KEY. Always ensure your .gitignore file includes .env to prevent accidental exposure.
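If the .env file is missing or the variable name is misspelled, `process.env.ASSEMBLYAI_API_KEY` is simply `undefined`. The problem then only shows up later as an authentication error. The following is a minimal sketch of a fail-fast check. `requireEnv` is a hypothetical helper and is not part of dotenv or the AssemblyAI SDK.

```javascript
// Hypothetical helper (not part of dotenv or the AssemblyAI SDK):
// read a required environment variable, or fail fast with a clear message.
// `env` defaults to process.env but can be replaced with a plain object for testing.
function requireEnv(name, env = process.env) {
  const value = env[name];
  // Treat missing, empty, and whitespace-only values as "not set".
  if (typeof value !== 'string' || value.trim() === '') {
    throw new Error(`Missing required environment variable: ${name}`);
  }
  return value.trim();
}

// Possible usage in transcribe.js, after `import 'dotenv/config';`:
// const apiKey = requireEnv('ASSEMBLYAI_API_KEY');
```

Failing at startup means the error message names the actual problem (a missing variable) rather than an opaque API response.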

Transcribing an Audio File with the AssemblyAI SDK

Before starting, add "type": "module" to your package.json file. This will allow you to use modern ES Modules syntax.

Open the transcribe.js file we created earlier. The entire process of uploading a file and retrieving the transcript can be handled in a single file with just a few lines of code using the AssemblyAI JavaScript SDK.

Copy and paste the following code into transcribe.js:

```javascript
import 'dotenv/config';
import { AssemblyAI } from 'assemblyai';

// Initialize the AssemblyAI client with your API key
const client = new AssemblyAI({
  apiKey: process.env.ASSEMBLYAI_API_KEY,
});

// Get the audio source (local file path or public URL) from command-line arguments
const audioSrc = process.argv[2];

async function run() {
  // Exit early with a usage hint if no audio source was given
  if (!audioSrc) {
    console.error('Usage: node transcribe.js <audio-file-path-or-url>');
    process.exitCode = 1;
    return;
  }

  console.log(`Transcribing ${audioSrc}...`);

  try {
    const transcript = await client.transcripts.transcribe({
      audio: audioSrc,
    });

    if (transcript.status === 'error') {
      console.error('Transcription failed:', transcript.error);
      process.exitCode = 1;
      return;
    }

    console.log('Transcription successful!');
    console.log('Text:', transcript.text);
  } catch (error) {
    console.error('An error occurred:', error);
    process.exitCode = 1;
  }
}

run();
```

This modern approach simplifies the process significantly:

- We import the `AssemblyAI` client from the SDK.

- We instantiate the client and pass it the API key that dotenv loaded into `process.env`.

- The `client.transcripts.transcribe()` method takes the audio source (a local file path or a public URL) through its `audio` parameter.

- The SDK handles the entire process in the background: uploading the file, queueing the transcription job, and polling for the result until it's complete.

- Using `async/await`, we wait for the promise to resolve with the final transcript object.

- We then check the status and print the transcribed text or any errors.

This eliminates the need for manual HTTP requests, polling logic, and separate upload/download functions.

Transcribing local files and public URLs

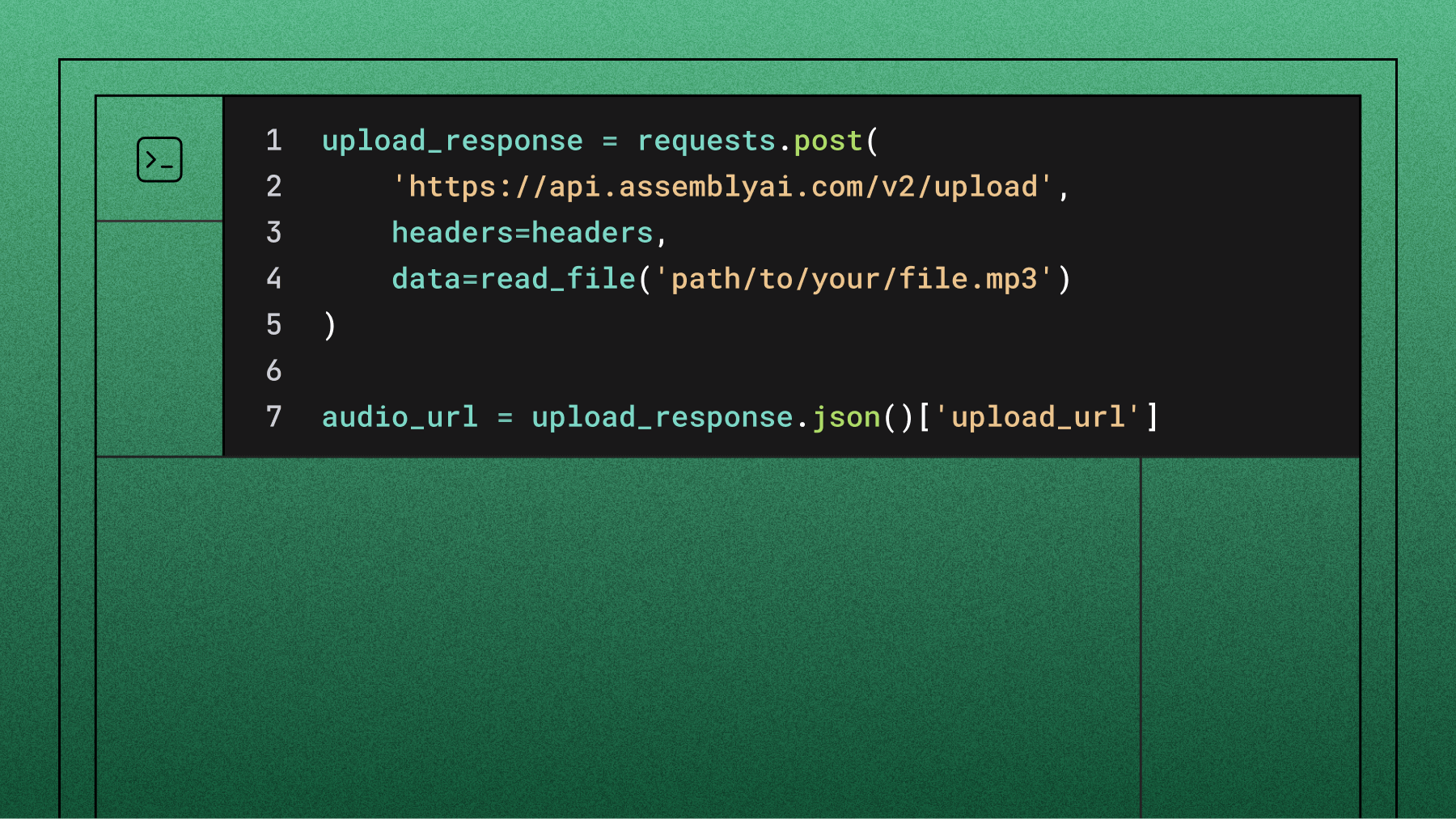

The AssemblyAI JavaScript SDK abstracts away the distinction between local files and public URLs. The same client.transcripts.transcribe() method can be used for both, making your code cleaner and more versatile.

The SDK automatically handles the two-step process of uploading a local file to a temporary URL and then submitting it for transcription. You only need to provide the file path.

```javascript
const transcript = await client.transcripts.transcribe({
  // Can be a local file path or a public URL
  audio: './path/to/your/local/audio.mp3',
});

console.log(transcript.text);
```

This single method works for both local files and remote URLs, simplifying the development process significantly.
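The SDK makes this decision for you. Still, it can help to see what "local file vs. public URL" means in practice, for example when you want to validate the command-line argument before calling the API. The helper below is a hypothetical sketch and not the SDK's internal logic. It treats `http:` and `https:` URLs as remote and everything else as a local path.

```javascript
// Hypothetical helper, not the SDK's internal implementation:
// classify an audio source string as a remote URL or a local file path.
function classifyAudioSource(src) {
  try {
    const url = new URL(src);
    // Only http(s) URLs are treated as remote sources.
    if (url.protocol === 'http:' || url.protocol === 'https:') {
      return 'remote';
    }
  } catch {
    // new URL() throws on relative paths such as './audio.mp3'.
  }
  // Relative paths, absolute paths, and non-http schemes fall through to "local".
  return 'local';
}

// Possible usage:
// console.log(`${classifyAudioSource(process.argv[2])} source: ${process.argv[2]}`);
```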

Getting the transcription result

The AssemblyAI JavaScript SDK simplifies the process of getting a transcription result by abstracting away the manual polling. When you call client.transcripts.transcribe(), the method handles the entire lifecycle for you:

- Submits the audio file for transcription.

- Polls the API in the background to check the transcription status.

- Waits until the status is `completed` or `error`.

- Returns a promise that resolves with the final transcript object (whose `status` may be `error`) or rejects if the request itself fails.

This means you don't need a separate download script or any custom polling logic. The entire operation is handled by a single asynchronous call.
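To make this abstraction concrete, here is roughly what a polling loop does. This is a simplified sketch of the pattern, not the SDK's actual implementation. `getTranscript` is a hypothetical async function you would supply, for example a wrapper around `client.transcripts.get(id)`. The interval and timeout defaults are arbitrary.

```javascript
// Simplified polling loop (a sketch of the pattern, not the SDK's code).
// getTranscript: hypothetical async function that returns { status, ... } for a transcript id.
async function pollUntilDone(getTranscript, id, { intervalMs = 3000, timeoutMs = 10 * 60 * 1000 } = {}) {
  const deadline = Date.now() + timeoutMs;
  while (true) {
    const transcript = await getTranscript(id);
    // 'completed' and 'error' are terminal; 'queued' and 'processing' mean "ask again later".
    if (transcript.status === 'completed' || transcript.status === 'error') {
      return transcript;
    }
    if (Date.now() >= deadline) {
      throw new Error(`Timed out waiting for transcript ${id}`);
    }
    // Pause between requests instead of polling in a tight loop.
    await new Promise((resolve) => setTimeout(resolve, intervalMs));
  }
}
```

A loop like this is the boilerplate that `transcribe()` saves you from writing and maintaining.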

Error handling with the SDK

With the SDK, error handling becomes much simpler. You can wrap the transcribe call in a standard try...catch block to handle any issues that occur during the process, whether it's a network failure or a transcription error from the API.

Our transcribe.js script already includes this robust error handling:

```javascript
// ... inside the run() async function
try {
  const transcript = await client.transcripts.transcribe({ audio: audioSrc });

  // The SDK automatically polls until the transcript is complete or failed.
  // We just need to check the final status.
  if (transcript.status === 'error') {
    console.error('Transcription failed:', transcript.error);
    return;
  }

  console.log('Transcription successful!');
  console.log('Text:', transcript.text);
} catch (error) {
  // This will catch network errors or other issues with the request itself.
  console.error('An error occurred:', error);
}
```

This modern approach is more reliable and significantly reduces the amount of boilerplate code you need to write and maintain.
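The two failure paths in the code above are easy to mix up:

- A rejected promise means the request itself failed.
- A resolved transcript with `status: 'error'` means the API accepted the job but could not transcribe the audio.

The hypothetical wrapper `toOutcome` below folds both paths into one result shape. It is a sketch, not part of the SDK, and the transcribe call is passed in so the logic can be exercised without network access.

```javascript
// Hypothetical wrapper: fold both failure paths into one result shape.
// transcribeFn: any async function returning a transcript-like object,
// e.g. () => client.transcripts.transcribe({ audio: audioSrc }).
async function toOutcome(transcribeFn) {
  try {
    const transcript = await transcribeFn();
    if (transcript.status === 'error') {
      // The request succeeded, but the API reports that transcription failed.
      return { ok: false, kind: 'transcription', message: transcript.error };
    }
    return { ok: true, text: transcript.text };
  } catch (error) {
    // The request itself failed (for example, a network or authentication problem).
    const message = error instanceof Error ? error.message : String(error);
    return { ok: false, kind: 'request', message };
  }
}
```

Because the transcribe call is injectable, you can test both branches with a fake function instead of a real API call.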

Trying it out

From your command line, run the script with a path to a local audio file or a public URL. Remember to be in the same directory as your transcribe.js file.

You can use the URL provided below or one of your choosing. Just make sure you can play it in a browser first.

```bash
node transcribe.js https://s3-us-west-2.amazonaws.com/blog.assemblyai.com/audio/8-7-2018-post/7510.mp3
```

If all went well, the script will wait for the transcription to complete and then print the final text to your console.

Production deployment considerations

Moving from a simple script to a production application introduces new challenges. While our CLI app is a great start, here are a few things to consider for a real-world system.

Webhooks vs. Polling: Our current script uses the SDK's transcribe() method, which polls for results automatically. This is simple and effective for a command-line tool. However, for a scalable web service, polling can be inefficient. A better approach is to use webhooks. The SDK supports this with the submit() method, where you can provide a webhook_url in your transcription request. AssemblyAI will then send a POST request to your specified URL once the transcription is complete, which is a more resource-efficient pattern for server-based applications.
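On the receiving side, your server needs to parse the notification before it does anything else. The sketch below shows only that parsing step. It assumes, per AssemblyAI's webhook documentation, that the POST body is JSON with `transcript_id` and `status` fields; verify this against the current docs. `parseTranscriptWebhook` is a hypothetical helper, and wiring it into an HTTP framework is left out.

```javascript
// Hypothetical parsing step for a webhook sent after submit() with a webhook_url.
// Assumes the POST body is JSON containing `transcript_id` and `status`.
function parseTranscriptWebhook(rawBody) {
  let payload;
  try {
    payload = JSON.parse(rawBody);
  } catch {
    return { valid: false, reason: 'body is not valid JSON' };
  }
  // Guard against bodies like "null" before destructuring.
  const { transcript_id: transcriptId, status } = payload ?? {};
  if (typeof transcriptId !== 'string' || transcriptId === '') {
    return { valid: false, reason: 'missing transcript_id' };
  }
  // Only terminal states are expected in a completion notification.
  if (status !== 'completed' && status !== 'error') {
    return { valid: false, reason: `unexpected status: ${status}` };
  }
  return { valid: true, transcriptId, status };
}
```

A route handler would typically respond with a 2xx status quickly. For a `completed` notification, it would then fetch the full transcript, for example with `client.transcripts.get(transcriptId)`.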

Concurrency and Scale: The AssemblyAI API is built to handle high volumes of concurrent requests. Your application's architecture, whether it's a monolithic server, microservices, or serverless functions, should be designed to manage this concurrency. For example, you could use a message queue to manage transcription jobs, allowing your system to scale horizontally as demand increases.
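A message queue is the robust option for scaling horizontally. The underlying idea, never running more than N transcription jobs at once, can also be sketched in a single process. `runWithConcurrency` below is a hypothetical, simplified limiter. It is a swapped-in technique, not a message queue, and not part of the SDK. Each task would typically wrap a call such as `client.transcripts.transcribe(...)`. Choose the limit to stay within whatever concurrency limits apply to your account.

```javascript
// Hypothetical in-process limiter: run async tasks with at most `limit` in flight.
// tasks: array of zero-argument async functions,
// e.g. () => client.transcripts.transcribe({ audio }).
async function runWithConcurrency(tasks, limit) {
  const results = new Array(tasks.length);
  let next = 0;

  // Each worker claims the next unstarted task index until none remain.
  // Claiming happens synchronously, so two workers never take the same index.
  async function worker() {
    while (next < tasks.length) {
      const index = next++;
      try {
        results[index] = { ok: true, value: await tasks[index]() };
      } catch (error) {
        // Record the failure instead of aborting the whole batch.
        results[index] = { ok: false, error };
      }
    }
  }

  const workerCount = Math.min(Math.max(1, limit), tasks.length);
  await Promise.all(Array.from({ length: workerCount }, () => worker()));
  return results;
}
```

Results keep the order of the input tasks, so you can match each result back to its audio file. A failed job does not cancel the others.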

What next?

You have successfully written a command-line application that sends a local audio file or public URL to AssemblyAI, waits for the transcription to complete, and prints it on screen.

For more information on JavaScript and AssemblyAI, take a look at some of our other resources:

- Summarize audio with LLMs in Node.js

- Node.js Speech-to-Text with Punctuation, Casing, and Formatting

- How To Convert Voice To Text Using JavaScript

- How to Create SRT Files for Videos in Node.js

With accuracy and performance ranking as top criteria for developers choosing an AI vendor according to research on developer priorities, you're ready to build with a leading speech-to-text API. Try our API for free and start transcribing with just a few lines of code.

Frequently asked questions about JavaScript and Node.js speech-to-text

Can I get speaker labels with my transcript?

Yes. Set speaker_labels: true in your transcription request. The primary result is the utterances array in the transcript object, which provides a turn-by-turn breakdown of the conversation with each utterance assigned to a speaker (e.g., Speaker A, Speaker B). Each word in the transcript also includes a speaker key, but iterating through the utterances array is the most common way to work with diarized transcripts.
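A common next step after passing `speaker_labels: true` to `transcribe()` is to print the conversation turn by turn. The sketch below assumes each entry in `transcript.utterances` has `speaker`, `text`, and `start` fields, with `start` in milliseconds. The `formatUtterances` helper itself is hypothetical.

```javascript
// Hypothetical formatter for a diarized transcript's utterances array.
// Assumes each utterance looks like { speaker: 'A', text: '...', start: 1250 } with start in ms.
function formatUtterances(utterances) {
  return (utterances ?? []).map(({ speaker, text, start }) => {
    // Convert the start offset to an m:ss timestamp.
    const totalSeconds = Math.floor(start / 1000);
    const minutes = Math.floor(totalSeconds / 60);
    const seconds = String(totalSeconds % 60).padStart(2, '0');
    return `[${minutes}:${seconds}] Speaker ${speaker}: ${text}`;
  });
}

// Possible usage (requires network access and an API key):
// const transcript = await client.transcripts.transcribe({ audio: audioSrc, speaker_labels: true });
// formatUtterances(transcript.utterances).forEach((line) => console.log(line));
```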

How can I improve accuracy for specific names or jargon?

You can use the keyterms_prompt parameter to increase the recognition probability for specific words or phrases. This is particularly useful for proper nouns, industry-specific terminology, or acronyms. For our Slam-1 model, this feature leverages contextual understanding to improve accuracy for not just the exact terms but also related phrases. For the Universal model, it directly boosts the likelihood of the specified terms appearing in the transcript.
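Key terms are passed as an array of strings alongside the audio source. The helper below is a hypothetical convenience. It trims the terms, drops empty entries, and removes case-insensitive duplicates before building the request parameters. It assumes `keyterms_prompt` is accepted as a top-level parameter of `transcribe()`, as described above. Check the current API reference for which speech models support it and for any limits on the number or length of terms.

```javascript
// Hypothetical helper: build transcription params with a cleaned-up keyterms_prompt list.
function buildParamsWithKeyterms(audio, terms) {
  const seen = new Set();
  const keyterms = [];
  for (const raw of terms) {
    const term = raw.trim();
    const key = term.toLowerCase();
    // Skip blanks and case-insensitive duplicates, keeping the first spelling seen.
    if (term === '' || seen.has(key)) continue;
    seen.add(key);
    keyterms.push(term);
  }
  return { audio, keyterms_prompt: keyterms };
}

// Possible usage (requires network access and an API key):
// const params = buildParamsWithKeyterms(audioSrc, ['AssemblyAI', 'Slam-1', 'diarization']);
// const transcript = await client.transcripts.transcribe(params);
```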

What is the best way to handle very large audio files?

Our asynchronous API, which the JavaScript SDK uses by default, is designed for long recordings up to our service limits (currently 10 hours). Simply provide the local file path or a public URL to the transcribe() method. Within those limits, the SDK manages the upload, transcription, and polling the same way regardless of file size.
