Top 3 ways to enhance AI video editing tools with Speech AI

Learn how Speech AI models help create valuable tools for AI video editing platforms.

Top video editing platforms, like Veed, are investing in AI technology to launch AI-powered tools that make it easier for users to create high-quality videos quickly.

This article examines how Speech AI models can serve as foundational building blocks for these advanced AI video editing tools and platforms.

What is AI video editing?

Before we jump into Speech AI models, let’s first look more closely at what AI video editing is.

AI video editing replaces clunky video editing software to help turn anyone into a content creator—from new YouTubers to classroom teachers to global enterprise marketers. While tool suites vary by platform, many AI video editing platforms include tools that allow users to add AI-generated captions to videos, remove background noise, or add visual effects, sound design, looping, cropping, compression, and more.

What are the benefits of using AI video editing tools?

The top three benefits of using AI video editing tools include:

- Eliminating the tedious manual review process of editing videos and making long videos more digestible for more efficient collaboration.

- Quickly discovering and surfacing important sections of videos to create highlights and summaries.

- Adding captions to videos for better accessibility and compliance, as well as enhanced searchability, indexing, and discovery.

Speech AI for AI video editing

Speech AI models can be used to build tools that transcribe and analyze large amounts of video and audio data, making them well-suited for AI video editing tools and platforms.

Speech AI consists of three main components:

- AI Speech-to-Text: Automatic Speech Recognition, or ASR, systems are more accurate, affordable, and accessible than ever. Top speech recognition models can now transcribe audio and video streams at near-perfect accuracy for both real-time and asynchronous transcription.

- Audio Intelligence: Audio Intelligence models help users analyze these transcriptions and build high-ROI tools on top of the transcription data. Audio Intelligence models include Summarization, Topic Detection, Sentiment Analysis, PII Redaction, and more.

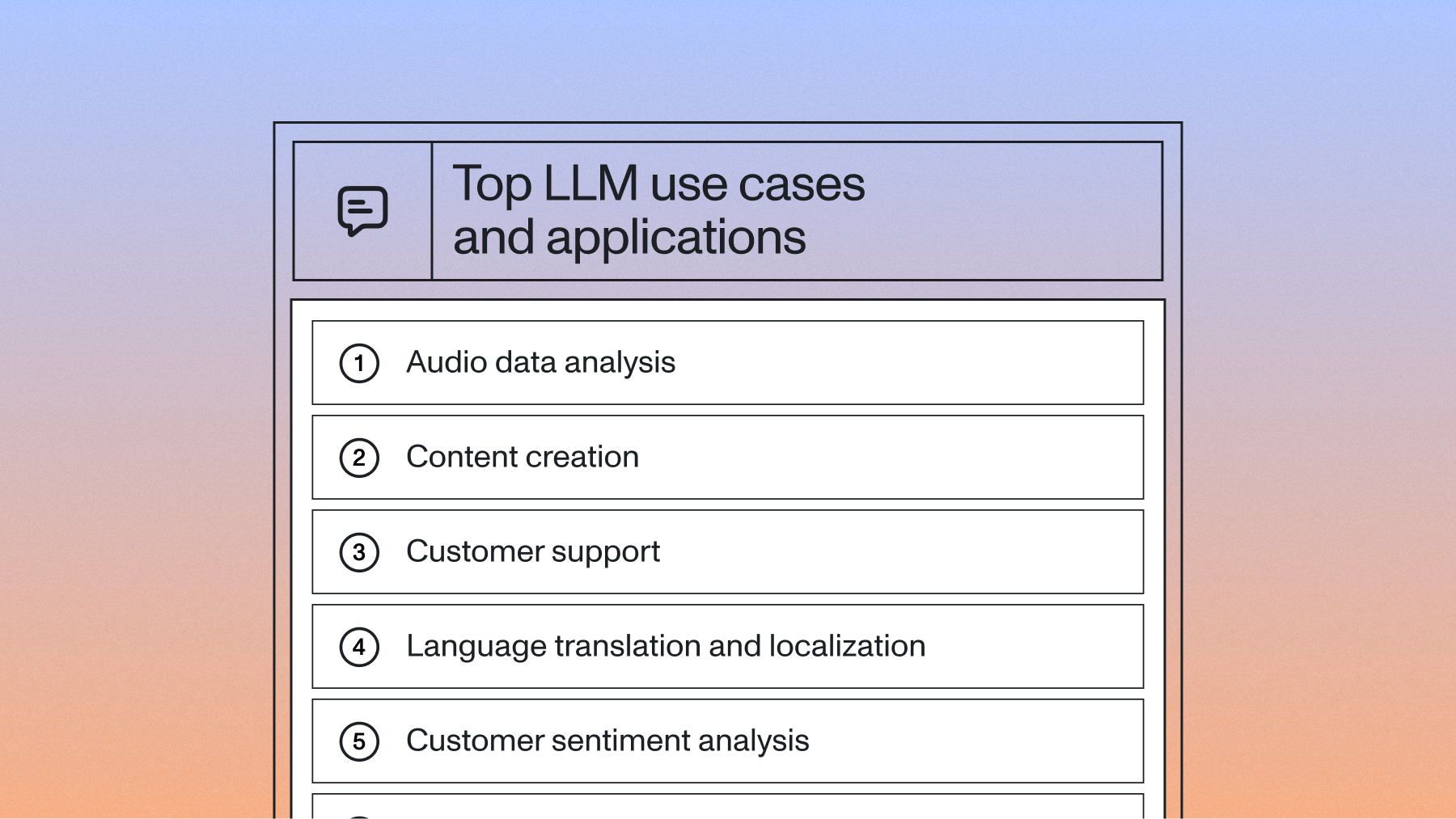

- Large Language Models (LLMs): Large Language Models, or LLMs, are another powerful analysis tool for voice data. For example, LLM gateway is a framework that lets users leverage a multitude of LLM capabilities for voice data, such as generating custom summaries, providing answers to specific questions, or generating lists of action items.

Let’s look more closely at three important ways Speech AI can improve AI video editing for users.

1. Add captions automatically

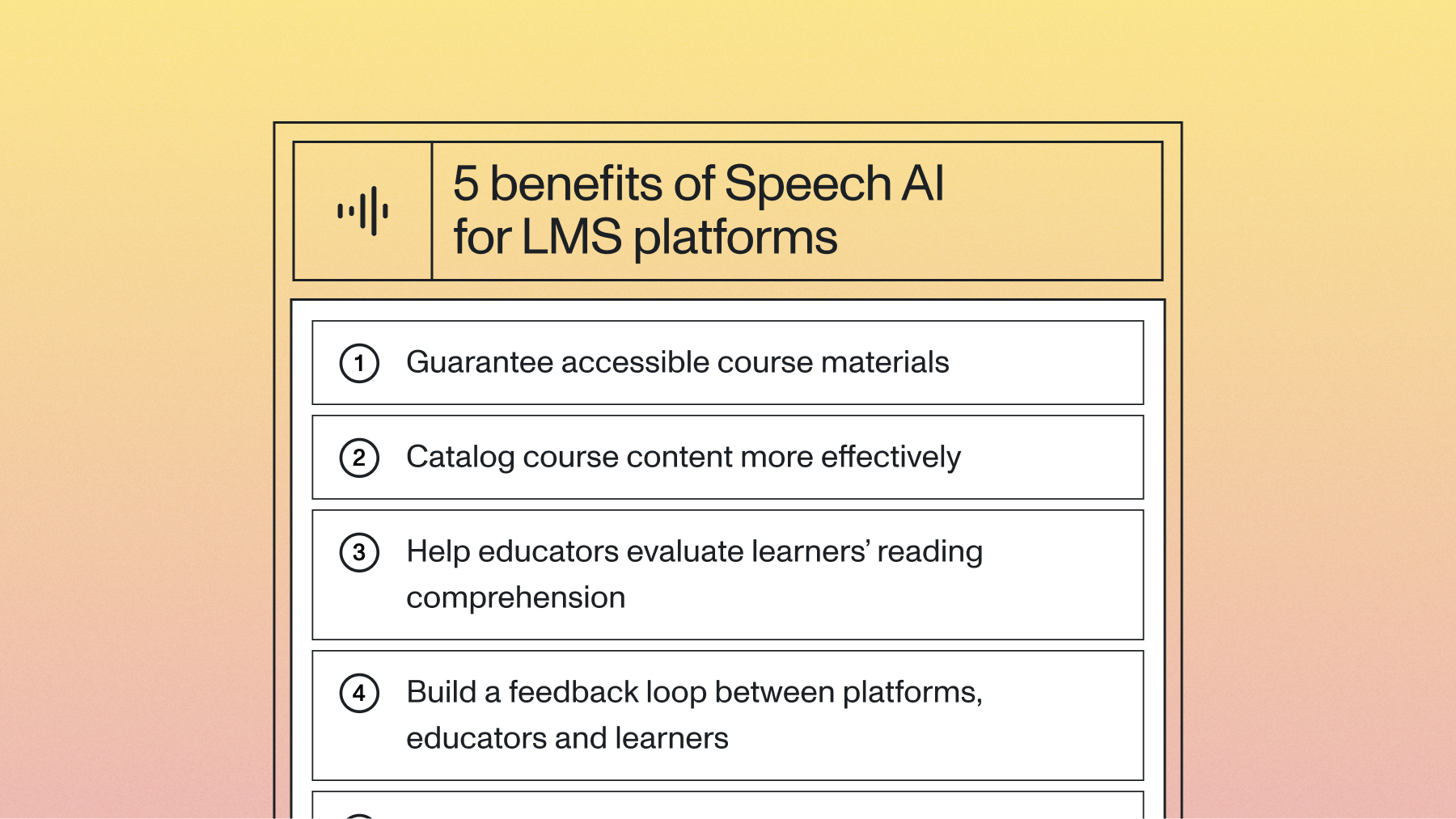

Video editing platforms must facilitate easy, automatic audio transcription so users can add accurate captions at the click of a button. This feature increases the accessibility of videos, whether it be for a personal YouTube video or a company Zoom meeting.

Captions also make it easier for viewers to watch and understand video, even when their sound is off. Sixty-nine percent of people view video without sound in public spaces; more than 25% do so in private spaces as well. Viewers are also 80% more likely to watch a video with captions than one without. Video editing platforms need to make it easy to automatically add these accurate captions to videos so users can meet the needs of their viewers.

Captions can also be used as “previews” that highlight a video’s content when potential viewers hover over the video, improving user experience and engagement. Adding captions also makes videos more discoverable, and thus searchable, as search engines can crawl the text for relevant keywords. It even makes them more shareable: videos with captions are shared 15% more than those without captions.

Video editing tools are also using transcriptions to automatically cut out silences and mistakes from videos to streamline the editing process.
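As a rough illustration of how word timings could drive silence removal, the sketch below looks for gaps between consecutive words that exceed a threshold. The word dictionaries (`text`, `start`, and `end` in milliseconds) and the 700 ms default are assumptions made for this example, not the output format of any particular API.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical word shape: {"text": str, "start": int, "end": int} (times in ms).
Word = Dict[str, Any]


def find_silences(words: List[Word], min_gap_ms: int = 700) -> List[Tuple[int, int]]:
    """Return (start_ms, end_ms) spans between words lasting at least min_gap_ms."""
    silences = []
    for prev, curr in zip(words, words[1:]):
        gap = curr["start"] - prev["end"]
        if gap >= min_gap_ms:
            silences.append((prev["end"], curr["start"]))
    return silences


words = [
    {"text": "Welcome", "start": 0, "end": 400},
    {"text": "back", "start": 450, "end": 700},
    {"text": "everyone", "start": 2000, "end": 2600},
]
print(find_silences(words))  # [(700, 2000)]
```

An editor could then offer each returned span as a suggested cut rather than removing it automatically, since some pauses are intentional.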

If accuracy is a concern, look for an API that offers Confidence Scores for its transcriptions. ASR technology is getting close to human-level accuracy, but it isn’t at 100% just yet. Typically, Confidence Scores work by providing a value from 0 to 100, with 0 being not accurate at all and 100 being perfect accuracy. If a platform or its users need a perfect, 100% accurate transcript, Confidence Scores drastically cut down on the time required to manually edit and review transcripts. Areas with lower confidence scores can be flagged and resolved with human transcription, without having to transcribe the entire audio stream again manually.

Transcriptions can also be translated into a multitude of languages, for greater reach and utility.

The following use case is one specific example of how Speech-to-Text transcription can be used to create an AI subtitle generator, one popular subset of video editing platforms.
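To make the review workflow concrete, here is a minimal sketch that groups consecutive low-confidence words into spans a human reviewer could jump to. It assumes each word carries a `confidence` value on the 0 to 100 scale described above, plus `start` and `end` times in milliseconds; the field names and the threshold of 80 are hypothetical.

```python
def review_spans(words, threshold=80):
    """Merge runs of consecutive low-confidence words into (start, end, text) spans."""
    spans = []
    current = None  # the span being built, or None when the last word was confident
    for w in words:
        if w["confidence"] < threshold:
            if current is None:
                current = {"start": w["start"], "end": w["end"], "text": [w["text"]]}
            else:
                current["end"] = w["end"]
                current["text"].append(w["text"])
        elif current is not None:
            spans.append(current)
            current = None
    if current is not None:
        spans.append(current)
    return [(s["start"], s["end"], " ".join(s["text"])) for s in spans]


words = [
    {"text": "Our", "start": 0, "end": 200, "confidence": 98},
    {"text": "Kubernetes", "start": 220, "end": 900, "confidence": 61},
    {"text": "cluster", "start": 920, "end": 1300, "confidence": 74},
    {"text": "is", "start": 1320, "end": 1400, "confidence": 99},
]
print(review_spans(words))  # [(220, 1300, 'Kubernetes cluster')]
```

Only the flagged spans need a second listen, which is where the time savings come from.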

Use case: AI subtitle generators

AI subtitle generators automatically transcribe speech from audio and video files with the help of AI Speech-to-Text models. Users typically must follow a five-step process when using an AI subtitle generator:

- Upload a video file.

- Use the AI subtitle generator to generate AI-powered subtitles.

- Manually edit the subtitles for accuracy, where needed.

- Alter the style, color, font, animation, etc. of the subtitles on the video itself.

- Export the video with the hardcoded subtitles displayed directly on the video.
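The generation step above can be sketched as a conversion from timed words to the widely used SRT subtitle format. This is an illustrative example only: the word dictionaries (`text`, `start`, `end` in milliseconds) and the 32-character cue limit are assumptions, and a production tool would likely also split cues on pauses and punctuation.

```python
def to_srt_time(ms):
    """Format milliseconds as an SRT timestamp: HH:MM:SS,mmm."""
    hours, rem = divmod(ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    seconds, millis = divmod(rem, 1000)
    return f"{hours:02}:{minutes:02}:{seconds:02},{millis:03}"


def words_to_srt(words, max_chars=32):
    """Group timed words into cues of at most max_chars and render them as SRT."""
    cues, line = [], []
    for w in words:
        candidate = " ".join(x["text"] for x in line + [w])
        if line and len(candidate) > max_chars:
            cues.append(line)  # current cue is full, start a new one
            line = [w]
        else:
            line.append(w)
    if line:
        cues.append(line)

    blocks = []
    for i, cue in enumerate(cues, start=1):
        text = " ".join(w["text"] for w in cue)
        timing = f"{to_srt_time(cue[0]['start'])} --> {to_srt_time(cue[-1]['end'])}"
        blocks.append(f"{i}\n{timing}\n{text}\n")
    return "\n".join(blocks)


sample_words = [
    {"text": "Hello", "start": 0, "end": 400},
    {"text": "world", "start": 450, "end": 900},
]
print(words_to_srt(sample_words))
```

The resulting text can be saved as a `.srt` file for manual editing, or passed to a rendering step that burns the subtitles into the video.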

Learn how to create video subtitles in just 5 lines of code

2. Support video searchability and indexing

While accurate transcription helps support basic searchability, incorporating additional Audio Intelligence models and LLMs into AI video editing tools can support searchability and indexing to a greater degree.

In addition to adding captions, video editing platforms can provide AI-powered tools that allow users to:

- Auto-tag video content with relevant tags. This categorizes videos and automates SEO, significantly improving search discoverability.

- Search across videos, create highlight videos (for meeting digests or social media posts), or review long videos in a few minutes.

- Add timestamps to quickly scan through video segments or improve a table of contents.

- Add speaker labels to videos with multiple speakers.

- Flag the most important sections of videos.

To create these innovative tools, there are a few Audio Intelligence models that can help: Entity Detection, Auto Chapters/Summarization, and Speaker Diarization.

Entity Detection, also sometimes referred to as Named Entity Recognition, identifies and classifies key information in a transcription text. Common entities that can be detected include dates, email addresses, phone numbers, locations, occupations, and nationalities. Entity Detection can be used to create relevant video tags or identify commonalities in video content.
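For example, a platform might turn detected entities into suggested video tags by counting how often each one appears. The sketch below assumes entities arrive as dictionaries with hypothetical `entity_type` and `text` fields; the choice of which entity types make useful tags is also an assumption.

```python
from collections import Counter


def tags_from_entities(entities, allowed_types=("location", "occupation", "nationality"), top_n=5):
    """Return the most frequent entity texts of the allowed types as lowercase tags."""
    counts = Counter(
        e["text"].strip().lower()
        for e in entities
        if e["entity_type"] in allowed_types
    )
    return [tag for tag, _ in counts.most_common(top_n)]


entities = [
    {"entity_type": "location", "text": "Paris"},
    {"entity_type": "occupation", "text": "chef"},
    {"entity_type": "location", "text": "paris"},
    {"entity_type": "email_address", "text": "hello@example.com"},
]
print(tags_from_entities(entities))  # ['paris', 'chef']
```

Filtering by type keeps personal details such as email addresses and phone numbers out of public-facing tags.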

Auto Chapters is a summarization model that provides a “summary over time” for audio streams. It works by (a) breaking a text into logical chapters, like where the conversation changes topics, and then (b) generating a short summary of each of these chapters. Auto Chapters is a useful tool that can help users quickly create tables of contents for YouTube or an online learning class, review videos in a short amount of time, or even create a summary of the most important video segments to push out internally or externally.
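As a small sketch of the table-of-contents idea, the code below converts chapter data into the `M:SS Title` lines commonly used in YouTube descriptions. The chapter dictionaries, with a `start` time in milliseconds and a short `headline`, are an assumed shape for illustration.

```python
def format_timestamp(ms):
    """Format milliseconds as M:SS, or H:MM:SS once the video passes one hour."""
    total_seconds = ms // 1000
    hours, rem = divmod(total_seconds, 3600)
    minutes, seconds = divmod(rem, 60)
    if hours:
        return f"{hours}:{minutes:02}:{seconds:02}"
    return f"{minutes}:{seconds:02}"


def chapters_to_toc(chapters):
    """Render one 'timestamp headline' line per chapter."""
    return "\n".join(f"{format_timestamp(c['start'])} {c['headline']}" for c in chapters)


chapters = [
    {"start": 0, "headline": "Introduction"},
    {"start": 95_000, "headline": "Setting up the project"},
    {"start": 3_725_000, "headline": "Q&A"},
]
print(chapters_to_toc(chapters))
# 0:00 Introduction
# 1:35 Setting up the project
# 1:02:05 Q&A
```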

LLMs are useful here as well. For example, LLM gateway lets users more easily use LLMs to summarize voice data, such as by supplying additional context that’s not explicitly referenced in the audio data, or by requesting a particular summary format.

Speaker Diarization automatically applies speaker labels to a transcription text. Users can then add these to captions or video transcripts to ease readability for viewers. Some video editing platforms are also using Speaker Diarization models, as well as word timings, to create tools that automatically focus on the active speaker during camera changes or to automatically resize videos to center the active speaker, increasing viewer engagement.
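One way an active-speaker feature could consume diarization output is to merge consecutive utterances from the same speaker into a timeline of segments. This is a minimal sketch under assumed inputs: each utterance is a dictionary with hypothetical `speaker`, `start`, and `end` fields (times in milliseconds), ordered by start time.

```python
def active_speaker_segments(utterances):
    """Merge back-to-back utterances by the same speaker into continuous segments."""
    segments = []
    for u in utterances:
        if segments and segments[-1]["speaker"] == u["speaker"]:
            # Same speaker keeps talking: extend the current segment.
            segments[-1]["end"] = u["end"]
        else:
            segments.append({"speaker": u["speaker"], "start": u["start"], "end": u["end"]})
    return segments


utterances = [
    {"speaker": "A", "start": 0, "end": 2000},
    {"speaker": "A", "start": 2100, "end": 4000},
    {"speaker": "B", "start": 4200, "end": 6000},
]
for seg in active_speaker_segments(utterances):
    print(seg["speaker"], seg["start"], seg["end"])
```

Each segment tells the editor who should be in frame and for how long; mapping a speaker label to a position in the video frame would need a separate visual step that is not shown here.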

3. Unlock insights for smarter collaboration

Finally, high-quality video editing platforms help users unlock valuable insights from their video that can be used to boost video performance, as well as to foster smarter collaboration between teams during the editing process.

The Audio Intelligence model Auto Chapters makes it simple to build tools that allow users to share important video segments for manual review directly via the video editing platform or the cloud. Entity Detection, tied to analytics, can determine which video tags or filters are the most/least used, most/least popular, and more. Additional data can be aggregated to perform key analytics that help increase viewership and engage with communities that align with video content.
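As a simple illustration of the tag analytics described above, the sketch below counts how many videos use each tag and reports the most and least used. The mapping of video IDs to tag lists is hypothetical sample data.

```python
from collections import Counter


def tag_usage(video_tags):
    """Count the number of videos each tag appears in, ranked from most to least used."""
    counts = Counter(
        tag
        for tags in video_tags.values()
        for tag in dict.fromkeys(tags)  # de-duplicate tags within a single video
    )
    return counts.most_common()


video_tags = {
    "video_1": ["paris", "travel"],
    "video_2": ["travel", "food"],
    "video_3": ["travel", "paris", "paris"],
}
ranked = tag_usage(video_tags)
print("most used:", ranked[0])
print("least used:", ranked[-1])
```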

Play around with powerful AI models in AssemblyAI’s free, no-code playground.
