How to choose the best speech-to-text API

With more speech-to-text APIs on the market than ever before, how do you choose the best one for your product or use case? Answering these six questions is a great starting point.

Speech-to-text APIs convert spoken words into written text through developer-friendly interfaces. The field has grown exponentially, and market projections show it's expected to reach a market volume of US$73 billion by 2031. These APIs now power everything from AI meeting assistants to call center analytics. With dozens of providers available, choosing the right API requires understanding key evaluation criteria.

This guide walks through everything you need to know about speech-to-text APIs—from how they work and when to use them, to the specific questions that will help you identify the best solution for your needs. We'll cover the technical foundations, explore real-world applications, and provide a framework for evaluating different options based on accuracy, features, support, and other critical factors.

What is a speech-to-text API?

A speech-to-text API converts spoken words into written text through a simple developer interface. You send audio files or streams to an API endpoint and receive accurate transcriptions back. This eliminates the need to build complex Voice AI models from scratch.

How do speech-to-text APIs work?

The process is fairly simple from a developer's perspective. Your application makes a request to the API provider's endpoint, sending an audio file or a live stream of audio data. The provider's AI models then process the audio, converting the spoken words into text. The API returns this transcript to your application, often including additional data like word-level timestamps, speaker labels, and confidence scores. The entire underlying infrastructure for processing the audio at scale is managed by the API provider.

Types of speech-to-text API architectures

Not all speech-to-text APIs use the same architecture. Your choice depends on your specific use case and processing requirements.

Three main API types handle different audio processing needs:

- Asynchronous APIs: Process pre-recorded files and return complete transcripts. Ideal for media content, call recordings, and batch processing.

- Real-time streaming APIs: Handle live audio through persistent connections, returning incremental transcripts. Essential for live captioning and voice assistants.

- On-premise deployment: Run Voice AI models within your private infrastructure for strict security requirements.

The choice between these architectures impacts not just your technical implementation but also your cost structure and scalability. Real-time APIs typically have different pricing models than batch processing. On-premise solutions require significant upfront infrastructure investment but may offer lower long-term costs for high-volume use cases.

Common use cases for speech-to-text APIs

Speech-to-text APIs power voice features across every industry. Here are the most common applications:

- Call centers: Companies like CallSource and Ringostat transcribe customer interactions to improve agent performance

- Media platforms: Services like Veed and Podchaser generate captions and searchable transcripts

- Meeting intelligence: Tools like Circleback AI create automated summaries and action items

Getting started with a speech-to-text API

Integrating a speech-to-text API is usually a quick process. Most providers, including AssemblyAI, follow a similar developer workflow:

- Get an API key: Sign up for a free account to get an API key that authenticates your requests.

- Read the documentation: Review the API docs to understand the available endpoints, parameters, and SDKs for your programming language.

- Make your first request: Send your first audio file to the API and get a transcript back. From there, you can explore more advanced features.

How accurate is the API?

Accuracy is one of the most important considerations when comparing APIs. In fact, a 2024 survey of over 200 tech leaders found that accuracy and quality were among the top three most important factors when evaluating an AI vendor. Word Error Rate (WER) is the standard measure of accuracy for an Automatic Speech Recognition (ASR) system, but it doesn't always tell the full story. For example, recent research from Apple found that a set of transcripts with a 9.2% WER actually had a Human-Readable Word Error Rate (HEWER) of just 1.4%, suggesting the transcripts were far more readable than the standard metric implied.

The most thorough accuracy test involves calculating WER on your actual audio files through these steps:

- Create human transcriptions of your audio files

- Process the same files through the API

- Compare results to compute the error rate

Read More: How Useful is Word Error Rate? →

While modern speech-to-text models can achieve near-human accuracy across challenging conditions, performance can be unequal; for instance, a notable study found a 16 percentage-point gap in transcription accuracy between Black and white speakers. The Universal model handles pre-recorded, noisy audio with high accuracy. For English audio requiring the highest possible accuracy, the Slam-1 model provides advanced performance with customization via prompting.

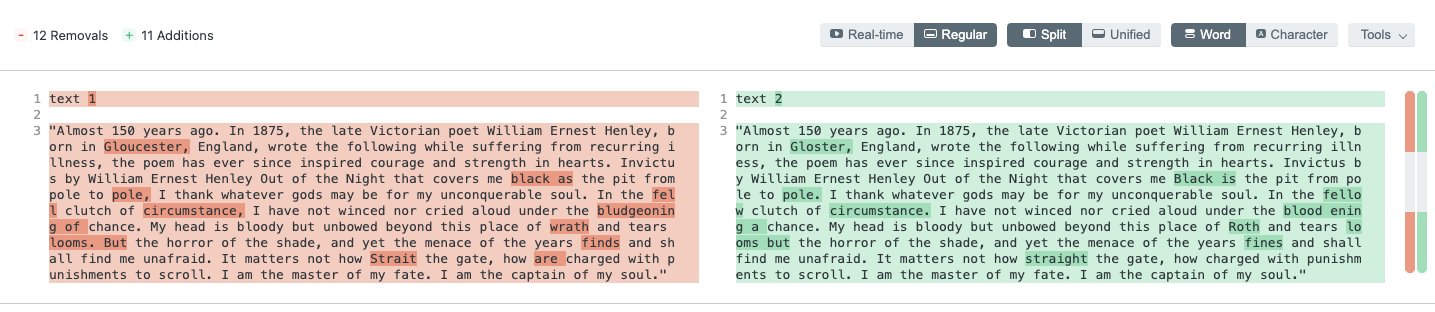

Another great resource for comparing API accuracy is Diffchecker. Diffchecker lets you compare two blocks of text—say from two different APIs or from one API and one human transcription—and shows you what has been added and what has been removed. It also lets you eyeball the differences between two large blocks of texts for a quick comparison.

When using Diffchecker, evaluate these key accuracy factors:

- Missed content: What words or phrases did the API fail to capture?

- Capitalization: Are proper nouns correctly formatted?

- Accent handling: Does speaker dialect affect accuracy?

- Context understanding: Did the API grasp conversational context?

See this text comparison using Diffchecker as an example:

As you can see, Text 1 has 12 removals and Text 2 has 11 additions. Look closely at the highlighted text to spot some of the nuances, such as "black as" in Text 1 vs. "Black is" in Text 2.

Together, WER and Diffchecker can be powerful tools for determining accuracy. This article is also a great option for completing a thorough speech recognition api comparison.

What additional features and models does the API offer?

Next, you should see what additional features the API offers. This will help you get more out of the raw transcription.

Beyond core transcription, you can enable a suite of Speech Understanding models to extract more value from your audio data. Common models include:

- Summarization: Generate summaries of audio files in various formats.

- Speaker Diarization: Identify and label different speakers in the audio.

- PII Redaction: Automatically detect and remove personally identifiable information.

- Auto Chapters: Automatically segment audio into chapters with summaries.

- Topic Detection: Classify audio content based on the IAB standard.

- Content Moderation: Detect sensitive or inappropriate content.

- Paragraph and Sentence Segmentation: Automatically break transcripts into readable paragraphs and sentences.

- Sentiment Analysis: Analyze the sentiment of each sentence.

- Confidence Scores: Get word-level and transcript-level confidence scores.

- Automatic Punctuation and Casing: Improve readability with automatic formatting.

- Profanity Filtering: Censor profane words in the transcript.

- Entity Detection: Identify named entities like people, places, and organizations.

- Accuracy Boosting (Keyterms & Custom Vocabulary): Improve accuracy for specific terms and phrases.

When choosing a speech-to-text API, you should also evaluate how often new features are released and how often the models are updated.

The best speech-to-text APIs maintain dedicated AI research teams for continuous model improvement. Look for these innovation indicators:

- Regular model updates and improvements

- Transparent changelog with detailed release notes

- Active research publications and breakthroughs

Make sure you check the API's changelog and updates, which should be transparent and easily accessible. For example, AssemblyAI ships updates weekly via its publicly accessible changelog. If an API doesn't have a changelog, or doesn't update it very often, this is a red flag.

What kind of support can you expect?

Too often, APIs offered by big tech companies like Google Cloud and AWS go unsupported and are infrequently updated.

It's inevitable that you'll have questions as you build new features, which is why an industry survey found that API and developer resources are a top-five factor for tech leaders when choosing an AI vendor. This is why you should look for an API that offers dedicated, quick support to you and your team of developers. Support should be offered 24/7 via multiple channels such as email, messaging, or Slack.

You should be assigned a dedicated account manager and support engineer that offer integration support, provide quick turnaround on support requests, and help you figure out the best features to integrate.

Also consider:

- Uptime reports (should be at or near 100%)

- Customer reviews and awards on sites like G2

- Accessible changelog with detailed and frequent updates, as discussed above

- Quick, helpful support via multiple channels

Does the API offer transparent pricing and documentation?

API pricing shouldn't be a guessing game. All APIs you are considering should offer transparent, easy-to-decipher pricing as well as volume discounts for high levels of usage. A free trial for the API that lets you explore the API before committing to purchase is even better.

Watch for these common pricing and integration challenges:

- Hidden costs: Google Cloud requires data hosting in GCP Buckets, increasing total expenses

- File size limits: OpenAI Whisper's 25MB chunks complicate large file processing

- Documentation quality: Poor API documentation signals difficult integration

How secure is your data?

Data security becomes critical when processing sensitive voice data, especially as research shows the average cost of a healthcare data breach has reached $10.93 million per incident. Evaluate these essential security measures:

- Encryption: End-to-end encryption for data in transit and at rest

- Compliance certifications: SOC 2 Type 2, GDPR compliance as needed

- Data retention policies: Clear policies on how long audio and transcripts are stored

- Access controls: Robust authentication and authorization mechanisms

For comprehensive guidance, see our detailed analysis of speech-to-text security considerations.

Lorem ipsum dolor sit amet, consectetur adipiscing elit, sed do eiusmod tempor incididunt ut labore et dolore magna aliqua. Ut enim ad minim veniam, quis nostrud exercitation ullamco laboris nisi ut aliquip ex ea commodo consequat. Duis aute irure dolor in reprehenderit in voluptate velit esse cillum dolore eu fugiat nulla pariatur.